Local Graph Clustering Beyond Cheeger's Inequality

Paper and Code

Nov 07, 2013

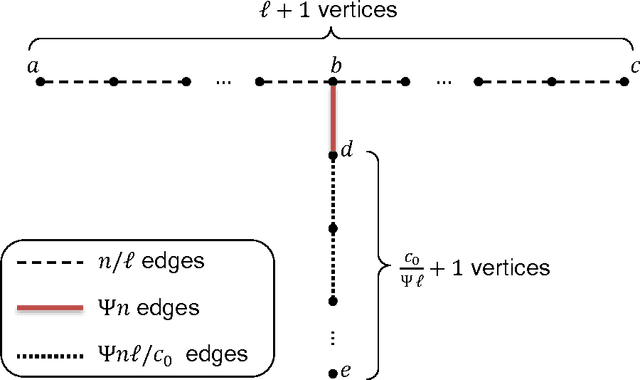

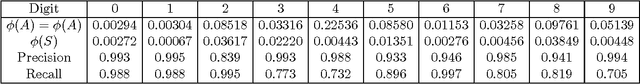

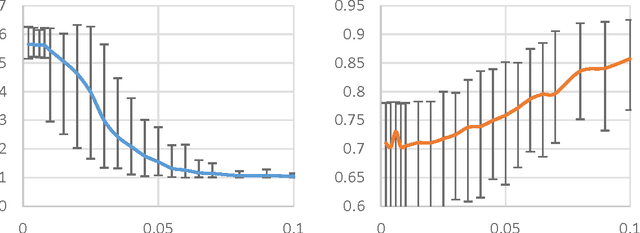

Motivated by applications of large-scale graph clustering, we study random-walk-based LOCAL algorithms whose running times depend only on the size of the output cluster, rather than the entire graph. All previously known such algorithms guarantee an output conductance of $\tilde{O}(\sqrt{\phi(A)})$ when the target set $A$ has conductance $\phi(A)\in[0,1]$. In this paper, we improve it to $$\tilde{O}\bigg( \min\Big\{\sqrt{\phi(A)}, \frac{\phi(A)}{\sqrt{\mathsf{Conn}(A)}} \Big\} \bigg)\enspace, $$ where the internal connectivity parameter $\mathsf{Conn}(A) \in [0,1]$ is defined as the reciprocal of the mixing time of the random walk over the induced subgraph on $A$. For instance, using $\mathsf{Conn}(A) = \Omega(\lambda(A) / \log n)$ where $\lambda$ is the second eigenvalue of the Laplacian of the induced subgraph on $A$, our conductance guarantee can be as good as $\tilde{O}(\phi(A)/\sqrt{\lambda(A)})$. This builds an interesting connection to the recent advance of the so-called improved Cheeger's Inequality [KKL+13], which says that global spectral algorithms can provide a conductance guarantee of $O(\phi_{\mathsf{opt}}/\sqrt{\lambda_3})$ instead of $O(\sqrt{\phi_{\mathsf{opt}}})$. In addition, we provide theoretical guarantee on the clustering accuracy (in terms of precision and recall) of the output set. We also prove that our analysis is tight, and perform empirical evaluation to support our theory on both synthetic and real data. It is worth noting that, our analysis outperforms prior work when the cluster is well-connected. In fact, the better it is well-connected inside, the more significant improvement (both in terms of conductance and accuracy) we can obtain. Our results shed light on why in practice some random-walk-based algorithms perform better than its previous theory, and help guide future research about local clustering.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge