Local Gaussian process extrapolation for BART models with applications to causal inference


Apr 23, 2022

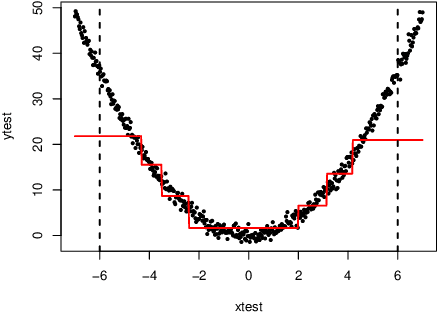

Bayesian additive regression trees (BART) is a semi-parametric regression model offering state-of-the-art performance on out-of-sample prediction. Despite this success, standard implementations of BART typically provide inaccurate predictions and overly narrow prediction intervals at points outside the range of the training data. This paper proposes a novel extrapolation strategy that grafts Gaussian processes to the leaf nodes in BART for predicting points outside the range of the observed data. The new method is compared to standard BART implementations and recent frequentist resampling-based methods for predictive inference. We apply the new approach to a challenging problem from causal inference, wherein for some regions of predictor space, only treated or untreated units are observed (but not both). In simulation studies, the new approach shows superior performance compared to popular alternatives, such as Jackknife+.
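To make the leaf-level idea concrete, the following is a minimal sketch, not the paper's algorithm. It assumes a single hypothetical tree with one fixed split on a one-dimensional predictor, a squared-exponential kernel, and hand-picked hyperparameters (`split`, `length_scale`, `variance`, `noise` are all illustrative choices). Real BART sums many trees and samples them from a posterior, which is omitted here. Inside the training range the sketch returns the usual constant leaf mean; outside it, a zero-mean Gaussian process fitted to the leaf's centred responses supplies the prediction, so uncertainty widens with distance from the data.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)

def gp_predict(x_train, y_train, x_test, noise=0.1, length_scale=1.0, variance=1.0):
    """Posterior mean and standard deviation of a zero-mean GP (latent function)."""
    K = rbf_kernel(x_train, x_train, length_scale, variance)
    K += noise ** 2 * np.eye(len(x_train))          # observation noise on the diagonal
    K_s = rbf_kernel(x_train, x_test, length_scale, variance)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = variance - np.sum(v ** 2, axis=0)         # prior variance minus explained part
    return mean, np.sqrt(np.maximum(var, 0.0))

def leaf_gp_predict(x_train, y_train, x_test, split=0.5, **gp_kwargs):
    """Hypothetical one-split tree: leaf mean inside the data range, leaf-local GP outside."""
    lo, hi = x_train.min(), x_train.max()
    mean = np.empty_like(x_test, dtype=float)
    sd = np.empty_like(x_test, dtype=float)
    leaves = [(x_train <= split, x_test <= split), (x_train > split, x_test > split)]
    for train_mask, test_mask in leaves:
        xl, yl = x_train[train_mask], y_train[train_mask]
        mu = yl.mean()
        # Interior points: piecewise-constant prediction, crude in-leaf spread as sd.
        mean[test_mask] = mu
        sd[test_mask] = yl.std(ddof=1)
        # Exterior points: GP on the leaf's centred responses, added back to the leaf mean.
        outside = test_mask & ((x_test < lo) | (x_test > hi))
        if outside.any():
            m, s = gp_predict(xl, yl - mu, x_test[outside], **gp_kwargs)
            mean[outside] = mu + m
            sd[outside] = s
    return mean, sd

# Synthetic data on [0, 1]; the test points lie increasingly far beyond the range.
rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 40)
y_train = np.sin(3.0 * x_train) + 0.1 * rng.standard_normal(x_train.size)
x_new = np.array([1.05, 1.5, 5.0])
mean, sd = leaf_gp_predict(x_train, y_train, x_new, length_scale=0.3, noise=0.1)
print(mean, sd)
```

In this sketch the posterior standard deviation grows as the test point moves away from the observed range and approaches the prior standard deviation far from the data, while the mean reverts to the leaf mean. That is the qualitative behaviour that a piecewise-constant leaf alone cannot provide.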
