Lifted Model Checking for Relational MDPs

Paper and Code

Jun 22, 2021

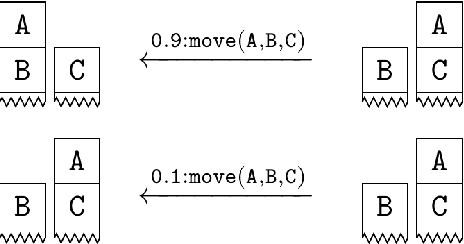

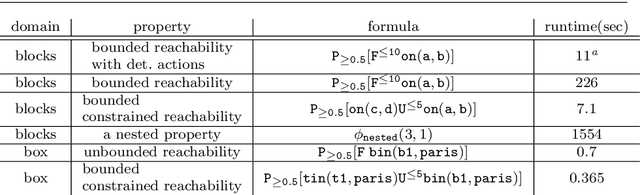

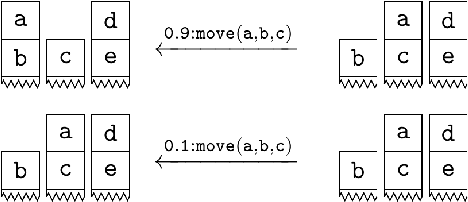

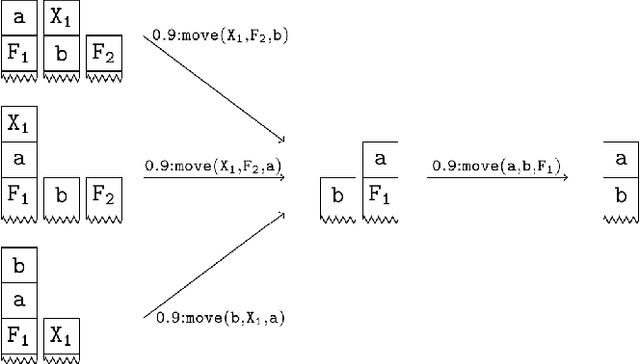

Model checking has been developed for verifying the behaviour of systems with stochastic and non-deterministic behavior. It is used to provide guarantees about such systems. While most model checking methods focus on propositional models, various probabilistic planning and reinforcement frameworks deal with relational domains, for instance, STRIPS planning and relational Markov Decision Processes. Using propositional model checking in relational settings requires one to ground the model, which leads to the well known state explosion problem and intractability. We present pCTL-REBEL, a lifted model checking approach for verifying pCTL properties on relational MDPs. It extends REBEL, the relational Bellman update operator, which is a lifted value iteration approach for model-based relational reinforcement learning, toward relational model-checking. PCTL-REBEL is lifted, which means that rather than grounding, the model exploits symmetries and reasons at an abstract relational level. Theoretically, we show that the pCTL model checking approach is decidable for relational MDPs even for possibly infinite domains provided that the states have a bounded size. Practically, we contribute algorithms and an implementation of lifted relational model checking, and we show that the lifted approach improves the scalability of the model checking approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge