LIFT-CAM: Towards Better Explanations for Class Activation Mapping


Feb 10, 2021

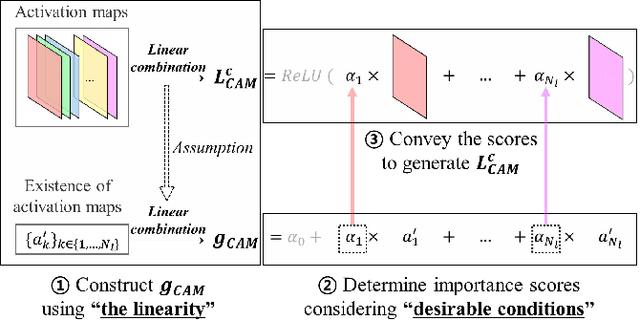

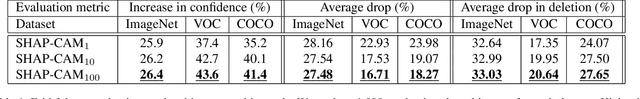

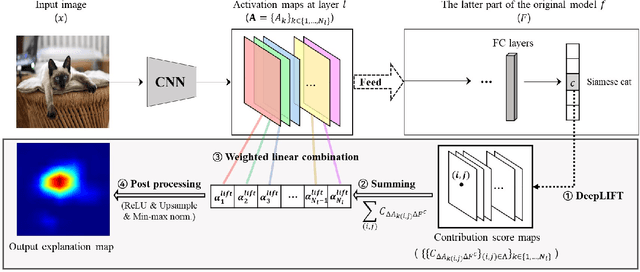

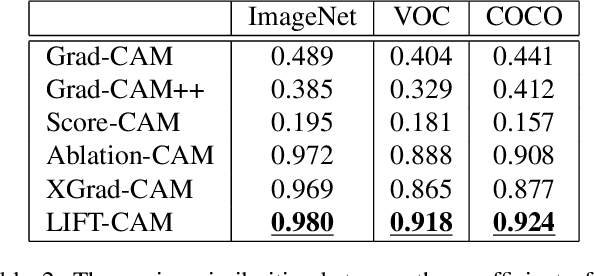

Increasing demand for understanding the internal behavior of convolutional neural networks (CNNs) has led to remarkable improvements in explanation methods. In particular, several methods based on class activation mapping (CAM), which generate visual explanation maps as a linear combination of a CNN's activation maps, have been proposed. However, most of these methods lack a theoretical basis for how they assign the coefficients of that linear combination. In this paper, we revisit the intrinsic linearity of CAM with respect to the activation maps. Building on this linearity, we construct an explanation model as a linear function of binary variables, each of which denotes the presence of the corresponding activation map. With this approach, the explanation model can be determined by the class of additive feature attribution methods, which adopt SHAP values as a unified measure of feature importance. We then demonstrate the efficacy of SHAP values as the weight coefficients for CAM. However, exact SHAP values are intractable to compute. Hence, we introduce an efficient approximation method, referred to as LIFT-CAM. Based on DeepLIFT, our proposed method can estimate the true SHAP values quickly and accurately. Furthermore, it outperforms previous CAM-based methods both qualitatively and quantitatively.
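The idea of treating each activation map as a "player" switched on or off by a binary variable can be illustrated with a toy example. The sketch below is a minimal, pure-Python illustration under stated assumptions, not the authors' implementation. All function names (`gap`, `class_score`, `shapley_values`, `deeplift_rescale`, `cam`) are hypothetical. The "head" after the target layer is an invented toy function (global average pooling, a linear layer, then `max(s, 0)**2`). `deeplift_rescale` uses a single Rescale-rule multiplier with an all-zero reference, which we assume as a stand-in for a DeepLIFT-based approximation; the paper's exact procedure may differ. The final ReLU on the map follows common CAM practice and is also an assumption.

```python
from itertools import combinations
from math import factorial


def gap(a):
    """Global average pooling of one 2-D activation map (a list of rows)."""
    return sum(sum(row) for row in a) / (len(a) * len(a[0]))


def class_score(maps, present, w, b):
    """Hypothetical toy head standing in for the layers after the target layer.
    present[k] plays the role of the binary variable z_k: maps with z_k == 0 are zeroed."""
    pooled = [gap(a) if z else 0.0 for a, z in zip(maps, present)]
    s = sum(wk * p for wk, p in zip(w, pooled)) + b
    return max(s, 0.0) ** 2  # nonlinear, so the weights are not simply w_k * gap(A^k)


def shapley_values(maps, w, b):
    """Exact Shapley value of each activation map, by enumerating coalitions.
    Each map needs all 2**(K - 1) coalitions of the others: exponential in K."""
    K = len(maps)
    phi = [0.0] * K
    for k in range(K):
        others = [j for j in range(K) if j != k]
        for size in range(K):
            weight = factorial(size) * factorial(K - size - 1) / factorial(K)
            for S in combinations(others, size):
                without_k = [1 if j in S else 0 for j in range(K)]
                with_k = [1 if j in S or j == k else 0 for j in range(K)]
                phi[k] += weight * (class_score(maps, with_k, w, b)
                                    - class_score(maps, without_k, w, b))
    return phi


def deeplift_rescale(maps, w, b):
    """Assumed DeepLIFT-style Rescale approximation with an all-zero reference.
    The head's only nonlinearity g(s) = max(s, 0)**2 gets one multiplier
    m = (g(s) - g(s_ref)) / (s - s_ref), shared by every map."""
    deltas = [wk * gap(a) for wk, a in zip(w, maps)]  # each map's share of s - s_ref
    s, s_ref = sum(deltas) + b, b
    g, g_ref = max(s, 0.0) ** 2, max(s_ref, 0.0) ** 2
    # Fall back to the gradient g'(s) when the pre-activation does not change.
    m = (g - g_ref) / (s - s_ref) if s != s_ref else 2.0 * max(s, 0.0)
    return [m * d for d in deltas]


def cam(maps, coeffs):
    """Linear combination of activation maps, followed by a ReLU."""
    H, W = len(maps[0]), len(maps[0][0])
    out = [[0.0] * W for _ in range(H)]
    for a, c in zip(maps, coeffs):
        for i in range(H):
            for j in range(W):
                out[i][j] += c * a[i][j]
    return [[max(v, 0.0) for v in row] for row in out]


# Usage with three made-up 2x2 activation maps and toy head weights.
maps = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
w, b = [1.0, -0.5, 0.5], 0.0

phi = shapley_values(maps, w, b)       # ≈ [0.2917, -0.3333, 0.2917]
approx = deeplift_rescale(maps, w, b)  # [0.25, -0.25, 0.25]
print(cam(maps, phi))                  # ≈ [[0.5833, 0.0], [0.0, 0.5833]]
```

In this toy case both coefficient vectors sum to the same total, `class_score(all maps) - class_score(no maps) = 0.25`. The approximation keeps the signs and ranking of the exact Shapley values but not their magnitudes. Exact enumeration is only feasible here because K = 3. A real target layer with hundreds of channels would require on the order of 2**K coalitions, which is why an approximation is needed.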