LFPS-Net: a lightweight fast pulse simulation network for BVP estimation

Paper and Code

Jun 25, 2022

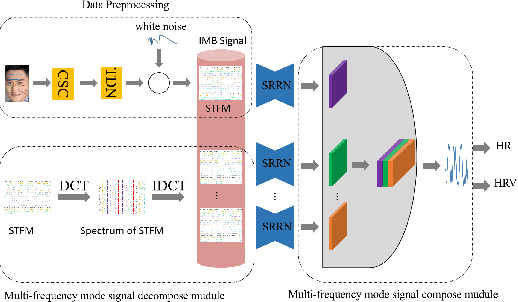

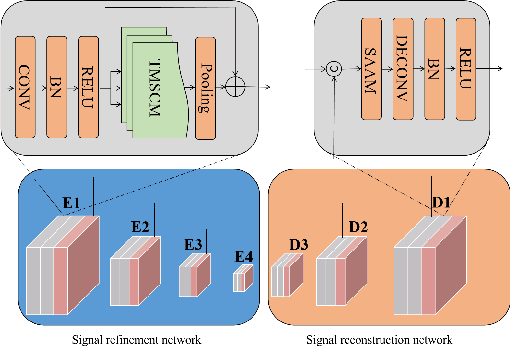

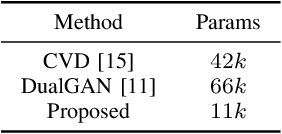

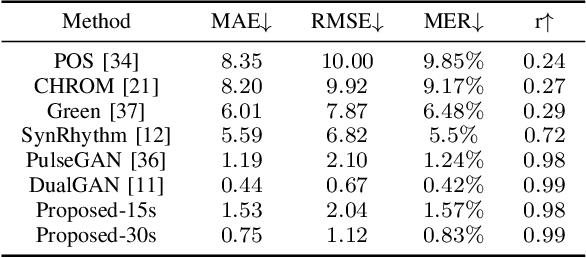

Heart rate (HR) estimation based on remote photoplethysmography plays an important role in scenarios such as health monitoring and fatigue detection. Well-established methods typically average the HRs predicted from multiple overlapping video clips to produce the final result for a 30-second facial video. Although these methods, with hundreds of layers and thousands of channels, are highly accurate and robust, they require an enormous computational budget and a 30-second wait time, which greatly limits their deployment at scale. Under these circumstances, we propose a lightweight fast pulse simulation network (LFPS-Net) that pursues the best accuracy within a very limited computational and time budget, targeting common mobile platforms such as smartphones. To suppress the noise component and obtain a stable pulse in a short time, we design a multi-frequency modal signal fusion mechanism, which uses time-frequency domain analysis to separate multi-modal information from complex signals. It helps the subsequent network learn effective features more easily without adding any parameters. In addition, we design an oversampling training strategy to address the problem caused by the unbalanced distribution of the dataset. For 30-second facial videos, our proposed method achieves the best results on most evaluation metrics for estimating heart rate or heart rate variability compared with the best available methods. The proposed method still obtains very competitive results when using a short (~15-second) facial video.
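The clip-averaging baseline described in the abstract can be illustrated with a minimal sketch. This is not LFPS-Net or any published implementation: the function names, the 10-second clip length, the 5-second stride, the 0.7 to 4.0 Hz search band, and the synthetic sine-wave pulse are all assumptions chosen for illustration. Each clip's HR is taken as the strongest frequency in the band, and the per-clip estimates are averaged.

```python
import math


def estimate_hr_bpm(signal, fps, lo_hz=0.7, hi_hz=4.0, step_hz=0.01):
    """Return the dominant frequency in [lo_hz, hi_hz] as beats per minute.

    Uses a direct Fourier sum on a frequency grid (assumed band and step).
    """
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    best_freq, best_power = lo_hz, -1.0
    n_steps = int(round((hi_hz - lo_hz) / step_hz))
    for k in range(n_steps + 1):
        freq = lo_hz + k * step_hz
        omega = 2 * math.pi * freq / fps
        re = sum(x * math.cos(omega * i) for i, x in enumerate(centered))
        im = sum(x * math.sin(omega * i) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return 60.0 * best_freq


def average_hr_over_clips(signal, fps, clip_seconds=10.0, stride_seconds=5.0):
    """Average per-clip HR estimates over overlapping clips (assumed sizes)."""
    clip_len = int(clip_seconds * fps)
    stride = int(stride_seconds * fps)
    if len(signal) < clip_len:
        raise ValueError("signal is shorter than one clip")
    starts = range(0, len(signal) - clip_len + 1, stride)
    estimates = [estimate_hr_bpm(signal[s:s + clip_len], fps) for s in starts]
    return sum(estimates) / len(estimates)


# Synthetic 30-second pulse at 1.2 Hz sampled at 30 frames per second.
fps = 30
pulse = [math.sin(2 * math.pi * 1.2 * i / fps) for i in range(30 * fps)]
hr = average_hr_over_clips(pulse, fps)
print(f"average HR over overlapping clips: {hr:.1f} bpm")
```

The sketch also makes the latency cost visible: the averaged estimate is only available once the full 30-second signal has been observed, which is the wait time the abstract aims to reduce.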
