Leveraging Product as an Activation Function in Deep Networks

Oct 19, 2018

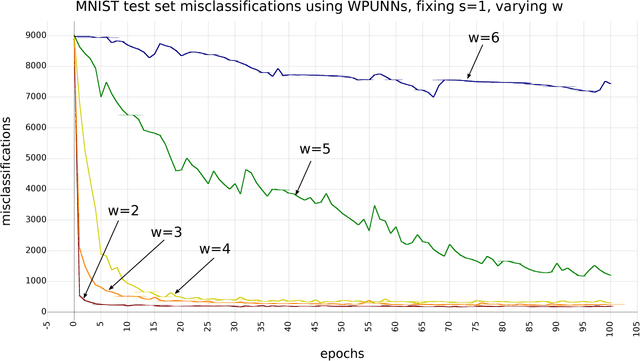

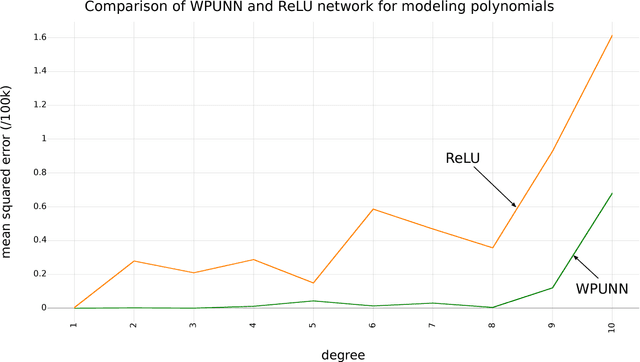

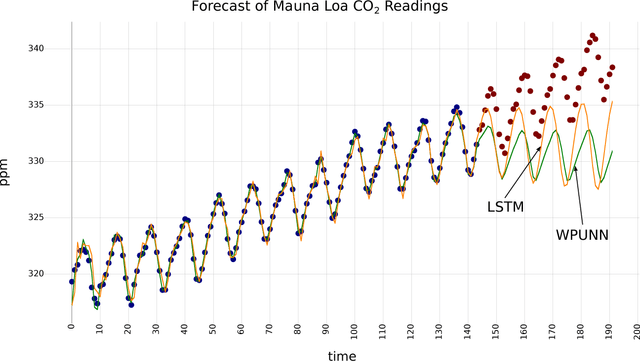

Product unit neural networks (PUNNs) are powerful representational models with a strong theoretical basis, but they have proven difficult to train with gradient-based optimizers. We present windowed product unit neural networks (WPUNNs), a simple method of using the product as a nonlinearity in a neural network. Windowing the product tames the complex gradient surface and enables WPUNNs to learn effectively, solving the problems faced by PUNNs. WPUNNs place product layers between traditional sum layers, capturing the representational power of product units and using the product itself as the nonlinearity. We find that this method performs as well as traditional nonlinearities such as ReLU on the MNIST dataset. We also demonstrate that WPUNNs can generalize gated units in recurrent neural networks, yielding results comparable to LSTM networks.
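
As a rough illustration of a product layer sitting between two sum layers, the sketch below multiplies small groups of adjacent pre-activations and passes the results to the next weighted sum. The abstract does not define the windowing scheme, so the non-overlapping window of size 2, the function names (`dense`, `windowed_product`, `forward`), and the toy weights are all assumptions made for illustration, not the authors' implementation.

```python
from math import prod

def dense(x, weights, bias):
    """Traditional sum layer: one weighted sum per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def windowed_product(x, window=2):
    """Product layer (assumed form): multiply each non-overlapping
    group of `window` adjacent inputs, with no other nonlinearity."""
    if len(x) % window != 0:
        raise ValueError("input length must be a multiple of the window size")
    return [prod(x[i:i + window]) for i in range(0, len(x), window)]

def forward(x, w1, b1, w2, b2, window=2):
    """Sum layer -> windowed product -> sum layer."""
    h = dense(x, w1, b1)             # 4 pre-activations
    p = windowed_product(h, window)  # 2 products act as the nonlinearity
    return dense(p, w2, b2)          # 1 output

# Toy, hand-picked weights (hypothetical, not trained).
x = [1.0, 2.0]
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
w2 = [[1.0, 1.0]]
b2 = [0.5]
y = forward(x, w1, b1, w2, b2)
```

Restricting each product to a small window keeps the degree of each unit low, which is one plausible reading of how windowing could tame the gradient surface; the exact mechanism is described in the paper itself.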