Learning without recall in directed circles and rooted trees


Nov 27, 2016

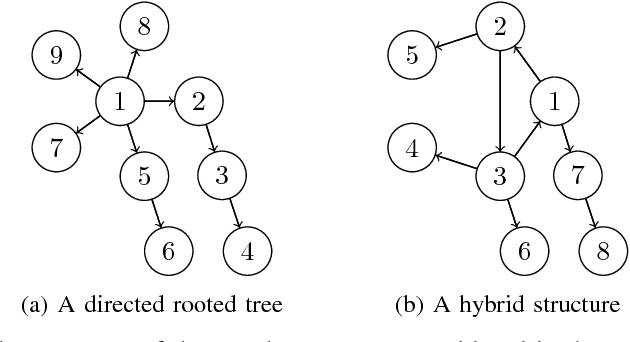

This work investigates a network of agents that attempt to learn an unknown state of the world from among finitely many possibilities. At each time step, every agent receives a random, independently distributed private signal whose distribution depends on the unknown state of the world. However, some or all of the agents may be unable to distinguish between two or more of the possible states based only on their private observations, as happens when several states induce the same distribution of private signals. In our model, the agents form an initial belief (a probability distribution) about the unknown state and then refine their beliefs in accordance with their private observations as well as the beliefs of their neighbors. An agent learns the unknown state when her belief converges to a point mass concentrated at the true state. A rational agent would use Bayes' rule to incorporate her neighbors' beliefs and her own private signals over time. While such repeated applications of Bayes' rule in networks can become computationally intractable, in this paper we show that in the canonical cases of directed star, circle, or path networks and their combinations, one can derive a class of memoryless update rules that replicate the update of a single Bayesian agent but replace the self beliefs with the beliefs of the neighbors. This way, one can realize an exponentially fast rate of learning similar to the case of Bayesian (fully rational) agents. The proposed rules are a special case of Learning without Recall.
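To make the idea of a memoryless rule concrete, the following is a minimal sketch for a directed circle, written from the abstract's description alone and not taken from the paper. It assumes binary private signals, uniform initial beliefs, and that agent `i` observes only agent `i - 1`; the names `bayes_update`, `circle_step`, and `simulate` are hypothetical, and the exact rules derived in the paper may differ in detail (for example, in how priors are handled).

```python
import numpy as np


def bayes_update(prior, likelihood):
    """Single-agent Bayes step: posterior is proportional to prior * likelihood."""
    posterior = np.asarray(prior, dtype=float) * np.asarray(likelihood, dtype=float)
    return posterior / posterior.sum()


def circle_step(beliefs, likelihoods):
    """One memoryless step on a directed circle (illustrative assumption).

    beliefs[i, k]     : agent i's current belief in state k
    likelihoods[i, k] : probability of agent i's observed signal under state k
    Agent i applies Bayes' rule with the belief of agent (i - 1) % n
    in place of its own previous belief.
    """
    beliefs = np.asarray(beliefs, dtype=float)
    neighbor_beliefs = np.roll(beliefs, 1, axis=0)  # row i holds agent i-1's belief
    return np.array(
        [bayes_update(p, l) for p, l in zip(neighbor_beliefs, likelihoods)]
    )


def simulate(signal_probs, true_state, steps, seed=0):
    """Run the circle update with binary signals.

    signal_probs[i, k] is the assumed probability that agent i observes
    signal 1 when the state is k.
    """
    rng = np.random.default_rng(seed)
    signal_probs = np.asarray(signal_probs, dtype=float)
    n_agents, n_states = signal_probs.shape
    beliefs = np.full((n_agents, n_states), 1.0 / n_states)  # uniform initial beliefs
    for _ in range(steps):
        # Each agent draws an independent binary signal under the true state.
        signals = rng.random(n_agents) < signal_probs[:, true_state]
        # Likelihood of the observed signal under every candidate state.
        likelihoods = np.where(signals[:, None], signal_probs, 1.0 - signal_probs)
        beliefs = circle_step(beliefs, likelihoods)
    return beliefs
```

As a usage example, take three agents and three states with `signal_probs = [[0.8, 0.2, 0.2], [0.2, 0.8, 0.2], [0.3, 0.3, 0.7]]` and true state 0. Agent 1 cannot tell state 0 from state 2 on her own, and agent 2 cannot tell state 0 from state 1, yet because beliefs circulate around the ring, each belief ends up accumulating the signals of all three agents, and `simulate(signal_probs, true_state=0, steps=300)` should return beliefs concentrated on state 0 for every agent.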
