Learning to Skip Ineffectual Recurrent Computations in LSTMs

Paper and Code

Nov 29, 2018

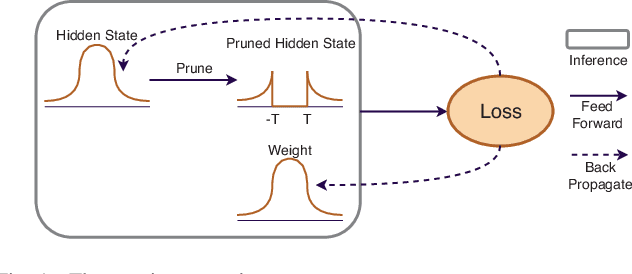

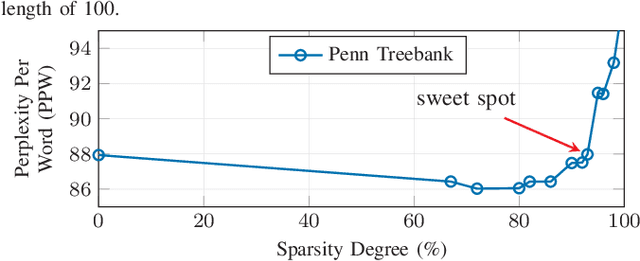

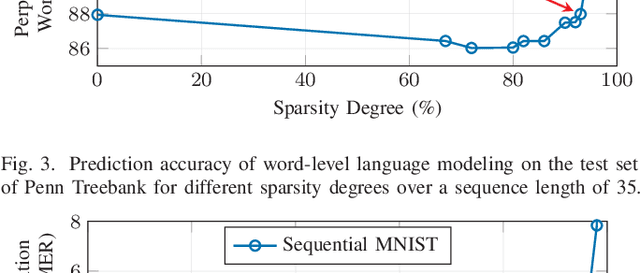

Long Short-Term Memory (LSTM) is a special class of recurrent neural network, which has shown remarkable successes in processing sequential data. The typical architecture of an LSTM involves a set of states and gates: the states retain information over arbitrary time intervals and the gates regulate the flow of information. Due to the recursive nature of LSTMs, they are computationally intensive to deploy on edge devices with limited hardware resources. To reduce the computational complexity of LSTMs, we first introduce a method that learns to retain only the important information in the states by pruning redundant information. We then show that our method can prune over 90% of information in the states without incurring any accuracy degradation over a set of temporal tasks. This observation suggests that a large fraction of the recurrent computations are ineffectual and can be avoided to speed up the process during the inference as they involve noncontributory multiplications/accumulations with zero-valued states. Finally, we introduce a custom hardware accelerator that can perform the recurrent computations using both sparse and dense states. Experimental measurements show that performing the computations using the sparse states speeds up the process and improves energy efficiency by up to 5.2x when compared to implementation results of the accelerator performing the computations using dense states.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge