Learning to Share Autonomy Across Repeated Interaction

Paper and Code

Jul 20, 2021

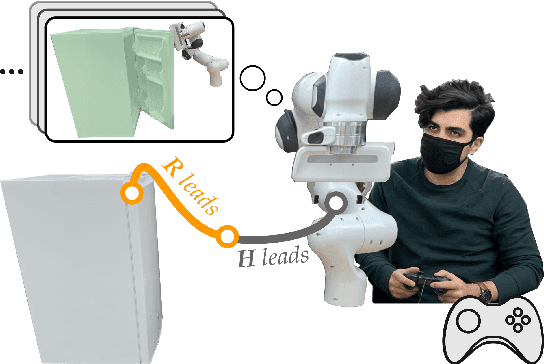

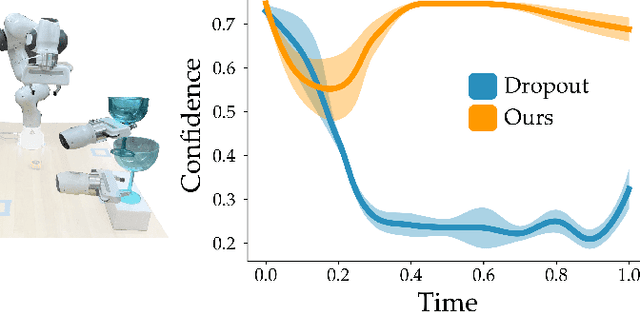

Wheelchair-mounted robotic arms (and other assistive robots) should help their users perform everyday tasks. One way robots can provide this assistance is shared autonomy. Within shared autonomy, both the human and the robot maintain control over the robot's motion: as the robot becomes confident that it understands what the human wants, it increasingly intervenes to automate the task. But how does the robot know what tasks the human may want to perform in the first place? Today's shared autonomy approaches often rely on prior knowledge: for example, the robot must know the set of possible human goals a priori. In the long term, however, this prior knowledge will inevitably break down: sooner or later the human will reach for a goal that the robot did not expect. In this paper we propose a learning approach to shared autonomy that takes advantage of repeated interactions. Learning to assist humans would be impossible if they performed completely different tasks at every interaction, but our insight is that users living with physical disabilities repeat important tasks on a daily basis (e.g., opening the fridge, making coffee, and having dinner). We introduce an algorithm that exploits these repeated interactions to recognize the human's task, replicate similar demonstrations, and return control when unsure. As the human repeatedly works with this robot, our approach continually learns to assist with tasks that were never specified beforehand: these tasks include both discrete goals (e.g., reaching a cup) and continuous skills (e.g., opening a drawer). Across simulations and an in-person user study, we demonstrate that robots leveraging our approach match existing shared autonomy methods for known goals, and outperform imitation learning baselines on new tasks. See videos here: https://youtu.be/NazeLVbQ2og
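The idea of intervening more as confidence grows, and returning control when unsure, can be illustrated with a generic linear blending rule. The sketch below is not the paper's algorithm: the function names, the `min_confidence` threshold, and the linear blend itself are assumptions chosen for illustration, and the confidence value is assumed to come from some separate task-recognition model.

```python
import numpy as np


def blend_actions(human_action, robot_action, confidence):
    """Linearly blend human and robot actions (illustrative assumption).

    confidence is clipped to [0, 1]; 0 means full human control,
    1 means full robot control.
    """
    beta = float(np.clip(confidence, 0.0, 1.0))
    human = np.asarray(human_action, dtype=float)
    robot = np.asarray(robot_action, dtype=float)
    return (1.0 - beta) * human + beta * robot


def shared_autonomy_step(human_action, robot_action, confidence,
                         min_confidence=0.3):
    """Return the commanded action for one control step.

    Below the hypothetical min_confidence threshold the robot is
    treated as unsure and the human's action is passed through unchanged.
    """
    if confidence < min_confidence:
        return np.asarray(human_action, dtype=float)
    return blend_actions(human_action, robot_action, confidence)


if __name__ == "__main__":
    human = [1.0, 0.0]   # joystick input from the user
    robot = [0.0, 1.0]   # action suggested by the robot's learned assistance
    for c in (0.1, 0.5, 0.9):
        print(c, shared_autonomy_step(human, robot, c))
```

With a low confidence the user's input passes through untouched, and as confidence rises the commanded motion shifts toward the robot's suggestion. A real system would replace the fixed threshold and the externally supplied confidence with whatever the learned model provides.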
