Learning to Roam Free from Small-Space Autonomous Driving with A Path Planner


Mar 17, 2018

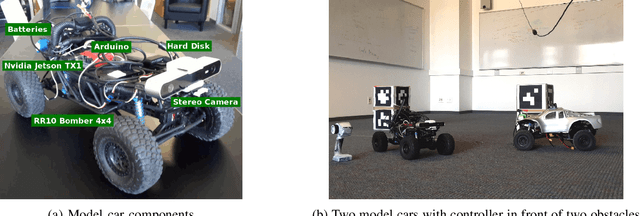

Modern autonomous driving algorithms often learn the mapping from visual inputs to steering actions from human driving data collected across a variety of scenarios and visual scenes. Collecting such data is labor intensive, and the data are often noisy, inconsistent, and inflexible, because they do not differentiate between good and bad drivers or between different driving intentions. We propose a new autonomous driving approach that learns roaming skills from an optimal path planner. Our model car practices reaching random target locations in a small room with obstacles by following the optimal trajectory and executing the steering actions decided by the planner. We learn the associations between driving behaviors and depth images, rather than raw color images of the visual scene. This more universal spatial representation allows the learned driving skills to transfer immediately to novel environments with different visual appearances. Our model car, trained in a simple room devoid of many visual features, demonstrates surprisingly good driving performance in a cluttered office environment, avoiding collisions with novel obstacles in unseen layouts of drivable space. Its performance on outdoor curbside driving is also on par with human driving.
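The training idea in the abstract, labeling depth observations with the actions an optimal planner takes toward random targets and then fitting a policy to those pairs, can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not taken from the paper: the grid `ROOM`, the breadth-first-search planner, the four-ray `depth_scan` standing in for a depth image, and the softmax classifier standing in for the learned driving model.

```python
from collections import deque
import numpy as np

# Hypothetical toy room: 0 = free cell, 1 = obstacle (border is all walls).
ROOM = np.array([
    [1, 1, 1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0, 0, 1],
    [1, 0, 1, 1, 0, 0, 1],
    [1, 0, 0, 0, 0, 1, 1],
    [1, 0, 1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1, 0, 1],
    [1, 1, 1, 1, 1, 1, 1],
])
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left


def distance_field(room, target):
    """Shortest-path distance from every free cell to the target (BFS)."""
    dist = np.full(room.shape, -1)
    dist[target] = 0
    queue = deque([target])
    while queue:
        r, c = queue.popleft()
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            if room[nr, nc] == 0 and dist[nr, nc] < 0:
                dist[nr, nc] = dist[r, c] + 1
                queue.append((nr, nc))
    return dist


def depth_scan(room, cell):
    """Number of free cells before the nearest obstacle along each move."""
    scan = []
    for dr, dc in MOVES:
        r, c = cell
        steps = 0
        while room[r + dr, c + dc] == 0:
            r, c = r + dr, c + dc
            steps += 1
        scan.append(steps)
    return scan


def collect_demonstrations(room, episodes, rng):
    """Drive to random targets along planner paths, logging (scan, action)."""
    free = [tuple(int(v) for v in cell) for cell in np.argwhere(room == 0)]
    observations, actions = [], []
    for _ in range(episodes):
        cell = free[rng.integers(len(free))]
        target = free[rng.integers(len(free))]
        dist = distance_field(room, target)
        while cell != target:
            # Planner action: step to the free neighbour closest to the target.
            options = [
                (dist[cell[0] + dr, cell[1] + dc], a)
                for a, (dr, dc) in enumerate(MOVES)
                if room[cell[0] + dr, cell[1] + dc] == 0
            ]
            _, action = min(options)
            observations.append(depth_scan(room, cell))
            actions.append(action)
            cell = (cell[0] + MOVES[action][0], cell[1] + MOVES[action][1])
    return np.array(observations, dtype=float), np.array(actions)


def fit_softmax_policy(X, y, classes=4, steps=500, lr=0.1):
    """Fit a linear softmax classifier to the planner's actions."""
    X1 = np.hstack([X, np.ones((len(X), 1))])  # append a bias feature
    W = np.zeros((X1.shape[1], classes))
    Y = np.eye(classes)[y]
    for _ in range(steps):
        logits = X1 @ W
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        W -= lr * X1.T @ (P - Y) / len(X1)
    return W


def policy(W, scan):
    """Pick the highest-scoring action for one depth scan."""
    return int(np.argmax(np.append(scan, 1.0) @ W))


rng = np.random.default_rng(0)
X, y = collect_demonstrations(ROOM, 200, rng)
W = fit_softmax_policy(X, y)
```

Because the observation contains only geometry and not the target location, the fitted policy cannot reproduce the planner exactly; in this toy setting it can only pick up how the planner's moves relate to the free space around the car, which is a loose analogue of the roaming behavior the abstract describes.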
