Learning to Learn in Simulation

Paper and Code

Feb 05, 2019

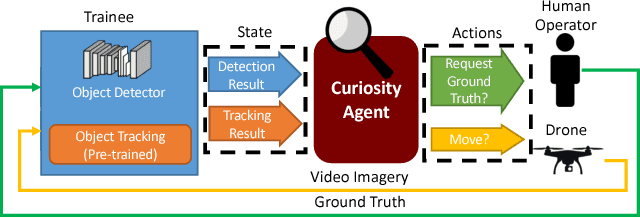

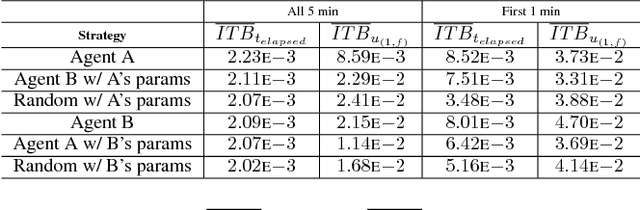

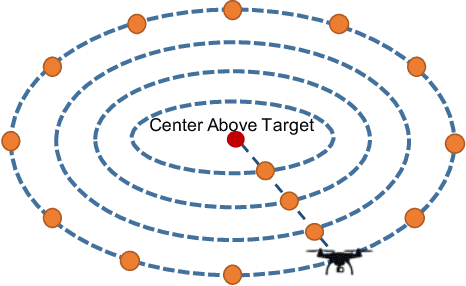

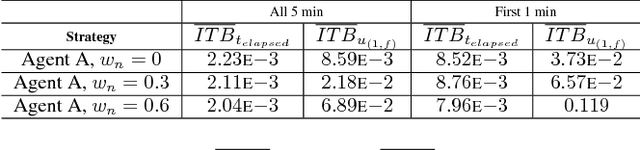

Deep learning often requires the manual collection and annotation of a training set. On robotic platforms, can we partially automate this task by training the robot to be curious, i.e., to seek out beneficial training information in its environment? In this work, we address curiosity as it relates to online, real-time, human-in-the-loop training of an object detection algorithm onboard a drone whose motion is constrained to two dimensions. We use a 3D simulation environment and deep reinforcement learning to train a curiosity agent that, in turn, trains the object detection model. This agent can have one of two conflicting objectives: train as quickly as possible, or train with minimal human input. We outline a reward function that allows the curiosity agent to learn either objective while taking into account some of the physical characteristics of the drone platform on which it is meant to run. In addition, we show that the relative importance of these objectives can be weighted by adjusting a parameter in the reward function.
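The abstract does not state the form of the reward function, so the following is only a hypothetical sketch of how a single parameter could trade off the two objectives. Every name here (`StepOutcome`, `curiosity_reward`, `human_weight`, and the cost constants) is an assumption made for illustration and is not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    """Result of one agent step. All fields are illustrative assumptions."""
    detection_gain: float  # change in detector accuracy after this step
    asked_human: bool      # True if the agent requested a human label
    moved: bool            # True if the drone changed position


def curiosity_reward(
    outcome: StepOutcome,
    human_weight: float,
    step_cost: float = 0.01,   # hypothetical penalty per time step
    query_cost: float = 0.1,   # hypothetical penalty per human query
    move_cost: float = 0.02,   # hypothetical penalty for drone motion
) -> float:
    """Hypothetical reward: human_weight in [0, 1] shifts the emphasis
    from fast training (0) to minimal human input (1)."""
    if not 0.0 <= human_weight <= 1.0:
        raise ValueError("human_weight must be in [0, 1]")

    # Reward improvement of the object detector.
    reward = outcome.detection_gain

    # Time pressure matters most when human_weight is low.
    reward -= (1.0 - human_weight) * step_cost

    # Human queries are penalised most when human_weight is high.
    if outcome.asked_human:
        reward -= human_weight * query_cost

    # Stand-in for a physical cost of moving the drone.
    if outcome.moved:
        reward -= move_cost

    return reward
```

With this shape, `human_weight=0.0` penalises only elapsed steps and motion, while `human_weight=1.0` penalises only human queries and motion. Intermediate values blend the two objectives.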
