Learning to Generate Noise for Robustness against Multiple Perturbations


Jun 22, 2020

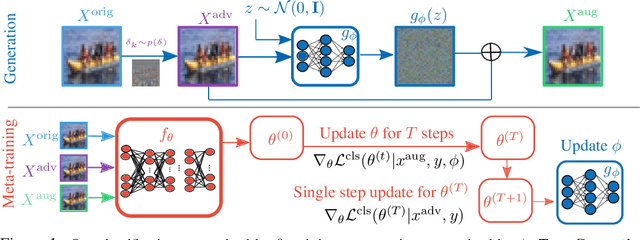

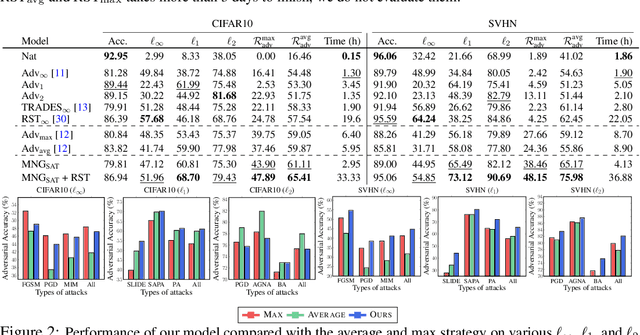

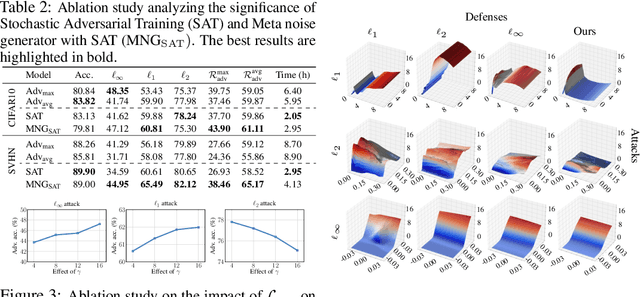

Adversarial learning has emerged as one of the successful techniques for reducing the susceptibility of existing methods to adversarial perturbations. However, most existing defenses are tailored to a single category of adversarial perturbation (e.g., an $\ell_\infty$ attack). In safety-critical applications, this leaves these methods inadequate, since an attacker can adopt diverse adversaries to deceive the system. To tackle this challenge of robustness against multiple perturbations, we propose a novel meta-learning framework that explicitly learns to generate noise that improves the model's robustness against multiple types of attacks. Specifically, we propose the Meta Noise Generator (MNG), which outputs optimal noise to stochastically perturb a given sample so as to lower the error on diverse adversarial perturbations. Training on multiple perturbations simultaneously, however, significantly increases the computational overhead. To address this issue, we train our MNG while randomly sampling an attack at each epoch, which incurs negligible overhead over standard adversarial training. We validate the robustness of our framework on various datasets and against a wide variety of unseen perturbations, demonstrating that it significantly outperforms the relevant baselines across multiple perturbations, at marginal computational cost compared to training on multiple perturbations simultaneously.
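The cost argument in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the attack names in `ATTACKS`, the helpers `project`, `attack_schedule`, and `attack_calls`, and the choice of three norm families are all assumptions made for illustration. The sketch only shows that sampling one attack per epoch requires one adversarial-example generation per batch (as in standard adversarial training), whereas training on every attack simultaneously requires one per attack type.

```python
import random

# Hypothetical attack families; the abstract only names the l_inf attack explicitly.
ATTACKS = ("linf", "l1", "l2")


def project(delta, norm, eps):
    """Map a perturbation (list of floats) into the eps-ball of the given norm.

    l_inf uses coordinate-wise clipping. For l2 and l1 the perturbation is
    rescaled; for l1 this is a simple rescaling, not the exact Euclidean
    projection, which is an assumption made to keep the sketch short.
    """
    if norm == "linf":
        return [max(-eps, min(eps, d)) for d in delta]
    if norm == "l2":
        size = sum(d * d for d in delta) ** 0.5
    elif norm == "l1":
        size = sum(abs(d) for d in delta)
    else:
        raise ValueError(f"unknown norm: {norm}")
    scale = min(1.0, eps / size) if size > 0 else 1.0
    return [d * scale for d in delta]


def attack_schedule(num_epochs, seed=0):
    """Sample one attack type uniformly at random for each epoch."""
    rng = random.Random(seed)
    return [rng.choice(ATTACKS) for _ in range(num_epochs)]


def attack_calls(num_epochs, batches_per_epoch, sample_one):
    """Count adversarial-example generations over a full training run."""
    per_batch = 1 if sample_one else len(ATTACKS)
    return num_epochs * batches_per_epoch * per_batch
```

Under these assumptions, `attack_calls(10, 100, sample_one=True)` counts 1000 attack generations, the same as single-attack adversarial training, while `attack_calls(10, 100, sample_one=False)` counts 3000. The noise generator itself and its meta-learning update are not modeled here.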
