Learning Imbalanced Datasets with Maximum Margin Loss

Paper and Code

Jun 11, 2022

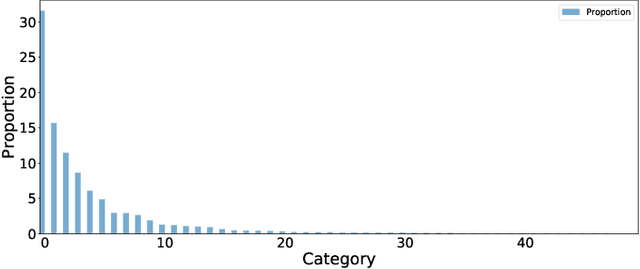

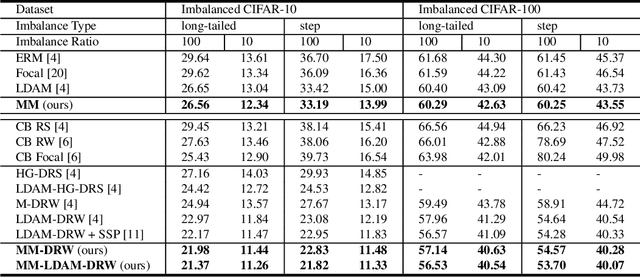

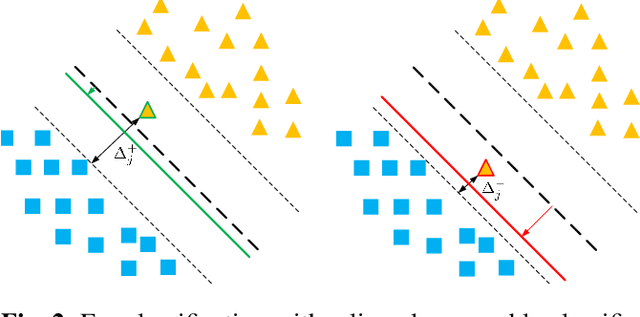

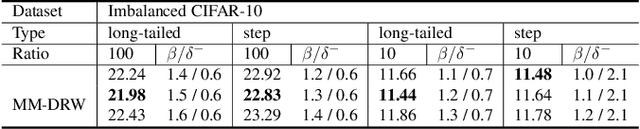

A learning algorithm referred to as Maximum Margin (MM) is proposed for considering the class-imbalance data learning issue: the trained model tends to predict the majority of classes rather than the minority ones. That is, underfitting for minority classes seems to be one of the challenges of generalization. For a good generalization of the minority classes, we design a new Maximum Margin (MM) loss function, motivated by minimizing a margin-based generalization bound through the shifting decision bound. The theoretically-principled label-distribution-aware margin (LDAM) loss was successfully applied with prior strategies such as re-weighting or re-sampling along with the effective training schedule. However, they did not investigate the maximum margin loss function yet. In this study, we investigate the performances of two types of hard maximum margin-based decision boundary shift with LDAM's training schedule on artificially imbalanced CIFAR-10/100 for fair comparisons and effectiveness.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge