Learning How to Trade-Off Safety with Agility Using Deep Covariance Estimation for Perception Driven UAV Motion Planning


Dec 11, 2020

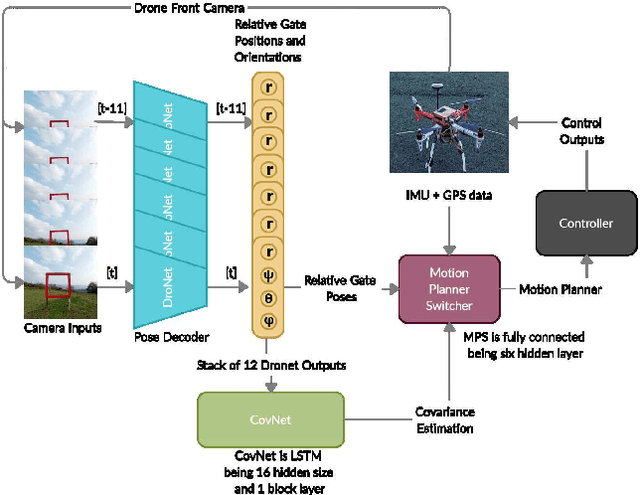

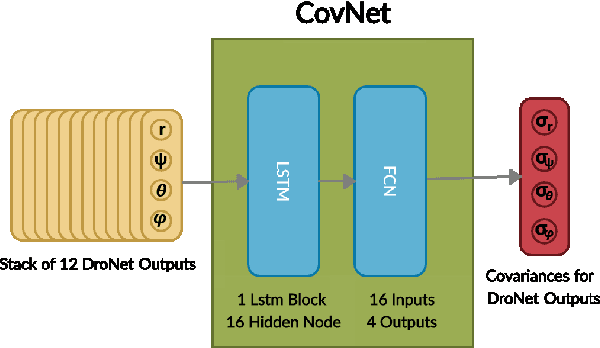

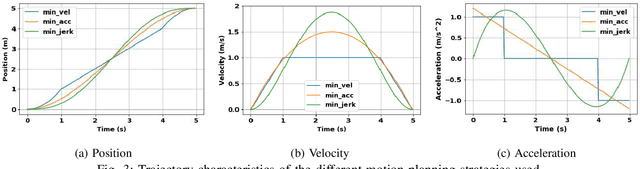

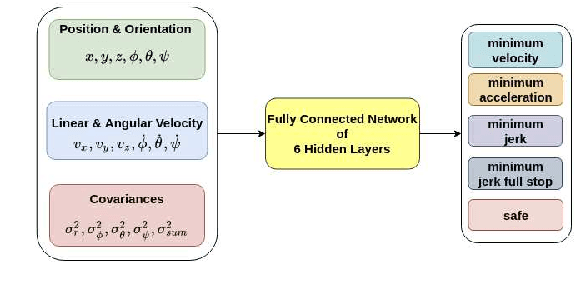

We investigate how to use predictive models to select appropriate motion planning strategies, based on perception uncertainty estimation, for agile unmanned aerial vehicle (UAV) navigation tasks. Although there are a variety of motion planning and perception algorithms for such tasks, many current motion planning algorithms do not explicitly handle the impact of perception uncertainty, which leads to performance loss in real-life scenarios where the measurements are often noisy due to external disturbances. We develop a novel framework for embedding perception uncertainty into high-level motion planning management, in order to select the best available motion planning approach for the currently estimated perception uncertainty. We estimate the uncertainty in visual inputs using a deep neural network (CovNet) that explicitly predicts the covariance of the current measurements. Next, we train a high-level machine learning model to predict the lowest-cost motion planning algorithm given the current covariance estimate as well as the UAV states. We demonstrate on both real-life data and drone racing simulations that our approach, named uncertainty driven motion planning switcher (UDS), yields the safest and fastest trajectories among the compared alternatives. Furthermore, we show that the developed approach learns how to trade off safety with agility by switching to motion planners that lead to more agile trajectories when the estimated covariance is high and vice versa.
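The switching step described above (predict the lowest-cost planner from the current covariance estimate and the UAV state) can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify the model type, the features, or the planners, so the nearest-neighbour classifier, the feature choice (covariance trace and speed), the planner names, and the cost values below are all assumptions made purely for illustration.

```python
import math

# Hypothetical planner names; the abstract does not list the actual planners.
PLANNERS = ["planner_a", "planner_b"]


def features(cov, speed):
    """Assumed feature vector: trace of the covariance matrix and UAV speed."""
    trace = sum(cov[i][i] for i in range(len(cov)))
    return (trace, speed)


def lowest_cost_index(costs):
    """Index of the planner with the lowest observed cost for one sample."""
    return min(range(len(costs)), key=lambda i: costs[i])


class NearestNeighbourSwitcher:
    """Toy stand-in for the high-level model: 1-nearest-neighbour lookup."""

    def __init__(self):
        self.samples = []  # list of (feature vector, best planner index)

    def fit(self, feats, planner_costs):
        # Label each training sample with the planner that was cheapest for it.
        self.samples = [
            (f, lowest_cost_index(c)) for f, c in zip(feats, planner_costs)
        ]
        return self

    def predict(self, feat):
        # Return the label of the closest training sample (Euclidean distance).
        _, label = min(self.samples, key=lambda s: math.dist(s[0], feat))
        return PLANNERS[label]


# Synthetic training data (made up): two covariance levels at the same speed,
# with one cost per planner for each sample.
train_feats = [
    features([[0.1, 0.0], [0.0, 0.1]], 5.0),
    features([[2.0, 0.0], [0.0, 2.0]], 5.0),
]
train_costs = [
    [1.0, 3.0],  # planner_a was cheaper for the first sample
    [4.0, 2.0],  # planner_b was cheaper for the second sample
]

switcher = NearestNeighbourSwitcher().fit(train_feats, train_costs)
choice_low = switcher.predict(features([[0.15, 0.0], [0.0, 0.1]], 5.0))
choice_high = switcher.predict(features([[1.8, 0.0], [0.0, 1.9]], 5.0))
```

In a real pipeline the covariance would come from the learned estimator (CovNet in the paper) and the costs from running each planner; here both are hard-coded so that the selection logic can be read in isolation.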
