Learning from Indirect Observations

Paper and Code

Oct 10, 2019

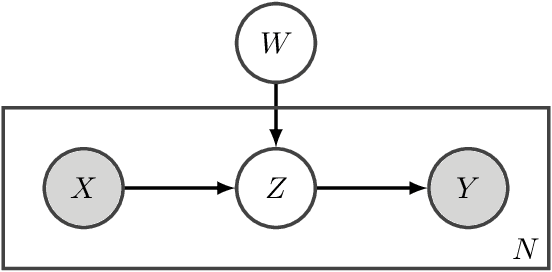

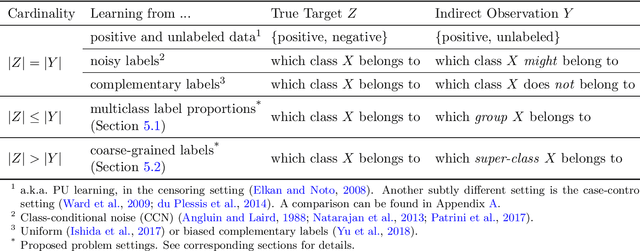

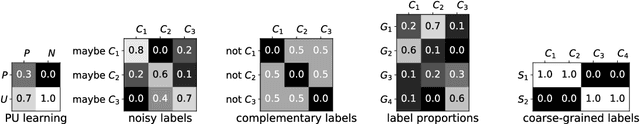

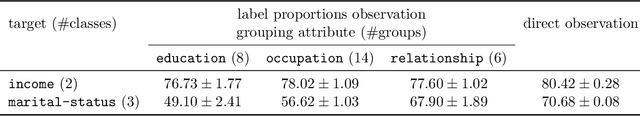

Weakly-supervised learning is a paradigm for alleviating the scarcity of labeled data by leveraging lower-quality but larger-scale supervision signals. While existing work mainly focuses on utilizing a single type of weak supervision, we present a probabilistic framework, learning from indirect observations, for learning from a wide range of weak supervision signals that arise in real-world problems, e.g., noisy labels, complementary labels, and coarse-grained labels. We propose a general method based on the maximum likelihood principle, which has desirable theoretical properties and can be straightforwardly implemented for deep neural networks. Concretely, a discriminative model for the true target is used to model the indirect observation, a random variable that depends entirely on the true target, either stochastically or deterministically. Maximizing the likelihood of the indirect observations then implicitly yields an estimator of the true target. Comprehensive experiments on two novel problem settings, learning from multiclass label proportions and learning from coarse-grained labels, illustrate the practical usefulness of our method and demonstrate how to integrate various sources of weak supervision.
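The maximum likelihood idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes the indirect observation y depends on the true target z only through a known conditional distribution p(y | z), stored as a matrix, so that p(y | x) = sum over z of p(y | z) p(z | x). The function names (`indirect_nll`, `complementary_transition`, `coarse_transition`) and the uniform complementary-label assumption are hypothetical choices made for illustration.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with max-shift for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def indirect_nll(logits, observations, transition):
    """Mean negative log-likelihood of indirect observations.

    logits:       (n, K) model scores for the K true classes
    observations: (n,) integer indices of the indirect observations
    transition:   (K, M) matrix, transition[z, y] = p(y | z) (assumed known)
    """
    p_true = softmax(logits)        # p(z | x)
    p_obs = p_true @ transition     # p(y | x) = sum_z p(y | z) p(z | x)
    picked = p_obs[np.arange(len(observations)), observations]
    return -np.mean(np.log(picked + 1e-12))  # small constant avoids log(0)


def complementary_transition(num_classes):
    """Assumption: a complementary label is drawn uniformly from the
    classes other than the true one (stochastic dependence)."""
    t = np.ones((num_classes, num_classes)) - np.eye(num_classes)
    return t / (num_classes - 1)


def coarse_transition(fine_to_coarse, num_coarse):
    """Each fine class maps to exactly one coarse class
    (deterministic dependence)."""
    fine_to_coarse = np.asarray(fine_to_coarse)
    t = np.zeros((len(fine_to_coarse), num_coarse))
    t[np.arange(len(fine_to_coarse)), fine_to_coarse] = 1.0
    return t


# Usage: three fine classes, two of which share coarse class 0.
logits = np.zeros((2, 3))  # an untrained model predicting uniformly
loss_comp = indirect_nll(logits, np.array([0, 1]), complementary_transition(3))
loss_coarse = indirect_nll(logits, np.array([0, 0]), coarse_transition([0, 0, 1], 2))
```

Under these assumptions, minimizing `indirect_nll` over the model parameters only ever touches the indirect observations, yet the softmax output remains a distribution over the true classes. In a deep learning framework the same loss would presumably be written with automatic differentiation in place of plain NumPy.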
