Learning From Context-Agnostic Synthetic Data

May 29, 2020

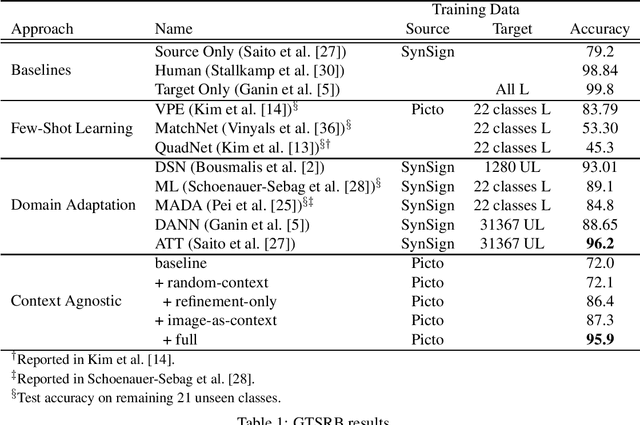

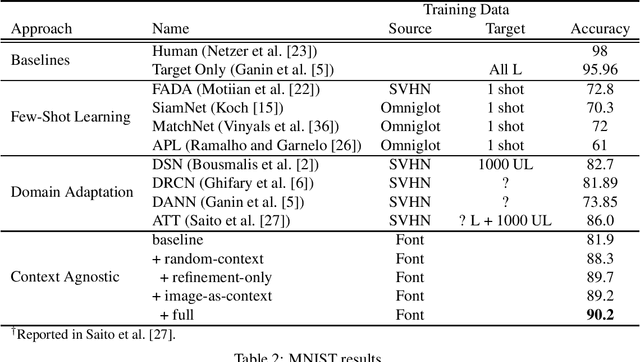

We present a new approach for synthesizing training data given only a single example of each class. Rather than learning from a large but fixed dataset of examples, we generate the entire training set from the synthetic examples provided. The goal is to learn a classifier that generalizes to a non-synthetic domain without pretraining or fine-tuning on any real-world data. We evaluate the approach by training neural networks on two standard real-world image classification benchmarks: on the GTSRB traffic sign recognition benchmark, we achieve 96% test accuracy using only one clean example of each sign on a blank background; on the MNIST handwritten digit benchmark, we achieve 90% test accuracy using a single example of each digit taken from a computer font. Both results are competitive with state-of-the-art results from the few-shot learning and domain transfer literature, while using significantly less data.
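The abstract does not describe the generation procedure itself, so the following is only a minimal sketch of the general idea: drawing an unlimited stream of randomized training images from one clean template per class. The specific perturbations (random background, shift, gain, and Gaussian noise), the function names `synthesize` and `make_batch`, and the toy templates are all assumptions for illustration, not the authors' method.

```python
import numpy as np

def synthesize(template, rng, max_shift=3, noise_std=0.1):
    """Return one randomized image from a single clean class template.

    template: 2-D float array in [0, 1]; pixels equal to 0 are treated as
    blank background (an assumption of this sketch).
    """
    # Fill the blank background with a random intensity.
    background = rng.uniform(0.0, 0.5)
    image = np.where(template > 0, template, background)
    # Random translation (wraps around at the borders).
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    image = np.roll(image, shift=(int(dy), int(dx)), axis=(0, 1))
    # Random brightness gain plus additive pixel noise.
    gain = rng.uniform(0.7, 1.3)
    image = gain * image + rng.normal(0.0, noise_std, size=image.shape)
    return np.clip(image, 0.0, 1.0)

def make_batch(templates, batch_size, rng):
    """Sample class labels uniformly and synthesize one image per label."""
    labels = rng.integers(0, len(templates), size=batch_size)
    images = np.stack([synthesize(templates[k], rng) for k in labels])
    return images, labels

# Hypothetical toy templates: three 16x16 "classes" with distinct shapes.
templates = np.zeros((3, 16, 16))
templates[0, 4:12, 7:9] = 1.0    # vertical bar
templates[1, 7:9, 4:12] = 1.0    # horizontal bar
templates[2, 5:11, 5:11] = 1.0   # filled square

images, labels = make_batch(templates, 8, np.random.default_rng(0))
```

Because every batch is generated on demand, a training loop built on such a function would never see the same image twice; only the per-class templates are fixed.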
