Learning Correction Errors via Frequency-Self Attention for Blind Image Super-Resolution


Mar 12, 2024

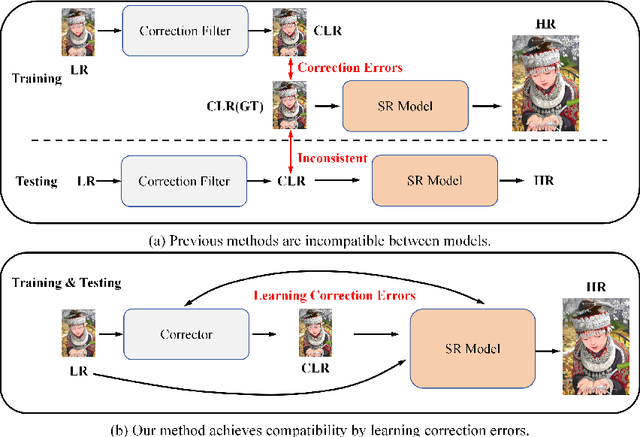

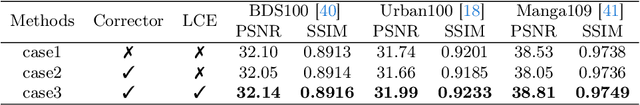

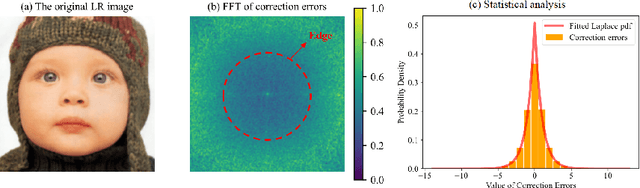

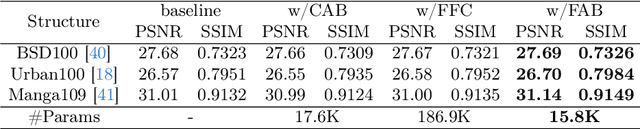

Previous approaches to blind image super-resolution (SR) rely on degradation estimation to restore high-resolution (HR) images from their low-resolution (LR) counterparts. However, estimating the degradation accurately is difficult. Moreover, when the SR model is incompatible with the degradation estimation method, as is notably the case with the Correction Filter, the resulting correction errors can significantly impair performance. In this paper, we introduce a novel blind SR approach that focuses on Learning Correction Errors (LCE). Our method employs a lightweight Corrector to obtain a corrected low-resolution (CLR) image. Then, within an SR network, we jointly optimize SR performance using both the original LR image and frequency learning on the CLR image. Additionally, we propose a new Frequency-Self Attention Block (FSAB) that improves the Transformer's ability to exploit global information by integrating self-attention with frequency spatial attention. Extensive ablation and comparison experiments across various settings demonstrate the superiority of our method in terms of visual quality and accuracy. Our approach effectively addresses the challenges associated with degradation estimation and correction errors, paving the way for more accurate blind image SR.
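
To make the idea of combining self-attention with a frequency-domain branch more concrete, the following is a minimal NumPy sketch. It is not the authors' FSAB: the function names (`frequency_gate`, `self_attention`, `toy_block`), the per-coefficient `gate` weights, the absence of learned projections, and the residual sum of the two branches are all assumptions made purely for illustration, since the abstract does not specify these details.

```python
import numpy as np


def frequency_gate(feat, gate):
    """Reweight the 2-D spectrum of each channel, then return to pixel space.

    feat: real array of shape (C, H, W).
    gate: real array of shape (C, H, W // 2 + 1), one weight per rFFT
          coefficient (hypothetical; a trained model would learn these).
    """
    spec = np.fft.rfft2(feat, axes=(-2, -1))
    return np.fft.irfft2(spec * gate, s=feat.shape[-2:], axes=(-2, -1))


def self_attention(tokens):
    """Single-head scaled dot-product attention with no learned projections.

    tokens: array of shape (N, C); queries, keys and values are all `tokens`.
    """
    d = tokens.shape[-1]
    scores = tokens @ tokens.T / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens


def toy_block(feat, gate):
    """Assumed combination: residual sum of an attention and a frequency branch."""
    c, h, w = feat.shape
    tokens = feat.reshape(c, h * w).T              # (H*W, C): one token per pixel
    attn = self_attention(tokens).T.reshape(c, h, w)
    freq = frequency_gate(feat, gate)
    return feat + attn + freq


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.standard_normal((4, 8, 8))
    gate = np.ones((4, 8, 8 // 2 + 1))             # all-ones gate leaves the spectrum unchanged
    print(toy_block(feat, gate).shape)
```

Because every frequency coefficient depends on all pixels of a channel, even a simple per-coefficient gate mixes information globally, which is one plausible reading of why a frequency branch could complement window-based self-attention.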
