Learning Causal Models of Autonomous Agents using Interventions

Paper and Code

Aug 21, 2021

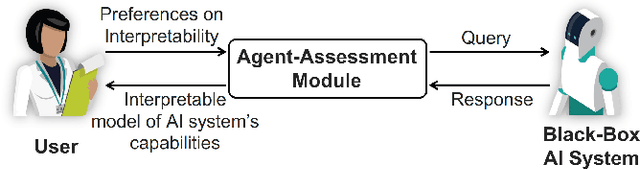

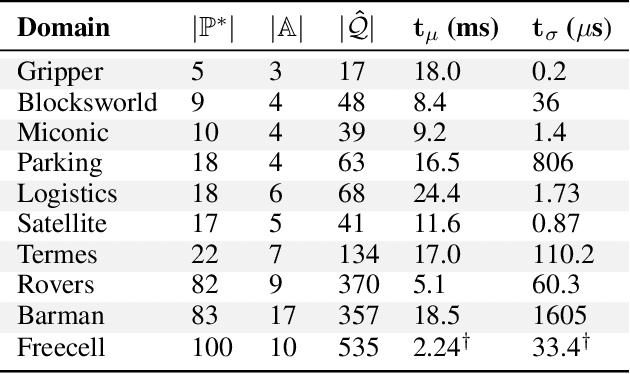

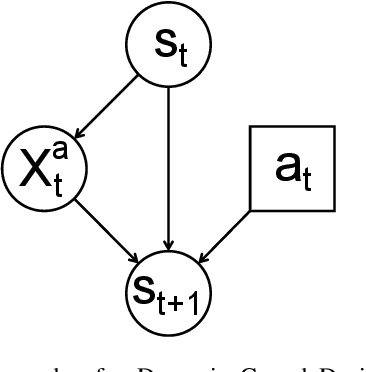

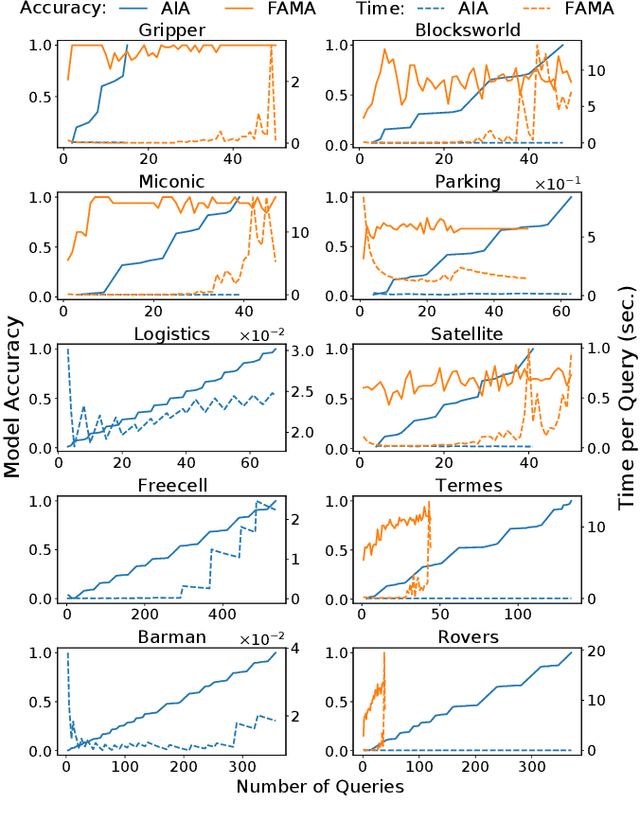

One of the several obstacles in the widespread use of AI systems is the lack of requirements of interpretability that can enable a layperson to ensure the safe and reliable behavior of such systems. We extend the analysis of an agent assessment module that lets an AI system execute high-level instruction sequences in simulators and answer the user queries about its execution of sequences of actions. We show that such a primitive query-response capability is sufficient to efficiently derive a user-interpretable causal model of the system in stationary, fully observable, and deterministic settings. We also introduce dynamic causal decision networks (DCDNs) that capture the causal structure of STRIPS-like domains. A comparative analysis of different classes of queries is also presented in terms of the computational requirements needed to answer them and the efforts required to evaluate their responses to learn the correct model.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge