Learning and Testing Junta Distributions with Subcube Conditioning

Paper and Code

Apr 26, 2020

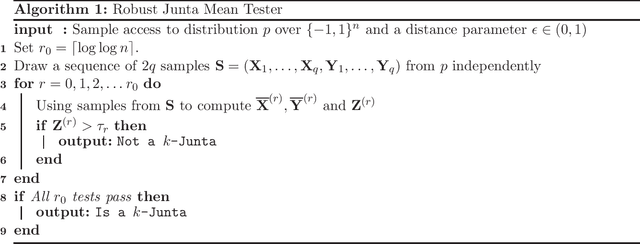

We study the problems of learning and testing junta distributions on $\{-1,1\}^n$ with respect to the uniform distribution, where a distribution $p$ is a $k$-junta if its probability mass function $p(x)$ depends on a subset of at most $k$ variables. The main contribution is an algorithm for finding relevant coordinates in a $k$-junta distribution with subcube conditioning [BC18, CCKLW20]. We give two applications: 1. An algorithm for learning $k$-junta distributions with $\tilde{O}(k/\epsilon^2) \log n + O(2^k/\epsilon^2)$ subcube conditioning queries, and 2. An algorithm for testing $k$-junta distributions with $\tilde{O}((k + \sqrt{n})/\epsilon^2)$ subcube conditioning queries. All our algorithms are optimal up to poly-logarithmic factors. Our results show that subcube conditioning, as a natural model for accessing high-dimensional distributions, enables significant savings in learning and testing junta distributions compared to the standard sampling model. This addresses an open question posed by Aliakbarpour, Blais, and Rubinfeld [ABR17].

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge