Learned Variable-Rate Image Compression with Residual Divisive Normalization


Dec 11, 2019

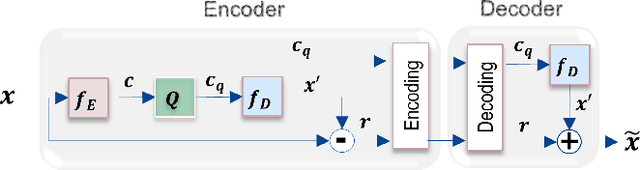

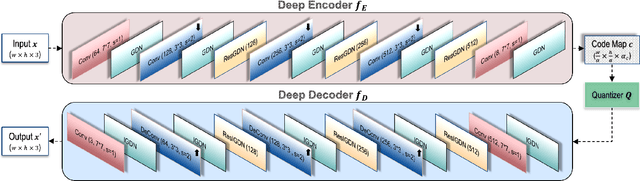

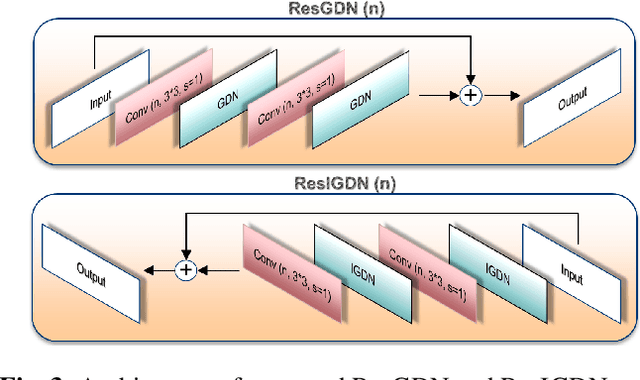

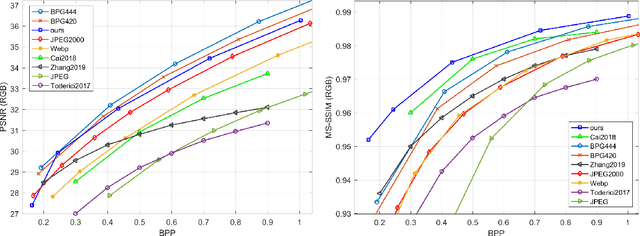

Deep learning-based image compression has recently shown the potential to outperform traditional codecs. However, most existing methods train a separate network for each bit rate, which increases implementation complexity. In this paper, we propose a variable-rate image compression framework that employs more Generalized Divisive Normalization (GDN) layers than previous GDN-based methods. Novel GDN-based residual sub-networks are also developed in the encoder and decoder networks. Our scheme also uses stochastic rounding-based scalable quantization. To further improve performance, we encode the residual between the input and the reconstructed image from the decoder network as an enhancement layer. To enable a single model to operate at different bit rates and to learn multi-rate image features, a new objective function is introduced. Experimental results show that the proposed framework, trained with the variable-rate objective function, outperforms all standard codecs, including the H.265/HEVC-based BPG, as well as state-of-the-art learning-based variable-rate methods.
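To make two of the building blocks named in the abstract more concrete, the following is a minimal sketch in plain Python, not the paper's implementation. It assumes the commonly used GDN form y_i = x_i / sqrt(beta_i + sum_j gamma_ij * x_j^2), applied across channels at a single spatial position, and the textbook definition of stochastic rounding. The function names `gdn` and `stochastic_round` and the parameter values are illustrative assumptions; the paper's scalable quantizer and residual sub-networks are not reproduced here.

```python
import math
import random


def gdn(x, beta, gamma):
    """GDN across channels at one spatial position (assumed standard form).

    y[i] = x[i] / sqrt(beta[i] + sum_j gamma[i][j] * x[j] ** 2)
    """
    squares = [v * v for v in x]
    out = []
    for i, v in enumerate(x):
        # Weighted pool of squared activations from all channels.
        pool = beta[i] + sum(g * s for g, s in zip(gamma[i], squares))
        out.append(v / math.sqrt(pool))
    return out


def stochastic_round(value, rng=random):
    """Round down or up at random so the expected result equals value."""
    lower = math.floor(value)
    frac = value - lower
    # Round up with probability equal to the fractional part.
    return lower + (1 if rng.random() < frac else 0)


if __name__ == "__main__":
    # Hypothetical two-channel activation and parameters, for illustration only.
    print(gdn([3.0, 4.0], beta=[0.0, 0.0], gamma=[[1.0, 1.0], [1.0, 1.0]]))
    rng = random.Random(0)
    samples = [stochastic_round(2.3, rng) for _ in range(10000)]
    print(sum(samples) / len(samples))
```

In a trained network, `beta` and `gamma` would be learned per layer, and the normalization would be applied at every spatial position of a feature map. Stochastic rounding is unbiased on average, which is one reason it is used as a training-time stand-in for hard rounding.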
