Learned Finite-Time Consensus for Distributed Optimization

Paper and Code

Apr 10, 2024

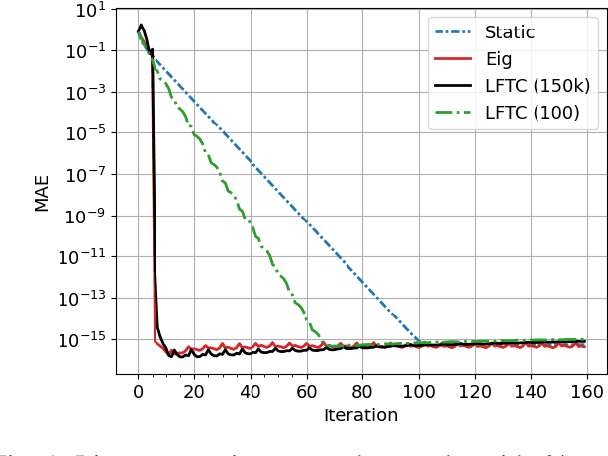

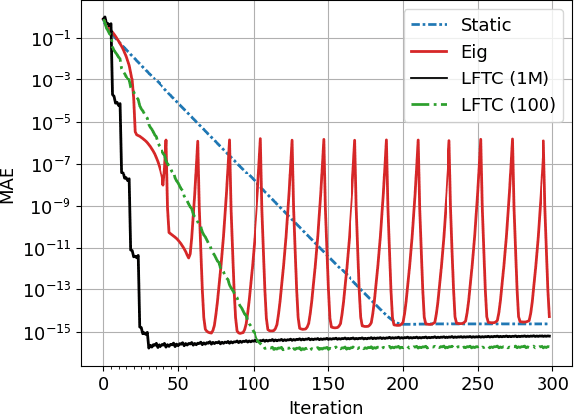

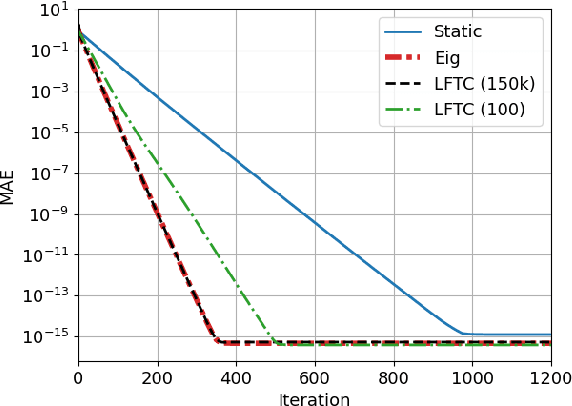

Most algorithms for decentralized learning employ a consensus or diffusion mechanism to drive agents to a common solution of a global optimization problem. Generally, this takes the form of linear averaging, whose rate of contraction is determined by the mixing rate of the underlying network topology. For very sparse graphs, this can create a bottleneck that slows the convergence of the learning algorithm. We show that a sequence of matrices achieving finite-time consensus can be learned for unknown graph topologies in a decentralized manner by solving a constrained matrix factorization problem. We numerically demonstrate the benefit of the resulting scheme on both structured and unstructured graphs.
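To make the notion of finite-time consensus concrete, here is a minimal NumPy sketch. It does not reproduce the learning procedure from the paper. It uses a textbook case, the hypercube with n = 2^k agents, where a sequence of k sparse averaging matrices is known in closed form and their product equals the exact averaging matrix (1/n)11^T. The helper names `hypercube_matrices` and `ring_matrix`, the uniform ring weights of 1/3, and the choice k = 3 are assumptions made for illustration only.

```python
import numpy as np


def hypercube_matrices(k):
    """Return k sparse mixing matrices for n = 2**k agents.

    In matrix j, agent i averages its value with the agent whose
    index differs from i in bit j (a hypercube edge).
    """
    n = 2 ** k
    mats = []
    for j in range(k):
        W = np.zeros((n, n))
        for i in range(n):
            W[i, i] = 0.5
            W[i, i ^ (1 << j)] = 0.5
        mats.append(W)
    return mats


def ring_matrix(n):
    """Static averaging matrix on a ring, with uniform weights of 1/3 (illustrative choice)."""
    W = np.zeros((n, n))
    for i in range(n):
        W[i, i] = 1.0 / 3.0
        W[i, (i - 1) % n] = 1.0 / 3.0
        W[i, (i + 1) % n] = 1.0 / 3.0
    return W


k = 3
n = 2 ** k
x0 = np.random.default_rng(0).normal(size=n)  # one scalar per agent
avg = x0.mean()

# Finite-time consensus: apply the k time-varying matrices once each.
x = x0.copy()
product = np.eye(n)
for W in hypercube_matrices(k):
    x = W @ x
    product = W @ product
finite_time_error = np.max(np.abs(x - avg))

# Static linear averaging on a ring for the same number of rounds.
y = x0.copy()
W_ring = ring_matrix(n)
for _ in range(k):
    y = W_ring @ y
ring_error = np.max(np.abs(y - avg))

print(f"hypercube sequence, {k} rounds: max error = {finite_time_error:.2e}")
print(f"static ring,        {k} rounds: max error = {ring_error:.2e}")
```

After k rounds the hypercube sequence reaches the average up to floating-point error, while static averaging on the ring only contracts geometrically and still carries a visible error. The abstract's contribution concerns graphs where no such closed-form sequence is available, so the matrices have to be learned.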
