Learn Electronic Health Records by Fully Decentralized Federated Learning

Dec 10, 2019

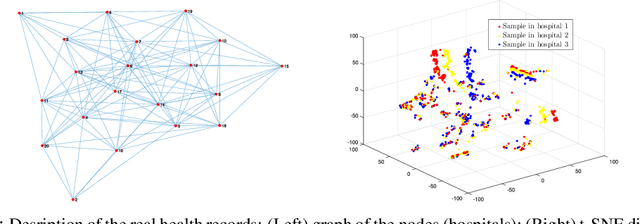

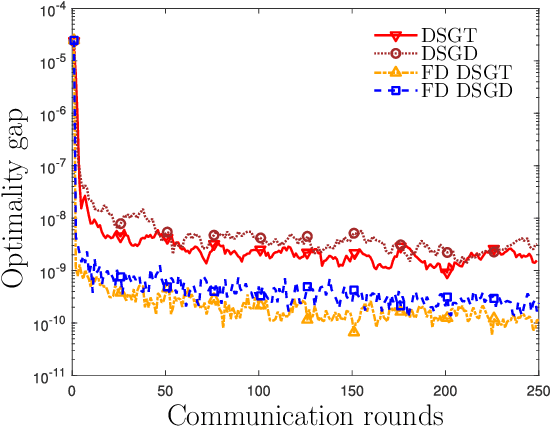

Federated learning has opened a number of research opportunities because of its high communication efficiency in distributed training over a star network. In this paper, we focus on improving communication efficiency for fully decentralized federated learning over a graph, where the algorithm performs local updates for several iterations before the nodes communicate with one another. In this way, the number of communication rounds needed to exchange the parameters of common interest is reduced significantly without loss of optimality of the solutions. Multiple numerical simulations on large, real-world electronic health record databases demonstrate the advantage of decentralized federated learning over classic methods.
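The pattern described in the abstract, several local updates followed by one round of communication among neighboring nodes, can be illustrated with a minimal sketch. This is not the paper's algorithm. It is a generic local-update-plus-neighbor-averaging loop under assumptions chosen for illustration: each node holds a toy quadratic objective `0.5 * (w - target)**2`, the graph is a ring with at least three nodes, and every node averages with its two neighbors using uniform weights of 1/3. The names `ring_neighbors` and `decentralized_local_updates` are hypothetical.

```python
def ring_neighbors(n):
    """Neighbor lists for a ring graph; assumes n >= 3 so the two neighbors differ."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def decentralized_local_updates(targets, rounds=50, local_steps=5, lr=0.1):
    """Toy decentralized training: local gradient steps, then neighbor averaging.

    Node i minimizes 0.5 * (w - targets[i])**2 (an assumed toy objective),
    so the minimizer of the summed objective is the mean of the targets.
    """
    n = len(targets)
    neighbors = ring_neighbors(n)
    w = [0.0] * n  # one scalar parameter per node

    for _ in range(rounds):
        # Local phase: each node takes several gradient steps with no communication.
        for i in range(n):
            for _ in range(local_steps):
                grad = w[i] - targets[i]
                w[i] -= lr * grad

        # Communication phase: one exchange with graph neighbors only.
        # Uniform 1/3 weights on a ring form a symmetric, doubly stochastic
        # mixing matrix, so this step preserves the network-wide average.
        w = [(w[i] + sum(w[j] for j in neighbors[i])) / 3.0 for i in range(n)]

    return w


targets = [1.0, 2.0, 3.0, 4.0, 6.0]
params = decentralized_local_updates(targets)
print("per-node parameters:", params)
print("network average:", sum(params) / len(params))
```

With `local_steps=5`, the nodes communicate once per five gradient steps rather than after every step, which is the communication saving the abstract refers to. In this toy setting with a constant step size, the network average approaches the mean of the targets while individual nodes retain some disagreement; the paper's claim of no loss of optimality concerns its own method, which this sketch does not reproduce.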
