LDNet: End-to-End Lane Detection Approach using a Dynamic Vision Sensor


Sep 17, 2020

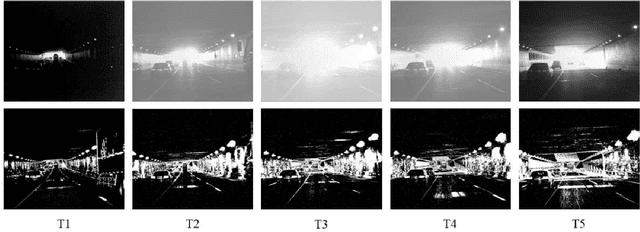

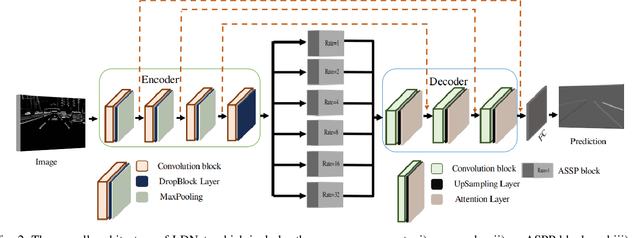

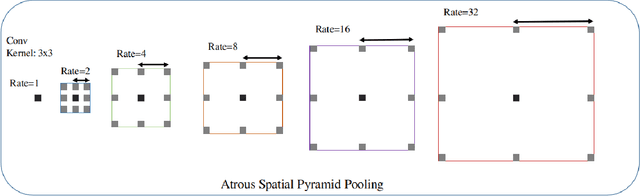

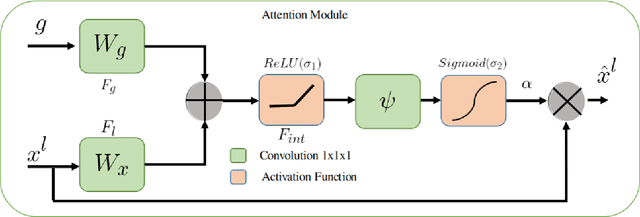

Modern vehicles are equipped with various driver-assistance systems, including automatic lane keeping, which prevents unintended lane departures. Traditional lane detection methods use RGB cameras and rely on handcrafted or deep learning-based features, followed by postprocessing steps that extract the lanes. RGB-based lane detection is susceptible to illumination variations, sun glare, and motion blur, which limit its performance. Incorporating an event camera into the perception stack of an autonomous vehicle is one of the most promising ways to mitigate these challenges. In this work, Lane Detection using a dynamic vision sensor (LDNet) is proposed. LDNet follows an encoder-decoder design, with an atrous spatial pyramid pooling block followed by an attention-guided decoder that predicts lanes while reducing false predictions. This decoder removes the need for a separate postprocessing step. Experimental results show significant improvements of $5.54\%$ and $5.03\%$ in $F_1$ score on the multiclass and binary class lane detection tasks, respectively. Additionally, the $\mathrm{IoU}$ scores of the proposed method surpass those of the best-performing state-of-the-art method by $6.50\%$ and $9.37\%$ on the multiclass and binary class tasks, respectively.
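
The abstract does not specify how the atrous spatial pyramid pooling (ASPP) block is configured. As a rough illustration of the general technique (popularized by DeepLab), not of LDNet's actual layer, the minimal pure-Python sketch below applies one 3×3 kernel at several dilation rates in parallel, plus an image-level average-pooling branch, to a single-channel feature map. The function names `dilated_conv2d` and `aspp`, the shared fixed kernel, and the rates `(1, 2, 3)` are hypothetical choices for illustration; in a real ASPP block each branch has its own learned weights and the branch outputs are concatenated and fused, typically with a 1×1 convolution.

```python
from typing import List, Sequence

# A single-channel feature map, stored row-major as nested lists.
Grid = List[List[float]]


def dilated_conv2d(x: Grid, kernel: Grid, rate: int) -> Grid:
    """Atrous (dilated) 2D convolution, cross-correlation convention as in most
    deep-learning frameworks, with zero padding so the output keeps the input size.
    Assumes a square kernel with an odd side length."""
    h, w = len(x), len(x[0])
    k = len(kernel)
    center = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    # Kernel taps are spread `rate` pixels apart around (i, j).
                    r = i + (u - center) * rate
                    c = j + (v - center) * rate
                    if 0 <= r < h and 0 <= c < w:  # zero padding outside the map
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def aspp(x: Grid, kernel: Grid, rates: Sequence[int]) -> List[Grid]:
    """Hypothetical ASPP-style block: one dilated branch per rate plus an
    image-level pooling branch. Returns the branch outputs as a channel stack.
    Simplification: all dilated branches share one fixed kernel, whereas a real
    ASPP block learns separate weights per branch and fuses the stack afterwards."""
    branches = [dilated_conv2d(x, kernel, rate) for rate in rates]
    h, w = len(x), len(x[0])
    # Image-level branch: global average pooling, broadcast back to full size.
    mean = sum(sum(row) for row in x) / (h * w)
    branches.append([[mean] * w for _ in range(h)])
    return branches


if __name__ == "__main__":
    # Impulse input: a single active pixel in the middle of a 7x7 map.
    x = [[0.0] * 7 for _ in range(7)]
    x[3][3] = 1.0
    ones_3x3 = [[1.0] * 3 for _ in range(3)]
    for rate, fmap in zip((1, 2, 3), aspp(x, ones_3x3, (1, 2, 3))):
        rows = sorted({i for i, row in enumerate(fmap) if any(row)})
        print(f"rate={rate}: active rows {rows}")
```

With an impulse input, the non-zero outputs of each dilated branch sit `rate` pixels apart around the impulse (rows 2 to 4 at rate 1, rows 1, 3, 5 at rate 2, rows 0, 3, 6 at rate 3). This shows how a larger dilation rate widens the receptive field without adding kernel weights, which is why ASPP can capture both thin local lane markings and wider scene context.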
