Layerwise Noise Maximisation to Train Low-Energy Deep Neural Networks

Paper and Code

Dec 23, 2019

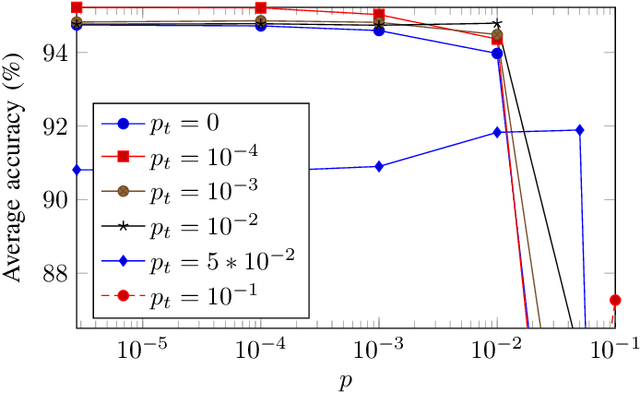

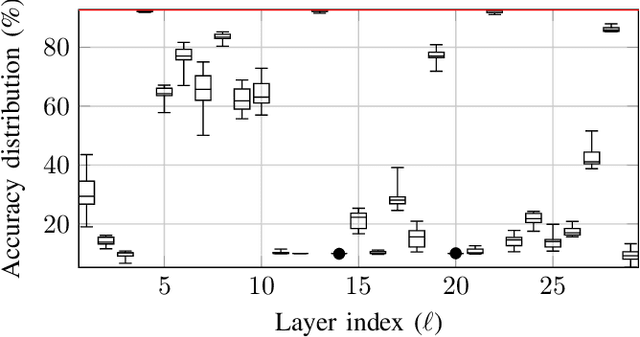

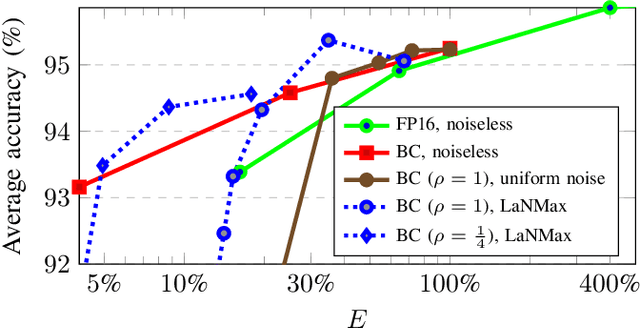

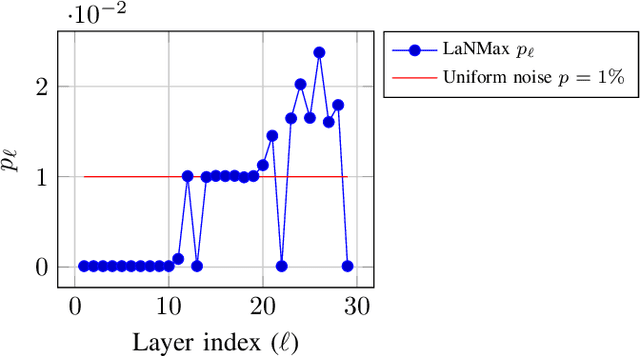

Deep neural networks (DNNs) depend on the storage of a large number of parameters, which consumes an important portion of the energy used during inference. This paper considers the case where the energy usage of memory elements can be reduced at the cost of reduced reliability. A training algorithm is proposed to optimize the reliability of the storage separately for each layer of the network, while incurring a negligible complexity overhead compared to a conventional stochastic gradient descent training. For an exponential energy-reliability model, the proposed training approach can decrease the memory energy consumption of a DNN with binary parameters by 3.3$\times$ at isoaccuracy, compared to a reliable implementation.

* To be presented at AICAS 2020

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge