Latency Aware Semi-synchronous Client Selection and Model Aggregation for Wireless Federated Learning

Paper and Code

Oct 19, 2022

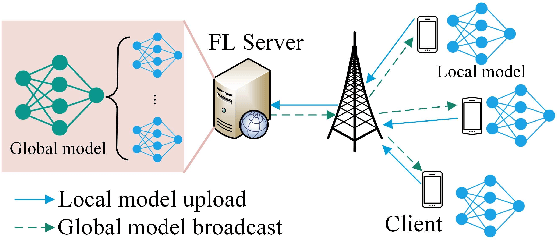

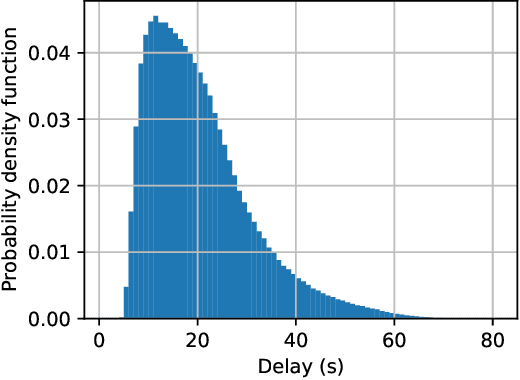

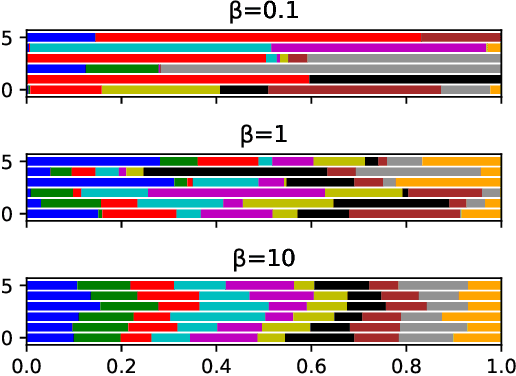

Federated learning (FL) is a collaborative machine learning framework that requires different clients (e.g., Internet of Things devices) to participate in the machine learning model training process by training and uploading their local models to an FL server in each global iteration. Upon receiving the local models from all the clients, the FL server generates a global model by aggregating the received local models. This traditional FL process may suffer from the straggler problem in heterogeneous client settings, where the FL server has to wait for slow clients to upload their local models in each global iteration, thus increasing the overall training time. One of the solutions is to set up a deadline and only the clients that can upload their local models before the deadline would be selected in the FL process. This solution may lead to a slow convergence rate and global model overfitting issues due to the limited client selection. In this paper, we propose the Latency awarE Semi-synchronous client Selection and mOdel aggregation for federated learNing (LESSON) method that allows all the clients to participate in the whole FL process but with different frequencies. That is, faster clients would be scheduled to upload their models more frequently than slow clients, thus resolving the straggler problem and accelerating the convergence speed, while avoiding model overfitting. Also, LESSON is capable of adjusting the tradeoff between the model accuracy and convergence rate by varying the deadline. Extensive simulations have been conducted to compare the performance of LESSON with the other two baseline methods, i.e., FedAvg and FedCS. The simulation results demonstrate that LESSON achieves faster convergence speed than FedAvg and FedCS, and higher model accuracy than FedCS.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge