Lane Detection with Versatile AtrousFormer and Local Semantic Guidance


Mar 08, 2022

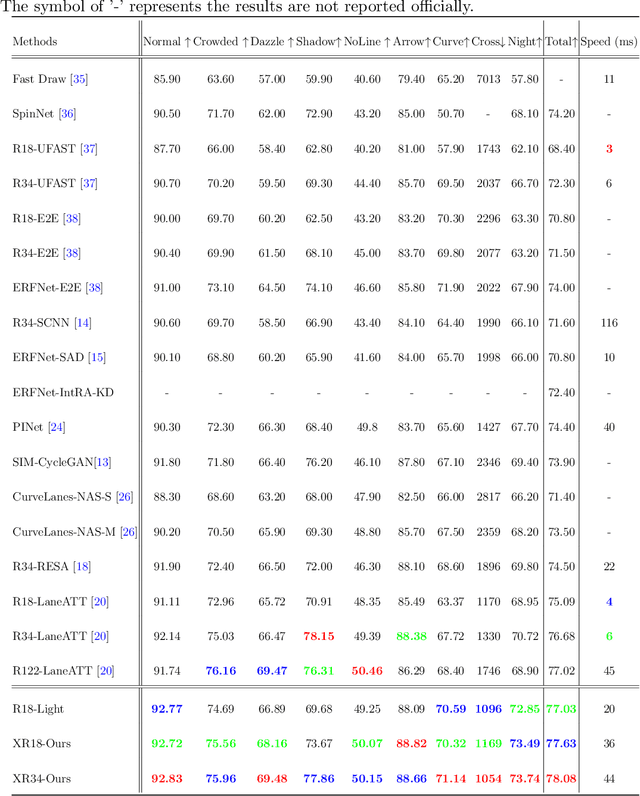

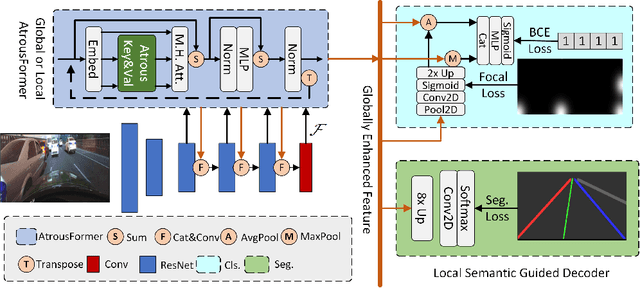

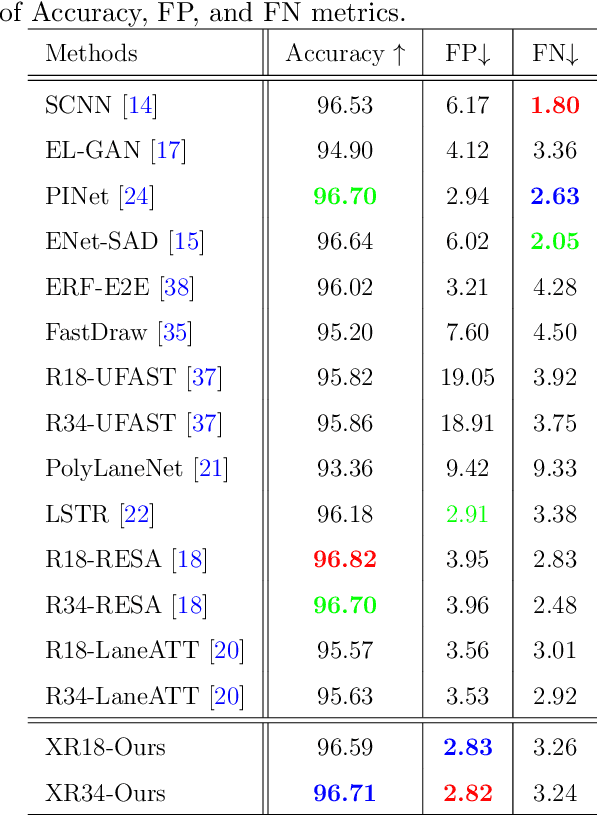

Lane detection is one of the core functions in autonomous driving and has attracted widespread attention recently. Networks that segment lane instances, especially lanes with poor visual appearance, must be able to exploit the distribution properties of lanes. Most existing methods rely on CNN-based techniques, and a few attempt to incorporate the recently popular seq2seq Transformer \cite{transformer}. However, their inherent drawbacks, namely weak global information gathering and exorbitant computational overhead, hinder wider application. In this work, we propose the Atrous Transformer (AtrousFormer) to solve this problem. Its variant, the local AtrousFormer, is interleaved into the feature extractor to enhance feature extraction. Both collect information first by rows and then by columns in a dedicated manner, which equips our network with a stronger information-gathering ability and better computational efficiency. To further improve performance, we also propose a local semantic guided decoder that delineates the identities and shapes of lanes more accurately, guided by a predicted Gaussian map of the starting point of each lane. Extensive results on three challenging benchmarks (CULane, TuSimple, and BDD100K) show that our network performs favorably against the state of the art.
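To make the idea of a Gaussian map of a lane's starting point concrete, the following is a minimal sketch of how such a map could be rendered for one point on a small grid. It is a generic heatmap construction, not the implementation from the paper. The function names `gaussian_start_map` and `peak_location`, the grid size, and the `sigma` value are all assumptions chosen for illustration.

```python
import math


def gaussian_start_map(height, width, start_row, start_col, sigma=2.0):
    """Build a height x width grid whose value peaks at 1.0 on the
    (start_row, start_col) cell and decays with squared distance.

    Hypothetical helper: sigma and the unnormalized Gaussian form are
    illustrative choices, not values taken from the paper.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    heatmap = []
    for r in range(height):
        row = []
        for c in range(width):
            sq_dist = (r - start_row) ** 2 + (c - start_col) ** 2
            row.append(math.exp(-sq_dist / (2.0 * sigma ** 2)))
        heatmap.append(row)
    return heatmap


def peak_location(heatmap):
    """Return the (row, col) of the strongest response in the grid."""
    best = max(
        ((value, r, c)
         for r, row in enumerate(heatmap)
         for c, value in enumerate(row)),
        key=lambda item: item[0],
    )
    return best[1], best[2]


# Example: a lane assumed to start on the bottom row of an 8 x 12 grid.
heatmap = gaussian_start_map(height=8, width=12, start_row=7, start_col=4)
print(peak_location(heatmap))
```

In this sketch the peak of the map marks where a lane is assumed to begin, and a decoder could read off that location to decide which lane instance it is tracing. How the actual network predicts and consumes the map is described only at a high level in the abstract.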
