Knowledge Transfer for Cross-Domain Reinforcement Learning: A Systematic Review

Paper and Code

Apr 26, 2024

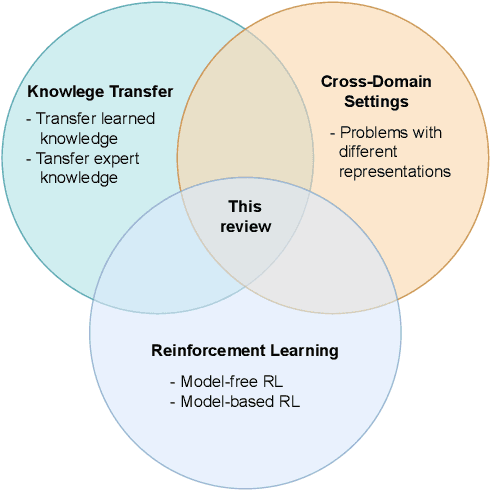

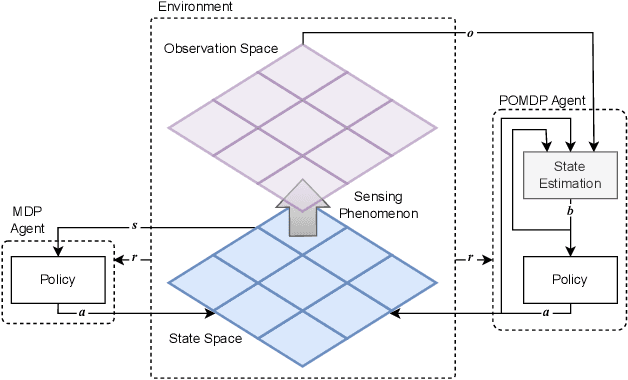

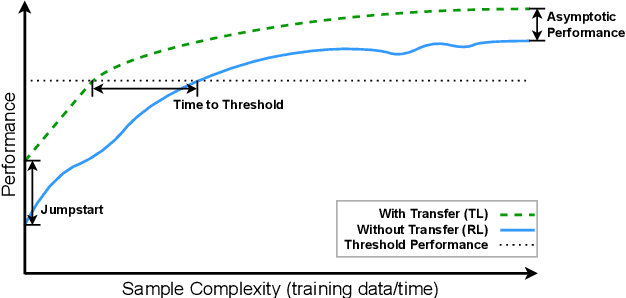

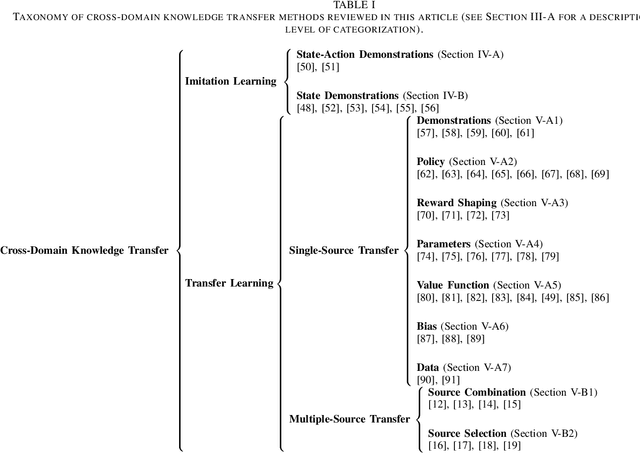

Reinforcement Learning (RL) provides a framework in which agents can be trained, via trial and error, to solve complex decision-making problems. Learning with little supervision causes RL methods to require large amounts of data, which renders them too expensive for many applications (e.g. robotics). By reusing knowledge from a different task, knowledge transfer methods present an alternative to reduce the training time in RL. Given how severe data scarcity can be, there has been a growing interest for methods capable of transferring knowledge across different domains (i.e. problems with different representation) due to the flexibility they offer. This review presents a unifying analysis of methods focused on transferring knowledge across different domains. Through a taxonomy based on a transfer-approach categorization, and a characterization of works based on their data-assumption requirements, the objectives of this article are to 1) provide a comprehensive and systematic revision of knowledge transfer methods for the cross-domain RL setting, 2) categorize and characterize these methods to provide an analysis based on relevant features such as their transfer approach and data requirements, and 3) discuss the main challenges regarding cross-domain knowledge transfer, as well as ideas of future directions worth exploring to address these problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge