Knowledge Distillation Applied to Optical Channel Equalization: Solving the Parallelization Problem of Recurrent Connection

Paper and Code

Dec 08, 2022

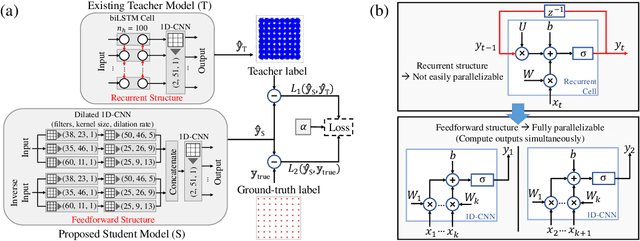

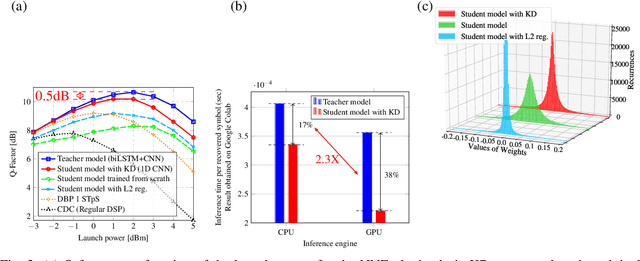

To circumvent the non-parallelizability of recurrent neural network-based equalizers, we propose knowledge distillation to recast the RNN into a parallelizable feedforward structure. The latter shows 38\% latency decrease, while impacting the Q-factor by only 0.5dB.

* Paper Accepted for Oral presentation - OFC 2023 (Optical Fiber

Communication Conference)

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge