Kinematically consistent recurrent neural networks for learning inverse problems in wave propagation

Oct 08, 2021

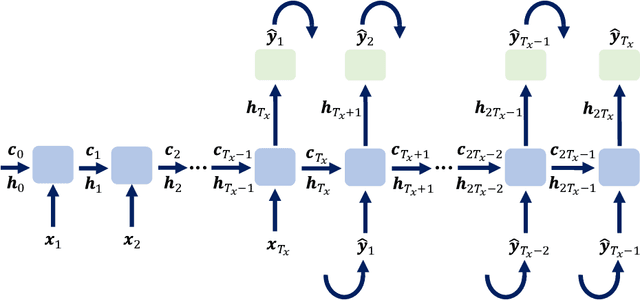

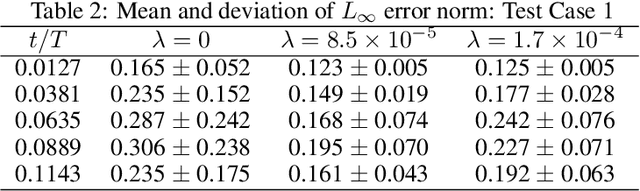

Although machine learning (ML) is increasingly employed for mechanistic problems, conventional ML architectures are black boxes that lack the physical knowledge needed to generalize to unforeseen input conditions. The consequences are severe overfitting when training data are scarce and inadequate physical interpretability, which motivates us to propose a new kinematically consistent, physics-based ML model. In particular, we aim to perform physically interpretable learning of inverse problems in wave propagation without the limitations imposed by overfitting. To this end, we employ long short-term memory (LSTM) networks endowed with a physical, hyperparameter-driven regularizer that enforces the characteristic geometries through a penalty. Since these characteristics are the kinematical invariances of wave propagation phenomena, maintaining their structure makes the network kinematically consistent. Even with modest training data, the kinematically consistent network can reduce the $L_1$ and $L_\infty$ error norms of the plain LSTM predictions by about 45% and 55%, respectively. It can also nearly double the forecasting horizon of the plain LSTM. To achieve this, an optimal range of the physical hyperparameter, which is analogous to an artificial bulk modulus, has been established through numerical experiments. The efficacy of the proposed method in alleviating overfitting and the physical interpretability of the learning mechanism are also discussed. Such an application of kinematically consistent LSTM networks to wave propagation learning is presented here for the first time.
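The abstract does not give the form of the regularizer, so the following is only a minimal sketch of what penalty-based enforcement of characteristics could look like, not the authors' formulation. It assumes a one-dimensional periodic grid and a wave that travels a whole number of cells per time step, so that the field is simply transported along the characteristics. The names `characteristic_penalty`, `regularized_loss`, `shift`, and `kappa` are hypothetical; `kappa` stands in for the physical hyperparameter that weights the penalty.

```python
import numpy as np

def characteristic_penalty(u, shift):
    """Mean squared violation of transport along characteristics.

    u     : array of shape (n_steps, n_points), predicted field on a periodic grid
    shift : assumed integer number of cells the wave travels per time step
    """
    # Move each snapshot along the assumed characteristics by one time step.
    transported = np.roll(u[:-1], shift, axis=1)
    # A kinematically consistent prediction matches the transported snapshot.
    return np.mean((u[1:] - transported) ** 2)

def regularized_loss(pred, target, shift, kappa):
    """Data misfit plus a kappa-weighted characteristic penalty (illustrative only)."""
    data_loss = np.mean((pred - target) ** 2)
    return data_loss + kappa * characteristic_penalty(pred, shift)
```

In this sketch a prediction that is an exact travelling wave incurs zero penalty, while any deviation from transport along the characteristics is penalized in proportion to `kappa`. In a training setup, `pred` would be the output of the recurrent network and the loss would be differentiated with an automatic differentiation framework rather than evaluated with NumPy.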
