Kinematic Morphing Networks for Manipulation Skill Transfer

Paper and Code

Mar 05, 2018

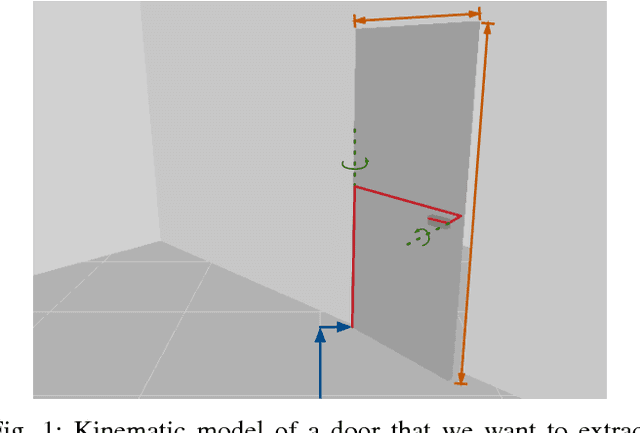

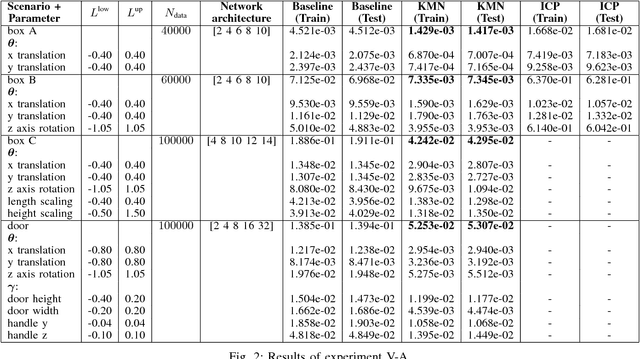

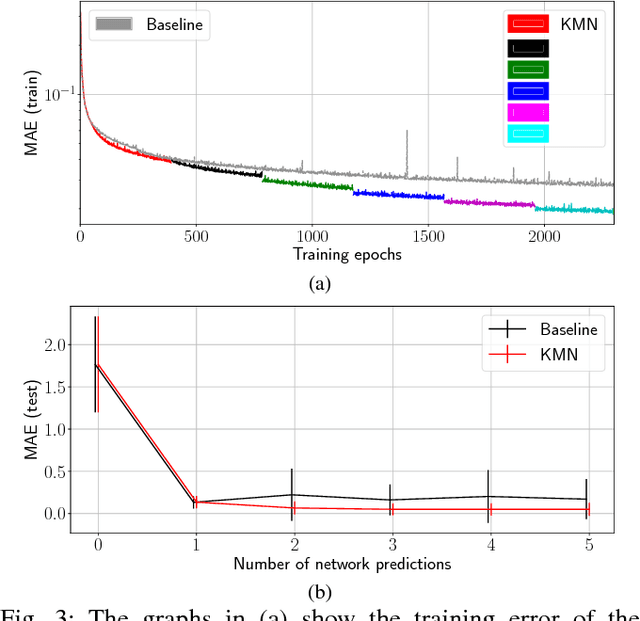

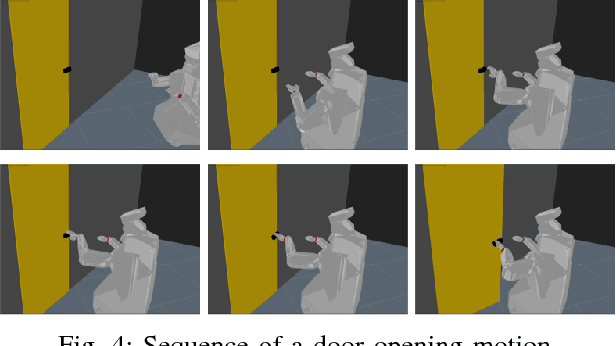

The transfer of a robot skill between different geometric environments is non-trivial since a wide variety of environments exists, sensor observations as well as robot motions are high-dimensional, and the environment might only be partially observed. We consider the problem of extracting a low-dimensional description of the manipulated environment in form of a kinematic model. This allows us to transfer a skill by defining a policy on a prototype model and morphing the observed environment to this prototype. A deep neural network is used to map depth image observations of the environment to morphing parameter, which include transformation and configuration parameters of the prototype model. Using the concatenation property of affine transformations and the ability to convert point clouds to depth images allows to apply the network in an iterative manner. The network is trained on data generated in a simulator and on augmented data that is created by using network predictions. The algorithm is evaluated on different tasks, where it is shown that iterative predictions lead to a higher accuracy than one-step predictions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge