Kernel Normalized Convolutional Networks for Privacy-Preserving Machine Learning

Paper and Code

Sep 30, 2022

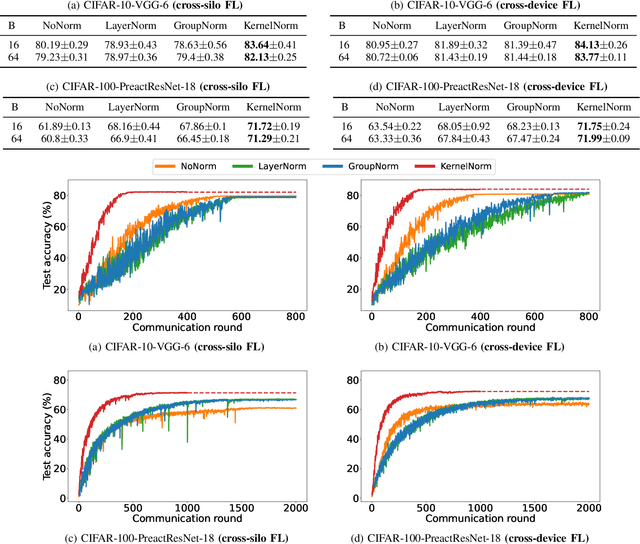

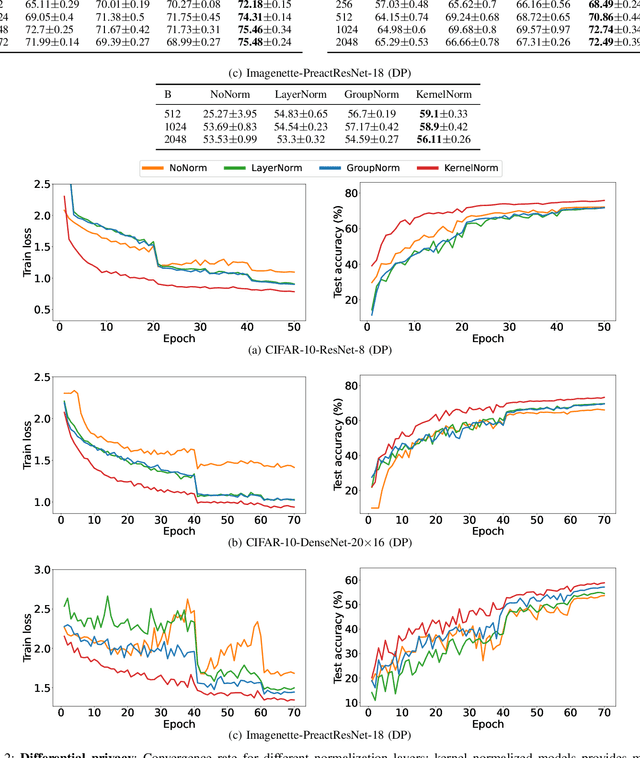

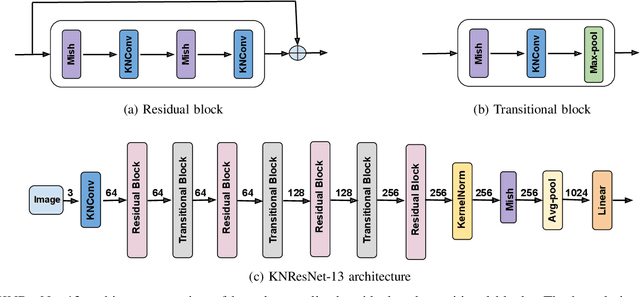

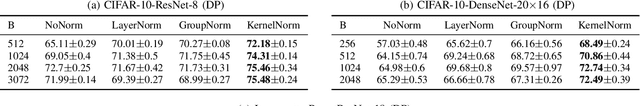

Normalization is an important but understudied challenge in privacy-related application domains such as federated learning (FL) and differential privacy (DP). While the unsuitability of batch normalization for FL and DP has already been shown, the impact of other normalization methods on the performance of federated or differentially private models is not well known. To address this, we compare the performance of layer normalization (LayerNorm), group normalization (GroupNorm), and the recently proposed kernel normalization (KernelNorm) in FL and DP settings. Our results indicate that LayerNorm and GroupNorm provide no performance gain over the baseline (i.e., no normalization) for shallow models, but they considerably enhance the performance of deeper models. KernelNorm, on the other hand, significantly outperforms its competitors in terms of accuracy and convergence rate (or communication efficiency) for both shallow and deeper models. Given these key observations, we propose a kernel normalized ResNet architecture called KNResNet-13 for differentially private learning environments. Using the proposed architecture, we provide new state-of-the-art accuracy values on the CIFAR-10 and Imagenette datasets.
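
As a rough illustration of the batch-independent methods compared above, the following pure-Python sketch computes GroupNorm for a single sample, with LayerNorm as its single-group special case. It is not taken from the paper: the function names `group_norm` and `layer_norm`, the flat per-channel list layout, and the `eps` default are assumptions made for illustration, learnable scale and shift parameters are omitted, and KernelNorm is not implemented here.

```python
import math


def group_norm(sample, num_groups, eps=1e-5):
    """Normalize one sample given as a list of channels (flat lists of floats).

    Channels are split into `num_groups` contiguous groups. Each group is
    shifted to zero mean and scaled to (approximately) unit variance using
    only this sample's own values, so no batch statistics are involved.
    Learnable affine parameters are omitted in this sketch.
    """
    num_channels = len(sample)
    if num_channels % num_groups != 0:
        raise ValueError("num_groups must divide the number of channels")
    size = num_channels // num_groups
    out = []
    for g in range(num_groups):
        group = sample[g * size:(g + 1) * size]
        # Pool every value in the group's channels to compute the statistics.
        values = [v for channel in group for v in channel]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in channel] for channel in group])
    return out


def layer_norm(sample, eps=1e-5):
    """LayerNorm expressed as GroupNorm with a single group over all channels."""
    return group_norm(sample, num_groups=1, eps=eps)


if __name__ == "__main__":
    # Hypothetical sample: 4 channels with 2 values each.
    x = [[1.0, 2.0], [3.0, 4.0], [10.0, 20.0], [30.0, 40.0]]
    print(group_norm(x, num_groups=2))
    print(layer_norm(x))
```

Because the statistics come from one sample at a time, the output for a given sample does not depend on which other samples share its batch, which is the property that distinguishes these methods from batch normalization in FL and DP settings.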
