Just Noticeable Difference for Machine Perception and Generation of Regularized Adversarial Images with Minimal Perturbation

Paper and Code

Feb 16, 2021

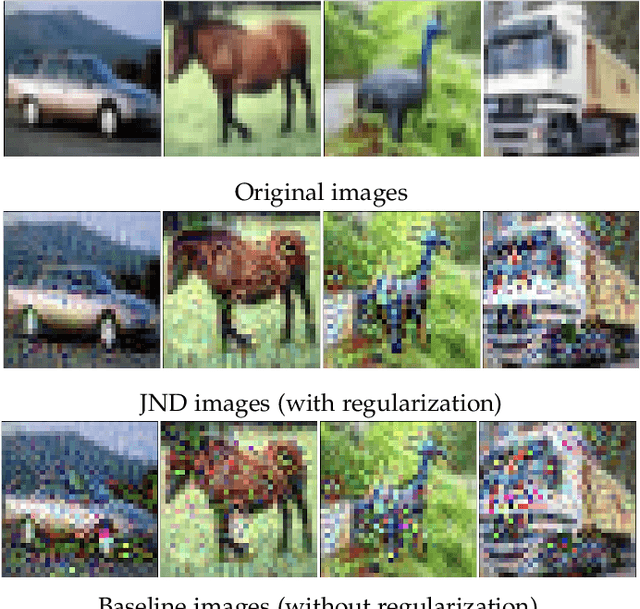

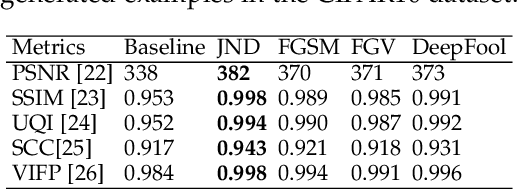

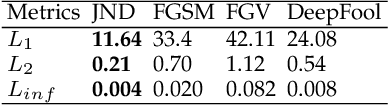

In this study, we introduce a measure for machine perception, inspired by the concept of Just Noticeable Difference (JND) of human perception. Based on this measure, we suggest an adversarial image generation algorithm, which iteratively distorts an image by an additive noise until the machine learning model detects the change in the image by outputting a false label. The amount of noise added to the original image is defined as the gradient of the cost function of the machine learning model. This cost function explicitly minimizes the amount of perturbation applied on the input image and it is regularized by bounded range and total variation functions to assure perceptual similarity of the adversarial image to the input. We evaluate the adversarial images generated by our algorithm both qualitatively and quantitatively on CIFAR10, ImageNet, and MS COCO datasets. Our experiments on image classification and object detection tasks show that adversarial images generated by our method are both more successful in deceiving the recognition/detection model and less perturbed compared to the images generated by the state-of-the-art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge