Just a Momentum: Analytical Study of Momentum-Based Acceleration Methods in Paradigmatic High-Dimensional Non-Convex Problems

Paper and Code

Mar 11, 2021

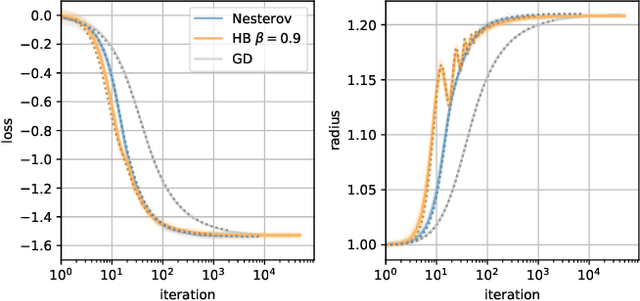

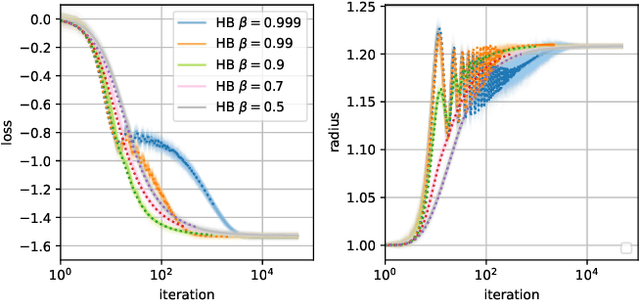

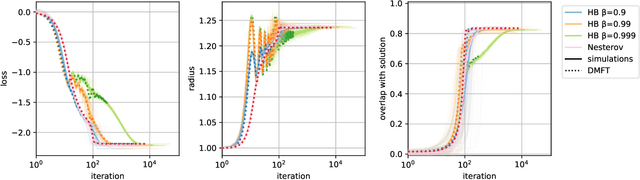

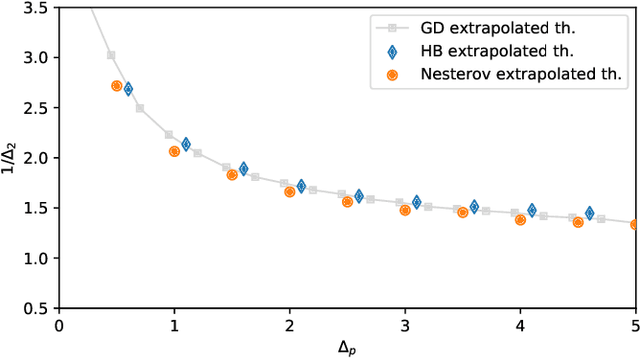

When optimizing over loss functions, it is common practice to use momentum-based accelerated methods rather than vanilla gradient-based methods. Despite being widely applied to arbitrary loss functions, their behaviour in generically non-convex, high-dimensional landscapes is poorly understood. In this work, we use dynamical mean field theory techniques to describe analytically the average behaviour of these methods in a prototypical non-convex model: the (spiked) matrix-tensor model. We derive a closed set of equations that describe the behaviour of several algorithms, including heavy-ball momentum and Nesterov acceleration. Additionally, we characterize the evolution of a mathematically equivalent physical system of massive particles relaxing toward the bottom of an energy landscape. Under the correct mapping the two dynamics are equivalent, and we observe that a large mass increases the effective time step of the heavy-ball dynamics, leading to a speed-up.
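For readers unfamiliar with the update rules named in the abstract, the following is a minimal sketch of vanilla gradient descent, heavy-ball momentum, and Nesterov acceleration in their common textbook forms. It is not the paper's code: the toy loss (an ill-conditioned quadratic rather than the matrix-tensor model), the names `CURVATURES`, `grad`, `gradient_descent`, `heavy_ball`, `nesterov`, and the values of `lr` and `beta` are all assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical toy loss f(x) = 0.5 * sum(c_i * x_i^2); a stand-in for
# illustration only, NOT the (spiked) matrix-tensor model of the paper.
CURVATURES = np.array([1.0, 100.0])

def grad(x):
    """Gradient of the toy quadratic loss."""
    return CURVATURES * x

def gradient_descent(x0, lr, steps):
    """Vanilla gradient descent: x <- x - lr * grad(x)."""
    x = x0.copy()
    for _ in range(steps):
        x = x - lr * grad(x)
    return x

def heavy_ball(x0, lr, beta, steps):
    """Heavy-ball momentum: the velocity accumulates past gradients."""
    x = x0.copy()
    v = np.zeros_like(x)
    for _ in range(steps):
        v = beta * v - lr * grad(x)
        x = x + v
    return x

def nesterov(x0, lr, beta, steps):
    """Nesterov acceleration: the gradient is taken at the look-ahead point."""
    x = x0.copy()
    v = np.zeros_like(x)
    for _ in range(steps):
        v = beta * v - lr * grad(x + beta * v)
        x = x + v
    return x

if __name__ == "__main__":
    x0 = np.array([1.0, 1.0])
    lr, beta, steps = 0.009, 0.9, 200  # illustrative values, not from the paper
    for name, x in [
        ("gradient descent", gradient_descent(x0, lr, steps)),
        ("heavy ball", heavy_ball(x0, lr, beta, steps)),
        ("nesterov", nesterov(x0, lr, beta, steps)),
    ]:
        print(f"{name}: distance to minimum = {np.linalg.norm(x):.3e}")
```

On this convex toy problem both momentum variants end up closer to the minimum than plain gradient descent for the same step size and number of steps. This only illustrates the update rules; it says nothing about the non-convex, high-dimensional regime the paper analyses.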
