Joint Normality Test Via Two-Dimensional Projection


Oct 08, 2021

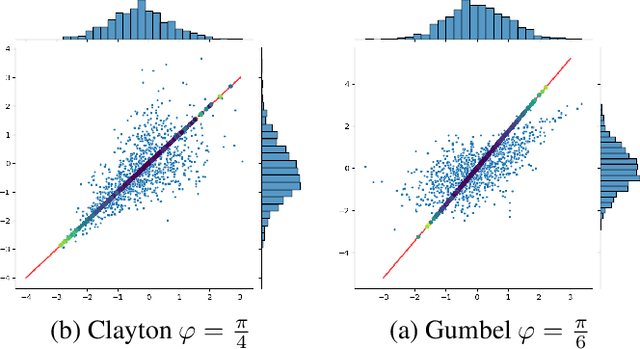

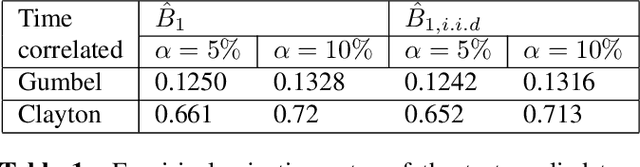

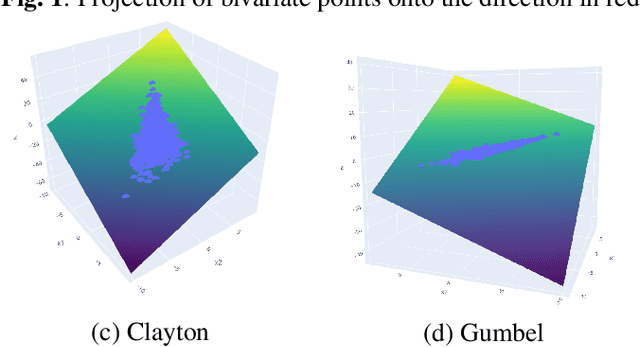

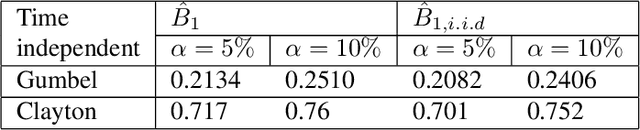

Extensive literature exists on testing for normality, especially for independent and identically distributed (i.i.d.) processes. The case of dependent samples has also been addressed, but only for scalar random processes. For this reason, we have proposed a joint normality test for multivariate time series that extends Mardia's kurtosis test. Continuing this work, we present an original performance study of this test applied to two-dimensional projections. Leveraging copulas, we conduct a comparative study of the bivariate tests and their scalar counterparts. This simulation study reveals that one-dimensional random projections lead to notably less powerful tests than two-dimensional ones.
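To make the projection idea concrete, here is a minimal pure-Python sketch that compares a 1-D and a 2-D random projection of the same multivariate sample using Mardia's kurtosis statistic. It rests on several assumptions that are not from the paper. It uses the *classical* i.i.d. Mardia kurtosis, whose asymptotic law is N(d(d+2), 8d(d+2)/n); the authors' extension to dependent (time-series) samples changes that variance and is not reproduced here. The Clayton copula with standard normal marginals is one arbitrary choice of non-Gaussian joint law with Gaussian marginals, since the abstract does not say which copulas were used. The helpers `mardia_kurtosis_test`, `random_projection` and `clayton_normal_pairs` are hypothetical names introduced for illustration.

```python
import math
import random
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def mardia_kurtosis_test(samples):
    """Classical (i.i.d.) Mardia kurtosis test, restricted to d = 1 or d = 2.

    Returns (b2, z, p_value). No correction for serial dependence is applied.
    """
    n, d = len(samples), len(samples[0])
    if d not in (1, 2):
        raise ValueError("this sketch only handles d = 1 or d = 2")
    mean = [sum(x[j] for x in samples) / n for j in range(d)]
    centred = [[x[j] - mean[j] for j in range(d)] for x in samples]
    # Biased (divide by n) sample covariance, as in Mardia's definition.
    cov = [[sum(c[a] * c[b] for c in centred) / n for b in range(d)]
           for a in range(d)]
    if d == 1:
        inv = [[1.0 / cov[0][0]]]
    else:
        det = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
        inv = [[cov[1][1] / det, -cov[0][1] / det],
               [-cov[1][0] / det, cov[0][0] / det]]
    # r_i = c_i^T S^{-1} c_i is the squared Mahalanobis distance; b2 = mean(r_i^2).
    b2 = 0.0
    for c in centred:
        r = sum(c[a] * inv[a][b] * c[b] for a in range(d) for b in range(d))
        b2 += r * r
    b2 /= n
    # Standardise against the i.i.d. Gaussian asymptotic mean and variance.
    z = (b2 - d * (d + 2)) / math.sqrt(8 * d * (d + 2) / n)
    p_value = 2.0 * (1.0 - _STD_NORMAL.cdf(abs(z)))
    return b2, z, p_value


def random_projection(samples, k, rng):
    """Project p-dimensional samples onto k random orthonormal directions."""
    p = len(samples[0])
    if k > p:
        raise ValueError("k must not exceed the data dimension")
    basis = []
    while len(basis) < k:
        v = [rng.gauss(0.0, 1.0) for _ in range(p)]
        # Gram-Schmidt: remove components along the directions already chosen.
        for u in basis:
            dot = sum(a * b for a, b in zip(v, u))
            v = [a - dot * b for a, b in zip(v, u)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-12:
            basis.append([a / norm for a in v])
    return [[sum(a * b for a, b in zip(x, u)) for u in basis] for x in samples]


def clayton_normal_pairs(n, theta, rng):
    """Bivariate samples with N(0, 1) marginals joined by a Clayton copula.

    Marshall-Olkin sampling: V ~ Gamma(1/theta, 1), E_j ~ Exp(1),
    U_j = (1 + E_j / V) ** (-1/theta), then X_j = Phi^{-1}(U_j).
    """
    out = []
    for _ in range(n):
        v = max(rng.gammavariate(1.0 / theta, 1.0), 1e-300)
        pair = []
        for _ in range(2):
            u = (1.0 + rng.expovariate(1.0) / v) ** (-1.0 / theta)
            u = min(max(u, 1e-12), 1.0 - 1e-12)  # keep inv_cdf finite
            pair.append(_STD_NORMAL.inv_cdf(u))
        out.append(pair)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical 4-D setup: two independent Clayton-normal pairs side by side.
    data = [a + b for a, b in zip(clayton_normal_pairs(2000, 2.0, rng),
                                  clayton_normal_pairs(2000, 2.0, rng))]
    for k in (1, 2):
        b2, z, p = mardia_kurtosis_test(random_projection(data, k, rng))
        print(f"k={k}: b2={b2:.3f}, z={z:.2f}, p={p:.3g}")
```

Every coordinate of the simulated data is exactly N(0, 1), so any departure from normality lives only in the dependence structure; that is the kind of alternative a copula-based power study targets. A single run like this says nothing about power. A power comparison would repeat the test over many simulated samples and random projections and record the rejection rate at a fixed level, and for time series it would also need the dependence-adjusted variance of the statistic.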
