Joint Flow: Temporal Flow Fields for Multi Person Tracking


Jul 20, 2018

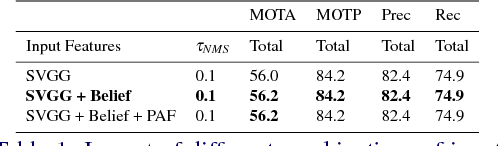

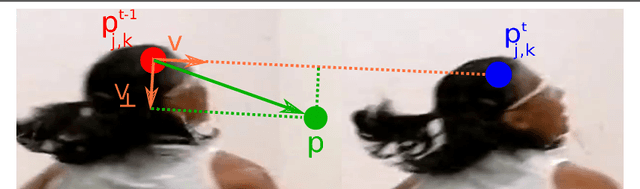

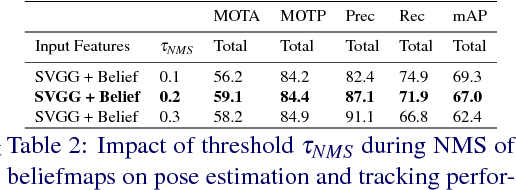

In this work we propose an online multi-person pose tracking approach that operates on two consecutive frames $I_{t-1}$ and $I_t$. The general formulation of our temporal network allows any multi-person pose estimation approach to serve as the spatial network. From the spatial network we extract image features and pose features for both frames. These features serve as input to our temporal model, which predicts Temporal Flow Fields (TFF). TFF are vector fields that indicate the direction in which each body joint is going to move from frame $I_{t-1}$ to frame $I_t$. This novel representation allows us to formulate a similarity measure between detected joints. These similarities are used as binary potentials in a bipartite graph optimization problem in order to track multiple poses. We show that TFF can be learned by a relatively small CNN while achieving state-of-the-art multi-person pose tracking results.
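To make the matching step concrete, here is a minimal sketch of how flow-based joint similarities could drive a bipartite assignment between poses in two frames. The abstract does not give the exact similarity measure, so the one used here (the average alignment between the flow field and the straight line connecting two joint detections) is an assumption, as are all names (`joint_similarity`, `pose_similarity`, `match_poses`) and the toy flow field. A brute-force search over assignments stands in for a proper bipartite solver and is only practical for a handful of people.

```python
import math
from itertools import permutations


def joint_similarity(flow, p, q, samples=10):
    """Average alignment of a flow field with the direction p -> q.

    `flow` is a hypothetical callable (x, y) -> (u, v) standing in for a
    predicted Temporal Flow Field of one joint type (an assumption).
    """
    dx, dy = q[0] - p[0], q[1] - p[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return 0.0  # no displacement: this sketch returns a neutral score
    ux, uy = dx / norm, dy / norm
    total = 0.0
    for i in range(samples):
        s = i / (samples - 1)  # sample evenly along the segment p -> q
        fx, fy = flow(p[0] + s * dx, p[1] + s * dy)
        total += fx * ux + fy * uy
    return total / samples


def pose_similarity(flows, pose_prev, pose_curr):
    """Sum joint similarities over the joints detected in both poses."""
    shared = [j for j in pose_prev if j in pose_curr]
    return sum(joint_similarity(flows[j], pose_prev[j], pose_curr[j]) for j in shared)


def match_poses(flows, poses_prev, poses_curr):
    """Return (prev_index, curr_index) pairs maximizing total similarity.

    Brute force over all assignments; a stand-in for a bipartite solver.
    """
    n_prev, n_curr = len(poses_prev), len(poses_curr)
    sim = [[pose_similarity(flows, a, b) for b in poses_curr] for a in poses_prev]
    if n_prev <= n_curr:
        candidates = (list(zip(range(n_prev), perm))
                      for perm in permutations(range(n_curr), n_prev))
    else:
        candidates = (list(zip(perm, range(n_curr)))
                      for perm in permutations(range(n_prev), n_curr))
    return max(candidates, key=lambda pairs: sum(sim[i][j] for i, j in pairs))


# Toy example: every joint is predicted to move to the right.
def rightward(x, y):
    return (1.0, 0.0)


flows = {"nose": rightward, "neck": rightward}
poses_prev = [
    {"nose": (0.0, 0.0), "neck": (0.0, 2.0)},    # person A in frame t-1
    {"nose": (0.0, 10.0), "neck": (0.0, 12.0)},  # person B in frame t-1
]
poses_curr = [
    {"nose": (5.0, 10.0), "neck": (5.0, 12.0)},  # person B in frame t
    {"nose": (5.0, 0.0), "neck": (5.0, 2.0)},    # person A in frame t
]
matches = match_poses(flows, poses_prev, poses_curr)
```

In this toy setup the detections in frame $I_t$ are listed in swapped order, and the assignment links each person in $I_{t-1}$ to the detection that lies along the predicted flow direction. For larger numbers of people, a polynomial-time assignment solver would be the natural replacement for the brute-force search.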
