Job Scheduling on Data Centers with Deep Reinforcement Learning


Sep 16, 2019

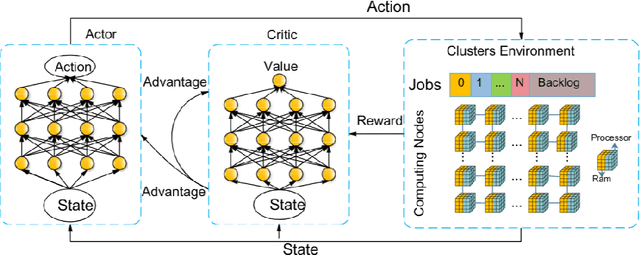

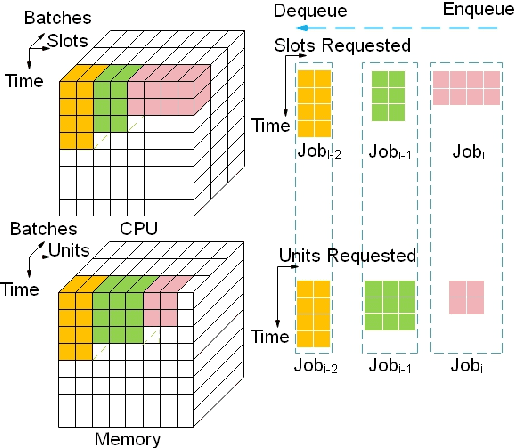

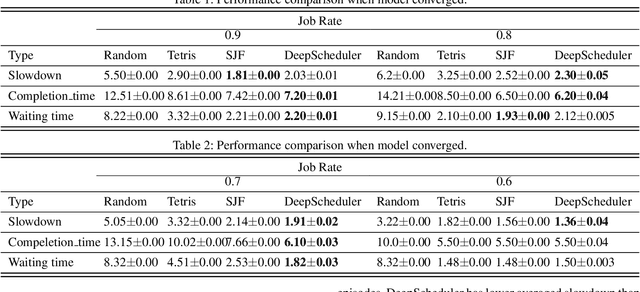

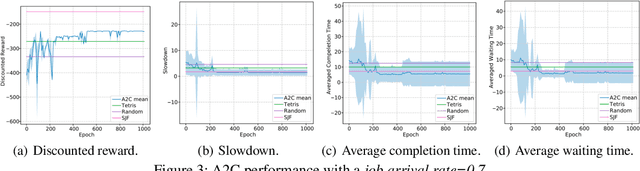

Efficient job scheduling in data centers under heterogeneous complexity is crucial but challenging, since it involves allocating multi-dimensional resources over both time and space. To adapt to the complex computing environment of data centers, we propose DeepScheduler, a job-scheduling approach based on Advantage Actor-Critic (A2C) deep reinforcement learning. DeepScheduler consists of two agents: the actor, which learns the scheduling policy automatically, and the critic, which reduces the estimation error. Unlike previous policy gradient approaches, DeepScheduler is designed to reduce the variance of the gradient estimates and to update its parameters efficiently. Using both simulated workloads and real data collected from an academic data center, we show that DeepScheduler achieves competitive scheduling performance.
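To make the actor/critic split concrete, here is a minimal sketch of a one-step advantage actor-critic update for choosing which queued job to run next. It is not the authors' implementation. It assumes a linear softmax actor and a linear critic in place of DeepScheduler's deep networks, a hypothetical job-feature encoding (normalized job length), and a hypothetical reward (the negative length of the scheduled job). The names `ToyA2C`, `act`, and `update` are illustrative only. The critic's estimate V(s) serves as a baseline: the actor is updated with the advantage r + γV(s') − V(s) rather than the raw reward. This is the standard way A2C lowers the variance of policy-gradient estimates.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(prefs):
    # Subtract the max preference for numerical stability.
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyA2C:
    """Hypothetical linear advantage actor-critic for picking one queued job.

    Actor: softmax over a linear score of each job's feature vector.
    Critic: linear state-value estimate V(s), used as a baseline.
    """

    def __init__(self, n_job_features, n_state_features,
                 actor_lr=0.1, critic_lr=0.1, gamma=0.99):
        self.theta = [0.0] * n_job_features   # actor weights
        self.w = [0.0] * n_state_features     # critic weights
        self.actor_lr = actor_lr
        self.critic_lr = critic_lr
        self.gamma = gamma

    def policy(self, jobs):
        return softmax([dot(self.theta, job) for job in jobs])

    def value(self, state):
        return dot(self.w, state)

    def act(self, jobs, rng):
        # Sample a job index from the current policy.
        return rng.choices(range(len(jobs)), weights=self.policy(jobs))[0]

    def update(self, jobs, action, reward, state, next_state, done):
        # One-step advantage estimate (the TD error): r + gamma * V(s') - V(s).
        v_next = 0.0 if done else self.value(next_state)
        advantage = reward + self.gamma * v_next - self.value(state)

        # Actor: for a linear softmax policy, grad log pi(a|s) is
        # x_a - sum_b pi(b|s) x_b; scale it by the advantage.
        probs = self.policy(jobs)
        for i in range(len(self.theta)):
            expected = sum(p * job[i] for p, job in zip(probs, jobs))
            self.theta[i] += self.actor_lr * advantage * (jobs[action][i] - expected)

        # Critic: semi-gradient TD(0) step toward the bootstrapped target.
        for i in range(len(self.w)):
            self.w[i] += self.critic_lr * advantage * state[i]
        return advantage


# Toy one-step episodes: two queued jobs with hypothetical lengths 1 and 5.
# The reward is the negative length of the job scheduled, so the agent
# should learn to favour the short job.
rng = random.Random(0)
agent = ToyA2C(n_job_features=1, n_state_features=1)
lengths = [1.0, 5.0]
jobs = [[length / 10.0] for length in lengths]
state = [1.0]  # constant bias feature for the critic
for _ in range(2000):
    a = agent.act(jobs, rng)
    agent.update(jobs, a, -lengths[a], state, state, done=True)
print(agent.policy(jobs))
```

Because the baseline V(s) does not depend on the chosen action, subtracting it leaves the expected policy gradient unchanged while shrinking its variance. In DeepScheduler, the state would instead encode multi-dimensional resource usage and the job queue, and both functions would be neural networks.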
