Iterative Matching Point

Paper and Code

Oct 23, 2019

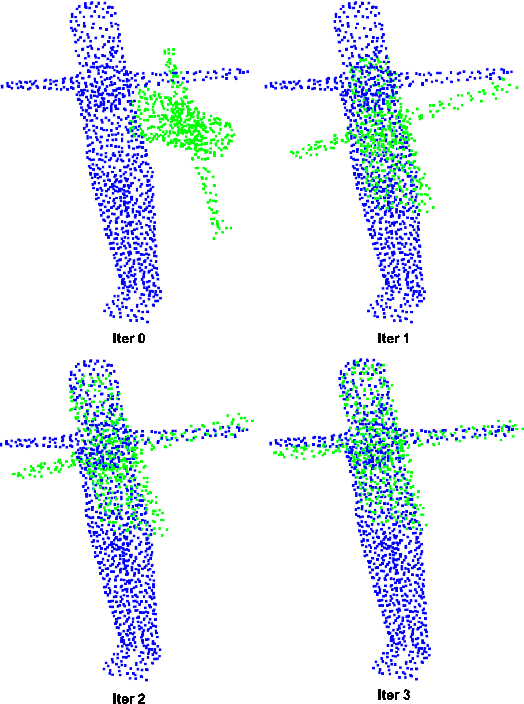

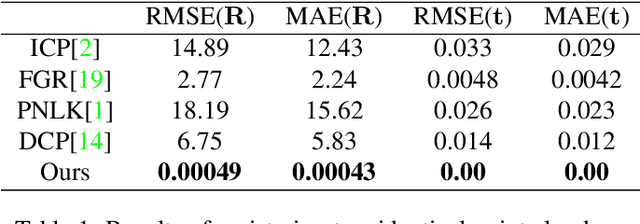

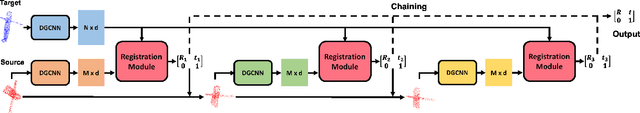

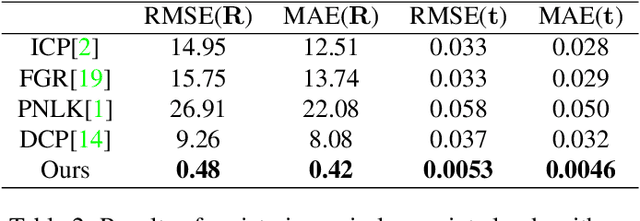

In this paper, we propose a neural-network-based point cloud registration method named Iterative Matching Point (IMP). Our model iteratively matches the features of points from two point clouds and solves for the rigid-body motion by minimizing the distance between matched points. The idea is similar to Iterative Closest Point (ICP), but our model determines correspondences by comparing geometric features instead of simply finding the closest point. As a result, it does not suffer from ICP's local-minimum problem and can handle point clouds with large rotation angles. Furthermore, the robustness of the feature extraction network allows IMP to register partial and noisy point clouds. Experiments on the ModelNet40 dataset show that our method outperforms existing point cloud registration methods by a large margin, especially when the initial rotation angle is large. It also generalizes to real-world 2.5D data without being trained on such data.
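The match-then-solve loop described above can be illustrated with a small NumPy sketch. This is not the IMP implementation. The learned feature network is replaced by a hypothetical stand-in, `local_descriptors`, which describes each point by its sorted distances to its k nearest neighbours. Correspondences are found by nearest-neighbour search in feature space rather than in 3D space. The rigid motion is then solved in closed form with the SVD-based Kabsch method, which minimizes the sum of squared distances between each transformed source point and its matched target point. The function names, `k`, and the iteration count are illustrative assumptions.

```python
import numpy as np


def local_descriptors(points, k=8):
    """Hypothetical stand-in for a learned feature network (not IMP's).

    Each point is described by its sorted distances to its k nearest
    neighbours, a feature that is invariant to rotation and translation.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    dist.sort(axis=1)
    return dist[:, 1:k + 1]  # column 0 is the zero self-distance


def match_features(f_src, f_dst):
    """For each source point, index of the target point with the closest feature."""
    d = np.linalg.norm(f_src[:, None, :] - f_dst[None, :, :], axis=-1)
    return d.argmin(axis=1)


def best_rigid_transform(src, dst):
    """Kabsch/SVD: R, t minimizing sum_i ||R @ src_i + t - dst_i||^2."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def register(src, dst, feature_fn=local_descriptors, iters=5):
    """Iteratively match features, solve for the rigid motion, and accumulate it."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    f_dst = feature_fn(dst)
    for _ in range(iters):
        idx = match_features(feature_fn(current), f_dst)
        R, t = best_rigid_transform(current, dst[idx])
        current = current @ R.T + t
        # Compose: x -> R (R_total x + t_total) + t
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total


def rotation_z(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Demo: a 150-degree rotation plus a shuffled point order, a case where
# closest-point matching in 3D space would pair the wrong points.
rng = np.random.default_rng(0)
src = rng.uniform(-1.0, 1.0, size=(64, 3))
R_true = rotation_z(np.deg2rad(150.0))
t_true = np.array([0.3, -0.2, 0.5])
dst = (src @ R_true.T + t_true)[rng.permutation(64)]

R_est, t_est = register(src, dst)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(t_est, t_true, atol=1e-6))
```

Because these toy descriptors are exactly rotation-invariant and the data is noise-free, the first pass already finds the correct correspondences. The loop is kept only to mirror the iterative structure described in the abstract. With learned, noisy features, later iterations are where the estimate would be refined.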