Is Face Recognition Safe from Realizable Attacks?


Oct 15, 2022

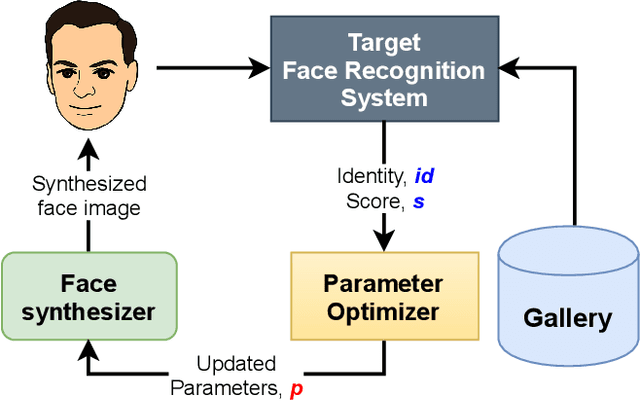

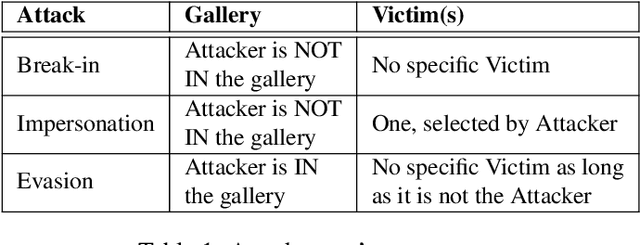

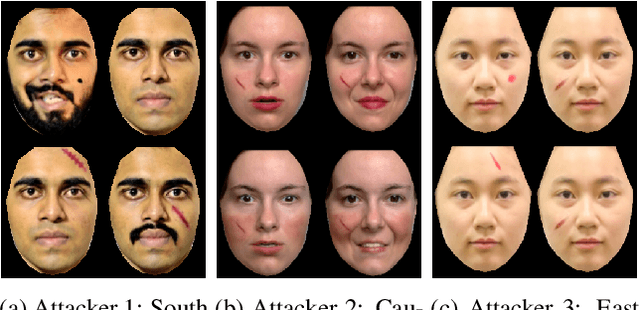

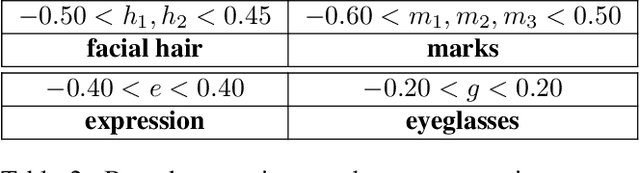

Face recognition is a popular form of biometric authentication, and as its use has spread, attacks on it have become more common. Recent studies show that face recognition systems are vulnerable to attacks that cause them to misidentify faces. However, most of these attacks are white-box, or they manipulate facial images in ways that are not physically realizable. In this paper, we propose an attack scheme in which the attacker generates realistic synthesized face images with subtle perturbations and physically realizes those perturbations on their own face to attack black-box face recognition systems. Comprehensive experiments and analyses show that subtle perturbations realized on the attacker's face can produce successful attacks on state-of-the-art face recognition systems in black-box settings. Our study exposes the vulnerability of face recognition systems to realizable black-box attacks.
