Irregularly Tabulated MLP for Fast Point Feature Embedding

Paper and Code

Nov 13, 2020

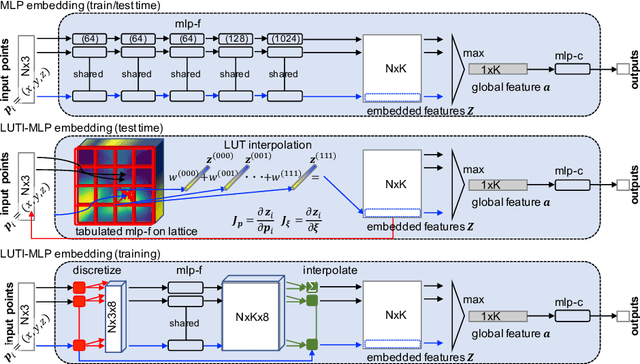

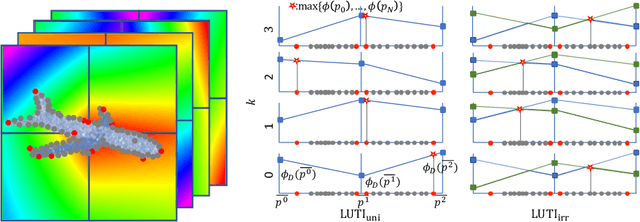

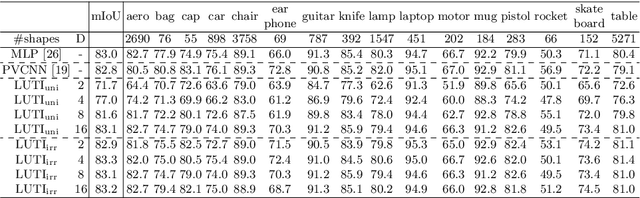

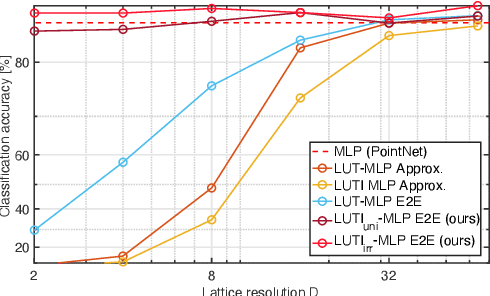

Aiming at a drastic speedup of point-feature embedding at test time, we propose a new framework that uses a pair of multi-layer perceptrons (MLPs) and a lookup table (LUT) to transform point-coordinate inputs into high-dimensional features. PointNet's feature-embedding part is realized by an MLP that requires millions of dot products. In contrast, the proposed framework requires no such layers of matrix-vector products at test time: it only looks up the nearest entries of the tabulated MLP and interpolates between them. The table is defined over discrete inputs on a 3D lattice whose points are, in effect, arranged irregularly. We call this framework LUTI-MLP (LUT Interpolation MLP). It provides a way to train, end to end, an irregularly tabulated MLP coupled to a LUT in a specific manner, without the need for any approximation at test time. LUTI-MLP also significantly speeds up the test-time computation of the Jacobian of the embedding function with respect to the global pose coordinates on the Lie algebra $\mathfrak{se}(3)$, which could be used for point-set registration problems. After extensive evaluation on ModelNet40, we confirmed that LUTI-MLP, even with a small (e.g., $4^3$) lattice, yields performance comparable to that of the MLP while achieving significant speedups: $100\times$ for the embedding, $12\times$ for the approximate Jacobian, and $860\times$ for the canonical Jacobian.
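To make the test-time step concrete, the following is a minimal sketch of "look up the nearest entries, then interpolate" using trilinear interpolation. It is not the authors' implementation. It assumes a uniform lattice on $[0, 1]^3$ rather than the irregular arrangement described above, and it fills the table with a hypothetical stand-in function `toy_embed` instead of a trained MLP. The names `build_lut` and `lookup` are likewise illustrative.

```python
from itertools import product


def build_lut(embed, d):
    """Tabulate `embed` at the d**3 vertices of a uniform lattice on [0, 1]^3.

    Assumption: a uniform lattice is used here for simplicity; the paper's
    lattice is effectively irregular.
    """
    step = 1.0 / (d - 1)
    return {
        (i, j, k): embed((i * step, j * step, k * step))
        for i, j, k in product(range(d), repeat=3)
    }


def lookup(lut, d, point):
    """Trilinearly interpolate the 8 lattice entries surrounding `point`."""
    base, frac = [], []
    for c in point:
        s = min(max(c, 0.0), 1.0) * (d - 1)  # clamp, then scale to lattice units
        b = min(int(s), d - 2)               # lower corner index of the enclosing cell
        base.append(b)
        frac.append(s - b)                   # fractional position inside the cell

    dim = len(next(iter(lut.values())))
    out = [0.0] * dim
    for corner in product((0, 1), repeat=3):
        # Weight of this corner is the product of per-axis linear weights.
        w = 1.0
        for offset, f in zip(corner, frac):
            w *= f if offset else 1.0 - f
        entry = lut[tuple(b + o for b, o in zip(base, corner))]
        for n in range(dim):
            out[n] += w * entry[n]
    return out


def toy_embed(p):
    """Hypothetical stand-in for a trained MLP (2-D output for brevity)."""
    x, y, z = p
    return [x + 2.0 * y - z, 0.5 * x * y * z]


# Usage: a 4^3 lattice, matching the small lattice size quoted in the abstract.
lut = build_lut(toy_embed, 4)
feature = lookup(lut, 4, (0.3, 0.75, 0.1))
```

At test time only `lookup` runs, so the per-point cost is eight table reads and a weighted sum per feature dimension, independent of the depth or width of the network that produced the table. The stand-in function was chosen to be linear in each coordinate, so trilinear interpolation reproduces it exactly; a real MLP would generally not have this property.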
