IrisNet: Deep Learning for Automatic and Real-time Tongue Contour Tracking in Ultrasound Video Data using Peripheral Vision

Nov 10, 2019

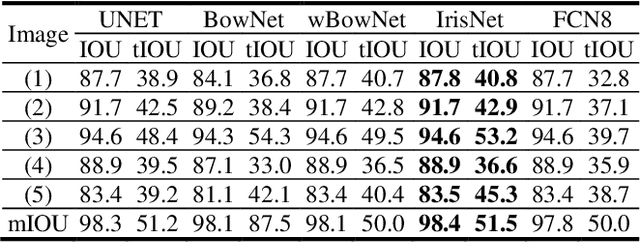

The progress of deep convolutional neural networks has been successfully exploited in various real-time computer vision tasks such as image classification and segmentation. Owing to the development of computational units, the availability of digital datasets, and the improved performance of deep learning models, fully automatic and accurate tracking of tongue contours in real-time ultrasound data has become practical only in recent years. Recent studies have shown that deep learning techniques perform well at tracking ultrasound tongue contours in real-time applications such as pronunciation training using multimodal ultrasound-enhanced approaches. Because ultrasound tongue datasets are highly correlated, it is feasible to build a general model that performs automatic tongue tracking for almost all datasets. In this paper, we propose a deep learning model comprising a convolutional module that mimics the peripheral vision ability of the human eye to handle real-time, accurate, and fully automatic tongue contour tracking, applicable to almost all primary ultrasound tongue datasets. Qualitative and quantitative assessment of IrisNet on different ultrasound tongue datasets and PASCAL VOC2012 revealed outstanding generalization compared with similar techniques.
