Intrinsically Motivated Acquisition of Modular Slow Features for Humanoids in Continuous and Non-Stationary Environments


Jan 17, 2017

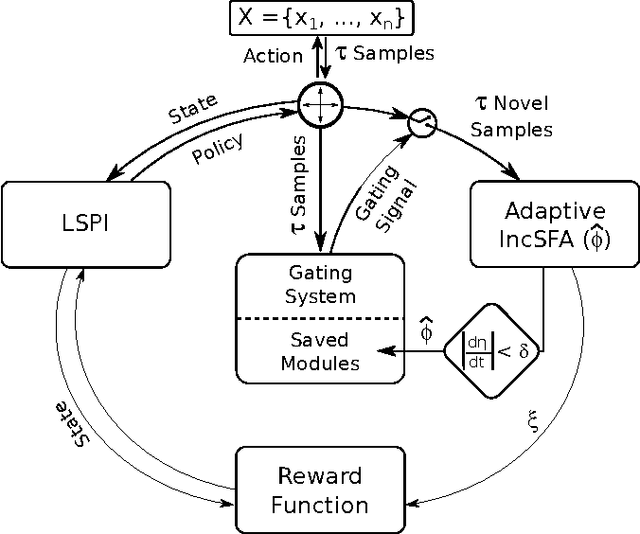

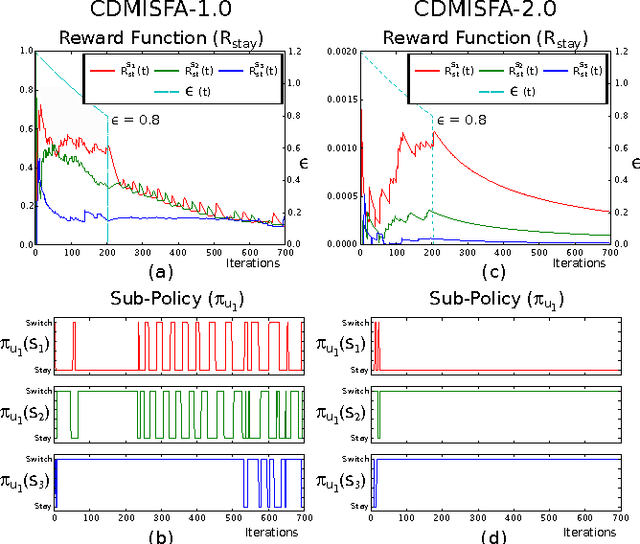

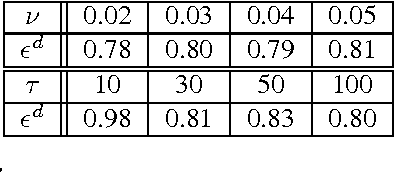

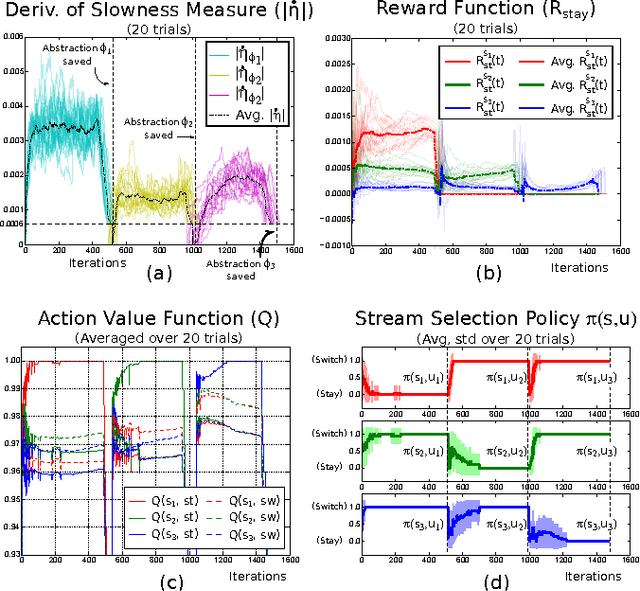

A compact, information-rich representation of the environment, also called a feature abstraction, can simplify a robot's task of mapping its raw sensory inputs to useful action sequences. However, in environments that are non-stationary and only partially observable, a single abstraction is unlikely to be sufficient to encode most variations. Learning multiple sets of spatially or temporally local, modular abstractions of the inputs would therefore be beneficial. How can a robot learn these local abstractions without a teacher? More specifically, how can it decide where and when to start learning a new abstraction? A recently proposed algorithm called Curious Dr. MISFA addresses this problem. The algorithm is based on two underlying learning principles: artificial curiosity and slowness. The former makes the robot self-motivated to explore by rewarding itself whenever it makes progress in learning an abstraction; the latter updates the abstraction by extracting slowly varying components from raw sensory inputs. Curious Dr. MISFA's application is, however, limited to discrete domains constrained by a pre-defined state space, and it has design limitations that make it unstable in certain situations. This paper presents a significant improvement that is applicable to continuous environments, is computationally less expensive, is simpler to use with fewer hyperparameters, and is stable in certain non-stationary environments. We demonstrate the efficacy and stability of our method in a vision-based robot simulator.
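The two principles named in the abstract can be illustrated with a toy sketch. This is not Curious Dr. MISFA or the method proposed in the paper; it only assumes that "slowness" can be scored as the mean squared temporal difference of a normalised signal and that curiosity reward can be modelled as the drop in an error estimate between updates. The function names `slowness` and `curiosity_rewards`, the sine-wave inputs, and the error values are all hypothetical choices made for illustration.

```python
import math


def slowness(signal):
    """Mean squared temporal difference of the signal after normalising it
    to zero mean and unit variance. Smaller values mean a slower signal.
    (Illustrative measure only; an assumption, not the paper's algorithm.)"""
    n = len(signal)
    mean = sum(signal) / n
    var = sum((x - mean) ** 2 for x in signal) / n
    if var == 0.0:
        raise ValueError("a constant signal carries no information")
    z = [(x - mean) / math.sqrt(var) for x in signal]
    return sum((b - a) ** 2 for a, b in zip(z, z[1:])) / (n - 1)


def curiosity_rewards(errors):
    """Intrinsic reward modelled as learning progress: the decrease in an
    error estimate between consecutive updates (hypothetical error values)."""
    return [prev - curr for prev, curr in zip(errors, errors[1:])]


# Two toy "sensor" channels sampled at the same time steps.
t = [2 * math.pi * k / 200 for k in range(200)]
slow = [math.sin(x) for x in t]       # one period: slowly varying
fast = [math.sin(11 * x) for x in t]  # eleven periods: quickly varying

# The slowly varying channel scores lower on the slowness measure.
print(slowness(slow) < slowness(fast))

# Reward is positive while the error keeps dropping and zero once it stalls.
print(curiosity_rewards([1.0, 0.5, 0.25, 0.25]))
```

In this sketch, a learner guided by slowness would prefer the first channel, and a learner guided by curiosity would lose interest once the reward reaches zero, which is the intuition behind moving on to a new abstraction.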
