Intersection Prediction from Single 360° Image via Deep Detection of Possible Direction of Travel


Apr 10, 2022

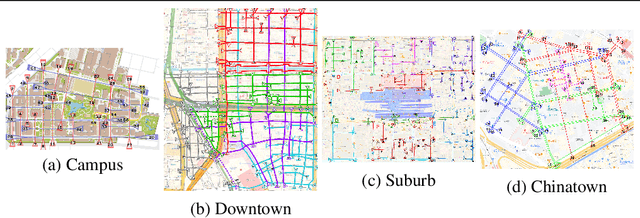

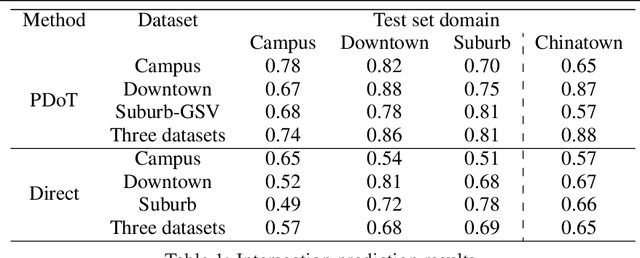

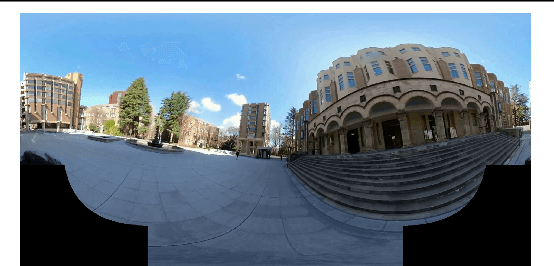

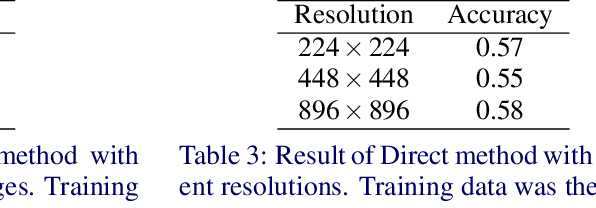

Movie-Map, an interactive first-person-view map that engages the user in a simulated walking experience, comprises short 360° video segments separated by traffic intersections that are seamlessly connected according to the viewer's direction of travel. However, in wide urban-scale areas with numerous intersecting roads, manual intersection segmentation requires significant human effort. Therefore, automatic identification of intersections from 360° videos is an important problem for scaling up Movie-Map. In this paper, we propose a novel method that identifies an intersection from individual frames in 360° videos. Instead of formulating intersection identification as a standard binary classification task with a 360° image as input, we project a single 360° image into perspective images in eight directions, use a neural network to detect the possible directions of travel (PDoT) in each, and identify an intersection based on the number of detected PDoT, which lets the method handle various types of intersections. We constructed a large-scale 360° Image Intersection Identification (iii360) dataset for training and evaluation, with 360° videos collected from various areas such as a school campus, downtown, a suburb, and Chinatown, and demonstrate that our PDoT-based method achieves 88% accuracy, which is significantly better than that achieved by the naive direct binary classification method. The source code and a partial dataset will be shared with the community after the paper is published.
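The pipeline described in the abstract can be sketched roughly as follows. This is only an illustration under stated assumptions, not the authors' implementation: the 45° yaw spacing, the 90° field of view, the nearest-neighbor sampling, the function names, and the "at least three directions" decision rule are all hypothetical choices made here, and the neural-network PDoT detector is replaced by a list of per-view flags supplied by the caller.

```python
import numpy as np

def perspective_view(equi, yaw_deg, fov_deg=90.0, size=64):
    """Sample a pinhole view facing yaw_deg from an equirectangular image.

    Nearest-neighbor sampling; fov_deg and size are illustrative defaults.
    """
    h, w = equi.shape[:2]
    # Focal length in pixels for the requested horizontal field of view.
    f = 0.5 * size / np.tan(np.radians(fov_deg) / 2.0)
    # Pixel grid centered on the optical axis (y grows downward).
    coords = np.arange(size) - (size - 1) / 2.0
    x, y = np.meshgrid(coords, coords)
    z = np.full_like(x, f)
    # Ray direction as longitude (around the vertical axis) and latitude.
    lon = np.arctan2(x, z) + np.radians(yaw_deg)
    lat = np.arctan2(-y, np.hypot(x, z))
    # Map angles to equirectangular pixel coordinates, wrapping longitude.
    u = ((lon / (2.0 * np.pi) + 0.5) % 1.0) * w
    v = (0.5 - lat / np.pi) * h
    ui = np.clip(u.astype(int), 0, w - 1)
    vi = np.clip(v.astype(int), 0, h - 1)
    return equi[vi, ui]

def eight_views(equi, **kwargs):
    """Project one 360° frame into eight views spaced 45° apart (assumed spacing)."""
    yaws = [k * 45.0 for k in range(8)]
    return yaws, [perspective_view(equi, yaw, **kwargs) for yaw in yaws]

def is_intersection(pdot_flags, min_directions=3):
    """Decide from per-view PDoT flags; the threshold of 3 is an assumption.

    The reasoning behind the assumed threshold: a plain road segment offers
    two directions (forward and back), so three or more suggests a junction.
    """
    return sum(bool(flag) for flag in pdot_flags) >= min_directions
```

In use, each of the eight views would be passed to a trained PDoT detector, and the resulting flags handed to `is_intersection`. Counting directions rather than classifying the whole panorama is what allows one rule to cover T-junctions, crossroads, and other layouts.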
