Integration of Text and Graph-based Features for Detecting Mental Health Disorders from Voice

Paper and Code

May 14, 2022

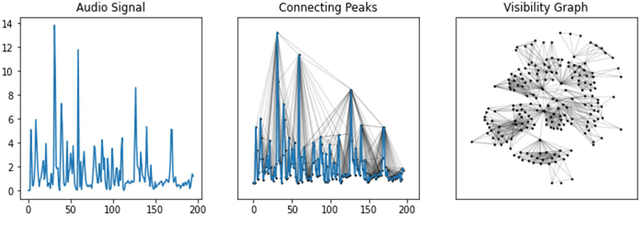

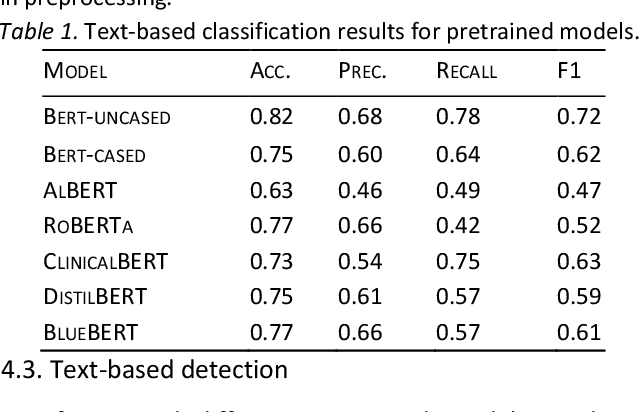

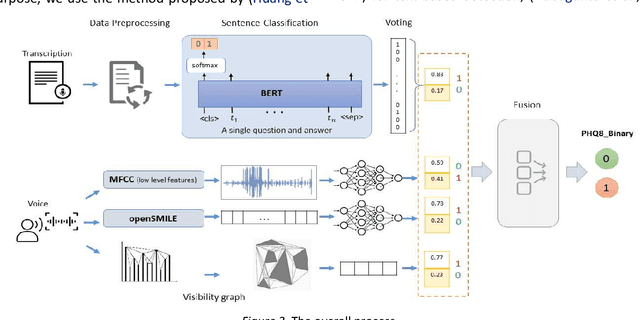

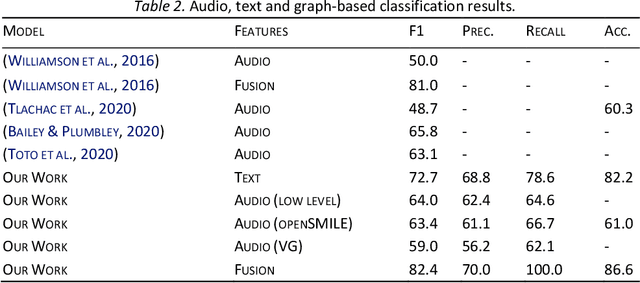

With the availability of voice-enabled devices such as smartphones, mental health disorders could be detected and treated earlier, particularly in the post-pandemic period. Current methods extract features directly from audio signals. In this paper, two methods are used to enrich voice analysis for depression detection: graph transformation of voice signals, and natural language processing of the transcript based on representation learning. The two are fused to produce the final class labels. Experiments on the DAIC-WOZ dataset suggest that integrating text-based voice classification with learning from low-level and graph-based voice signal features can improve the detection of mental disorders such as depression.
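The abstract does not say which graph transformation or fusion rule the paper uses, so the following is only a minimal sketch under stated assumptions. It assumes a natural visibility graph as the signal-to-graph mapping and a weighted average of two classifier probabilities as the fusion step. The function names, the `weight_text` parameter, and the 0.5 threshold are all hypothetical and chosen for illustration.

```python
from typing import List, Sequence, Set, Tuple


def natural_visibility_graph(signal: Sequence[float]) -> Set[Tuple[int, int]]:
    """Return undirected edges (i, j), i < j, of a natural visibility graph.

    ASSUMPTION: the paper's graph transformation is not specified in the
    abstract; the visibility graph is one common way to map a signal to a graph.
    """
    edges: Set[Tuple[int, int]] = set()
    n = len(signal)
    for i in range(n - 1):
        for j in range(i + 1, n):
            # Samples i and j are connected if every sample between them lies
            # strictly below the straight line joining (i, s_i) and (j, s_j).
            visible = all(
                signal[k] < signal[i] + (signal[j] - signal[i]) * (k - i) / (j - i)
                for k in range(i + 1, j)
            )
            if visible:
                edges.add((i, j))
    return edges


def degree_sequence(edges: Set[Tuple[int, int]], n: int) -> List[int]:
    """Node degrees, a simple example of a graph-based feature vector."""
    degrees = [0] * n
    for i, j in edges:
        degrees[i] += 1
        degrees[j] += 1
    return degrees


def late_fusion(p_text: float, p_audio: float,
                weight_text: float = 0.5, threshold: float = 0.5) -> int:
    """Fuse two depression probabilities into a single class label (0 or 1).

    ASSUMPTION: weighted averaging is a stand-in; the paper's actual fusion
    scheme is not described in the abstract.
    """
    fused = weight_text * p_text + (1.0 - weight_text) * p_audio
    return int(fused >= threshold)


if __name__ == "__main__":
    frame = [1.0, 3.0, 2.0, 4.0]  # toy stand-in for a short voice frame
    graph = natural_visibility_graph(frame)
    print(sorted(graph))
    print(degree_sequence(graph, len(frame)))
    print(late_fusion(p_text=0.8, p_audio=0.3))
```

In a real pipeline, the graph features would feed an audio classifier and the transcript would feed a text classifier. The two probabilities passed to `late_fusion` here are placeholders for those model outputs.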
