Integrating Intrinsic and Extrinsic Explainability: The Relevance of Understanding Neural Networks for Human-Robot Interaction

Oct 09, 2020

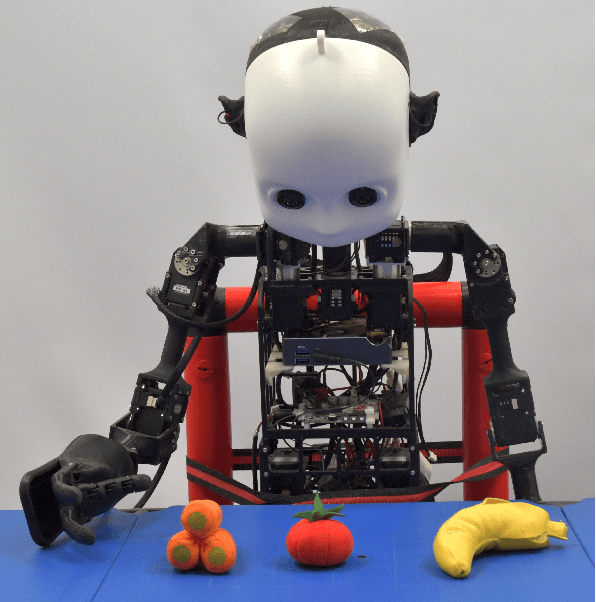

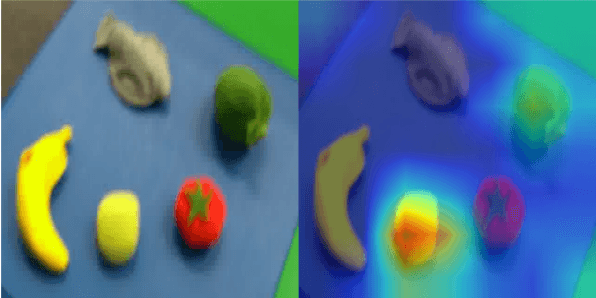

Explainable artificial intelligence (XAI) can help foster trust in and acceptance of intelligent and autonomous systems. Moreover, understanding the motivation for an agent's behavior results in better and more successful collaborations between robots and humans. However, not only can humans benefit from a robot's explanations; the robot itself can also benefit from explanations given to it. Currently, most attention is paid to explaining deep neural networks and black-box models, yet many of these approaches are not applicable to humanoid robots. Therefore, this position paper describes current problems with adapting XAI methods to explainable neurorobotics. Furthermore, it introduces NICO, an open-source humanoid robot platform, and describes how the interaction of intrinsic explanations by the robot itself and extrinsic explanations provided by the environment enables efficient robotic behavior.
