Instance-Optimality in the Noisy Value- and Comparison-Model --- Accept, Accept, Strong Accept: Which Papers get in?


Nov 05, 2018

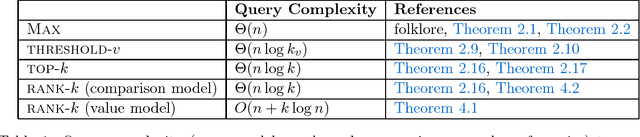

Motivated by crowdsourced computation, peer-grading, and recommendation systems, Braverman, Mao and Weinberg [STOC'16] studied the \emph{query} and \emph{round} complexity of fundamental problems such as finding the maximum (\textsc{max}), finding all elements above a certain value (\textsc{threshold-$v$}), or computing the top-$k$ elements (\textsc{Top}-$k$) in a noisy environment. For example, consider the task of selecting papers for a conference. This task is challenging due to the crowdsourcing nature of peer reviews: the results of reviews are noisy, and it is necessary to parallelize the review process as much as possible. We study the noisy value model and the noisy comparison model. In the \emph{noisy value model}, a reviewer is asked to evaluate a single element: "What is the value of paper $i$?" (e.g., accept). In the \emph{noisy comparison model} (introduced in the seminal work of Feige, Peleg, Raghavan and Upfal [SICOMP'94]), a reviewer is asked to do a pairwise comparison: "Is paper $i$ better than paper $j$?" In this paper, we show optimal worst-case query complexity for the \textsc{max}, \textsc{threshold-$v$} and \textsc{Top}-$k$ problems. For \textsc{max} and \textsc{Top}-$k$, we obtain optimal worst-case upper and lower bounds on the round vs. query complexity in both models. For \textsc{threshold-$v$}, we obtain optimal query complexity and nearly-optimal round complexity (where $k$ is the size of the output) for both models. We then go beyond the worst case and address the question of the importance of knowledge of the instance by providing, for a large range of parameters, instance-optimal algorithms with respect to the query complexity. Furthermore, we show that the value model is strictly easier than the comparison model.
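To make the noisy comparison model concrete, the following is a minimal illustrative sketch and not the paper's algorithm. It assumes each pairwise query is answered incorrectly with a fixed, independent probability, and it uses plain majority voting inside a sequential scan as a baseline for \textsc{max}. The names `noisy_compare`, `majority_compare`, `noisy_max` and the parameters `error_prob` and `repeats` are hypothetical and chosen only for illustration.

```python
import random


def noisy_compare(values, i, j, rng, error_prob=0.2):
    """One noisy query: 'Is element i better than element j?'

    The true answer is flipped with probability error_prob (assumed
    independent across queries).
    """
    truth = values[i] > values[j]
    return truth if rng.random() >= error_prob else not truth


def majority_compare(values, i, j, rng, repeats=15, error_prob=0.2):
    """Repeat the same noisy query and return the majority answer."""
    votes = sum(
        noisy_compare(values, i, j, rng, error_prob) for _ in range(repeats)
    )
    return 2 * votes > repeats


def noisy_max(values, rng, repeats=15, error_prob=0.2):
    """Naive baseline: scan the elements, keeping the current winner.

    Uses (n - 1) * repeats queries and n - 1 sequential rounds, so it
    is neither query- nor round-efficient; it only shows the model.
    """
    best = 0
    for i in range(1, len(values)):
        if majority_compare(values, i, best, rng, repeats, error_prob):
            best = i
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    scores = [3, 1, 4, 1, 5, 9, 2, 6]
    print("index of reported maximum:", noisy_max(scores, rng))
```

With `error_prob=0.0` the scan reduces to an exact argmax; with noise, increasing `repeats` lowers the chance that any single comparison is decided wrongly, at the cost of more queries.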
