Infra-YOLO: Efficient Neural Network Structure with Model Compression for Real-Time Infrared Small Object Detection


Aug 14, 2024

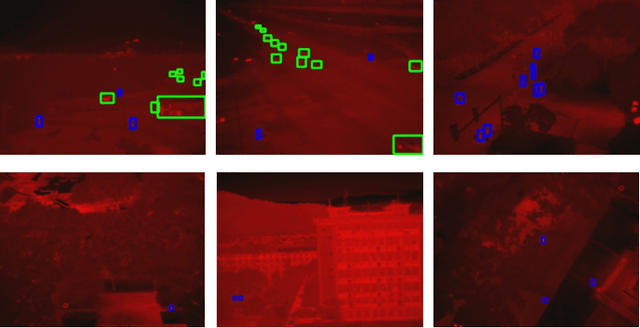

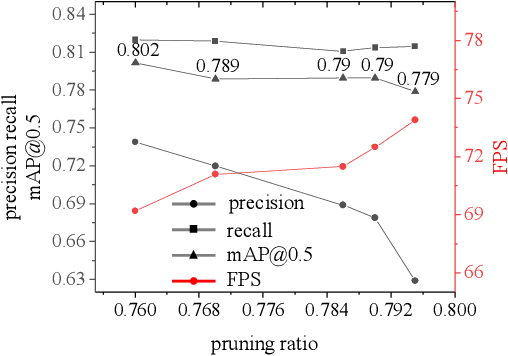

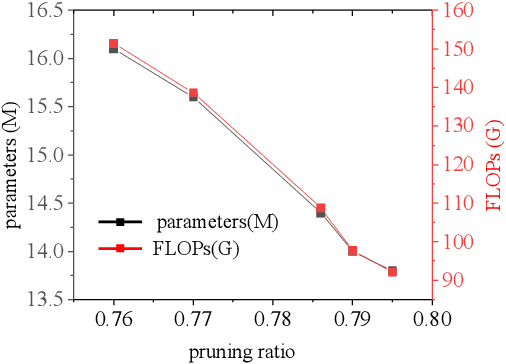

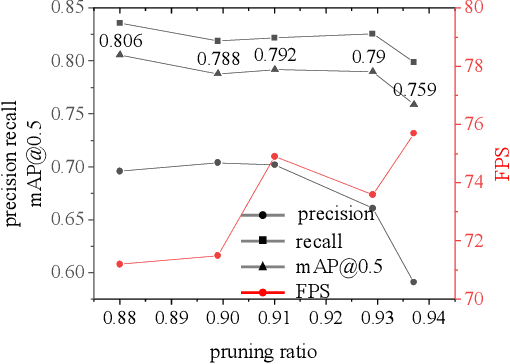

Although convolutional neural networks have achieved outstanding results in visible-light target detection, infrared small object detection remains challenging because of the low signal-to-noise ratio, incomplete object structure, and the lack of reliable infrared small object datasets. To address the dataset limitation, a new dataset named InfraTiny was constructed, containing 3,218 images and 20,893 bounding boxes in total, of which more than 85% are smaller than 32x32 pixels. A multi-scale attention mechanism module (MSAM) and a Feature Fusion Augmentation Pyramid Module (FFAFPM) were proposed and deployed onto embedded devices. The MSAM enables the network to obtain scale perception information by acquiring different receptive fields, while suppressing background noise to strengthen feature extraction. The FFAFPM enriches semantic information and enhances the fusion of shallow and deep features, which significantly reduces false positives. Integrating the proposed modules into the YOLO model yields Infra-YOLO, which improves infrared small object detection performance: on the InfraTiny dataset, mAP@0.5 improves by 2.7% compared to YOLOv3 and by 2.5% compared to YOLOv4. Infra-YOLO was also deployed on an embedded device in an unmanned aerial vehicle (UAV) for real application scenarios, where channel pruning is adopted to reduce FLOPs and to achieve a tradeoff between speed and accuracy. Even when the parameters of Infra-YOLO are reduced by 88% through pruning, it still achieves a gain of 0.7% on mAP@0.5 compared to YOLOv3 and a gain of 0.5% compared to YOLOv4. Experimental results show that the proposed MSAM and FFAFPM improve infrared small object detection performance compared with the previous benchmark methods.
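The abstract does not say which channel pruning criterion Infra-YOLO uses, so the following is only a minimal sketch of the general idea, assuming a common stand-in criterion: rank each output channel of a convolution layer by the L1 norm of its weights and drop the lowest-ranked fraction. The function names, the flattened-weight representation, and the `prune_ratio` parameter are hypothetical and chosen for illustration.

```python
from typing import List, Sequence, Tuple


def l1_channel_scores(filters: Sequence[Sequence[float]]) -> List[float]:
    """Return the L1 norm of each output channel's flattened weights."""
    return [sum(abs(w) for w in channel) for channel in filters]


def select_channels_to_keep(
    filters: Sequence[Sequence[float]], prune_ratio: float
) -> List[int]:
    """Return sorted indices of the channels that survive pruning.

    Assumption: importance is the L1 norm of the channel's weights.
    This is an illustrative criterion, not necessarily the paper's.
    """
    if not 0.0 <= prune_ratio < 1.0:
        raise ValueError("prune_ratio must be in [0, 1)")
    scores = l1_channel_scores(filters)
    # Always keep at least one channel so the layer stays usable.
    n_keep = max(1, round(len(scores) * (1.0 - prune_ratio)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


def prune_layer(
    filters: Sequence[Sequence[float]], prune_ratio: float
) -> Tuple[List[List[float]], List[int]]:
    """Return the pruned weights together with the kept channel indices."""
    keep = select_channels_to_keep(filters, prune_ratio)
    return [list(filters[i]) for i in keep], keep


# Toy layer with four output channels, each flattened to two weights.
toy_filters = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4], [-0.03, 0.0]]
pruned, kept = prune_layer(toy_filters, prune_ratio=0.5)
print(kept)
```

In a real network, removing an output channel also requires removing the matching input channel of the next layer, and the pruned model is usually fine-tuned afterwards to recover accuracy; both steps are omitted here.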
