Inference on the change point in high dimensional time series models via plug in least squares

Jul 11, 2020

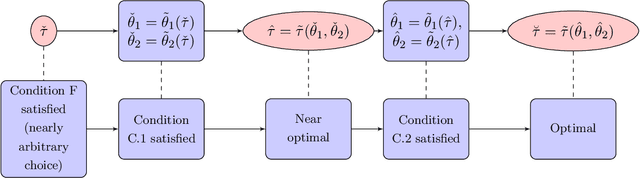

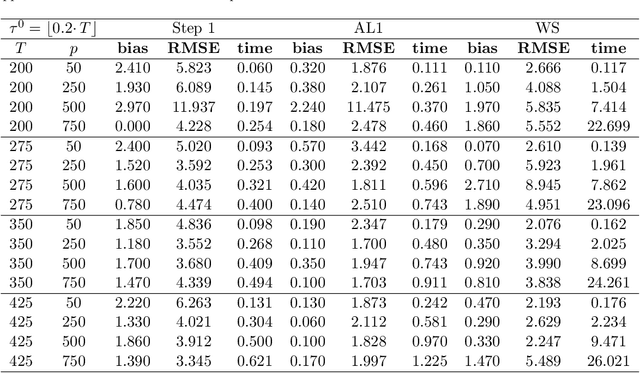

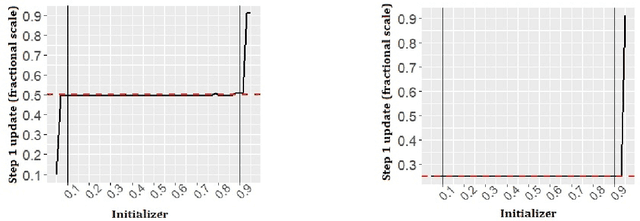

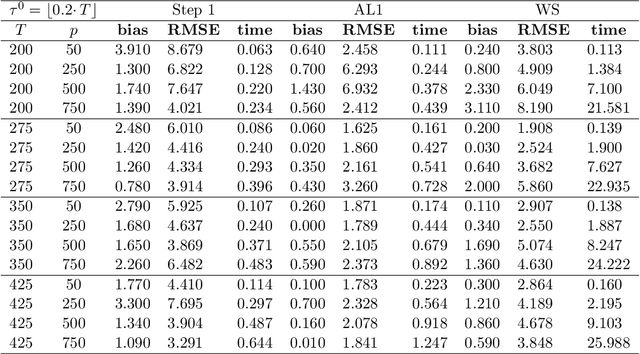

We study a plug-in least squares estimator for the change point parameter when the change is in the mean of a high-dimensional random vector under subgaussian or subexponential distributions. We obtain sufficient conditions under which this estimator is adaptive enough against plug-in estimates of the mean parameters to yield an optimal rate of convergence $O_p(\xi^{-2})$ in the integer scale. This rate is preserved while allowing high dimensionality as well as a potentially diminishing jump size $\xi$, provided $s\log (p\vee T)=o(\sqrt{Tl_T})$ or $s\log^{3/2}(p\vee T)=o(\sqrt{Tl_T})$ in the subgaussian and subexponential cases, respectively. Here $s$, $p$, $T$ and $l_T$ represent a sparsity parameter, the model dimension, the sampling period and the separation of the change point from its parametric boundary. Moreover, since the rate of convergence is free of $s$, $p$ and logarithmic terms of $T$, it allows the existence of limiting distributions. These distributions are then derived as the argmax of a two-sided negative drift Brownian motion or a two-sided negative drift random walk under the vanishing and non-vanishing jump size regimes, respectively, thereby allowing inference on the change point parameter in the high-dimensional setting. Feasible algorithms for implementing the proposed methodology are provided. Theoretical results are supported by Monte Carlo simulations.
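To make the idea in the abstract concrete, the following is a minimal sketch of a plug-in least squares change point estimate. It assumes the criterion takes the usual form $Q(\tau)=\sum_{t\le\tau}\|x_t-\hat\mu_1\|_2^2+\sum_{t>\tau}\|x_t-\hat\mu_2\|_2^2$, minimized over $\tau\in\{1,\dots,T-1\}$ with the mean estimates $\hat\mu_1,\hat\mu_2$ held fixed. The function names, the soft-thresholded sample means, the tuning constant `lam` and the midpoint initial split are illustrative assumptions, not the algorithm or tuning described in the paper.

```python
import numpy as np


def plug_in_change_point(x, mu1_hat, mu2_hat):
    """Minimize the plug-in least squares criterion over tau = 1, ..., T-1.

    x is a (T, p) array; mu1_hat and mu2_hat are fixed (plug-in) estimates
    of the pre-change and post-change mean vectors.
    """
    x = np.asarray(x, dtype=float)
    # Squared distance of each observation to each plug-in mean.
    d1 = np.sum((x - mu1_hat) ** 2, axis=1)
    d2 = np.sum((x - mu2_hat) ** 2, axis=1)
    # Q(tau) = sum_{t <= tau} d1[t] + sum_{t > tau} d2[t], via cumulative sums.
    left = np.cumsum(d1)
    right = d2.sum() - np.cumsum(d2)
    q = left[:-1] + right[:-1]  # entry k-1 corresponds to tau = k
    return int(np.argmin(q)) + 1


def soft_threshold(v, lam):
    """Coordinate-wise soft thresholding, used here as a sparse mean estimate."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)


def demo(T=200, p=50, s=5, tau0=80, jump=1.0, seed=0):
    """Simulate a sparse mean shift and run two plug-in updates (illustrative only)."""
    rng = np.random.default_rng(seed)
    mu2 = np.zeros(p)
    mu2[:s] = jump  # only the first s coordinates change
    x = rng.standard_normal((T, p))
    x[tau0:] += mu2

    tau_hat = T // 2  # hypothetical initial guess: the midpoint
    for _ in range(2):
        # Hypothetical tuning choice for the threshold level.
        lam1 = np.sqrt(2.0 * np.log(p) / tau_hat)
        lam2 = np.sqrt(2.0 * np.log(p) / (T - tau_hat))
        mu1_hat = soft_threshold(x[:tau_hat].mean(axis=0), lam1)
        mu2_hat = soft_threshold(x[tau_hat:].mean(axis=0), lam2)
        tau_hat = plug_in_change_point(x, mu1_hat, mu2_hat)
    return tau_hat


if __name__ == "__main__":
    print("estimated change point:", demo())
```

Because the means are held fixed, the criterion reduces to two cumulative sums, so each update costs $O(Tp)$. The sketch only illustrates the estimator; it does not reproduce the paper's conditions on the mean estimates or its inference procedure.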
