Inductive Relation Prediction on Knowledge Graphs


Nov 16, 2019

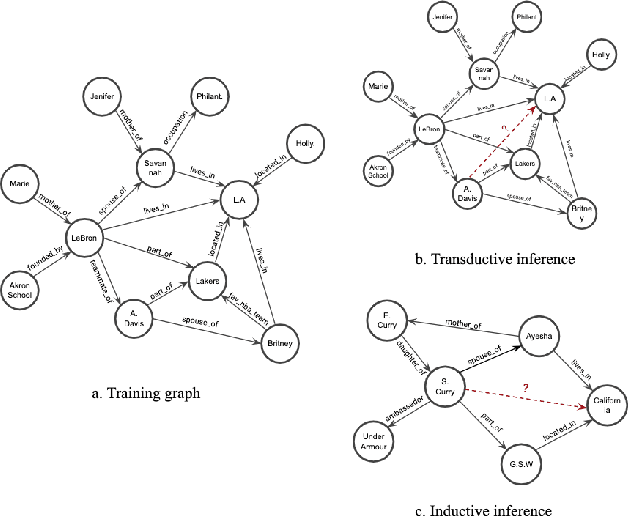

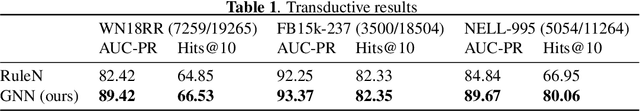

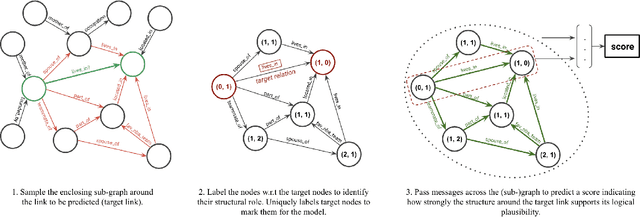

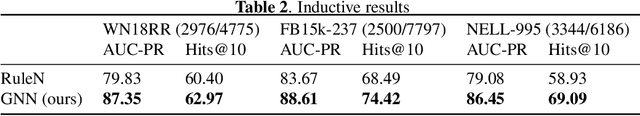

Inferring missing edges in multi-relational knowledge graphs is a fundamental task in statistical relational learning. However, previous work has largely focused on the transductive relation prediction problem, where missing edges must be predicted for a single, fixed graph. In contrast, many real-world situations require relation prediction on dynamic or previously unseen knowledge graphs (e.g., for question answering, dialogue, or e-commerce applications). Here, we develop a novel graph neural network (GNN) architecture to perform inductive relation prediction and provide a systematic comparison between this GNN approach and a strong, rule-based baseline. Our results highlight the significant difficulty of inductive relational learning, compared to the transductive case, and offer a new challenging set of inductive benchmarks for knowledge graph completion.
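The contrast between the two settings can be made concrete with a small sketch. It assumes one common reading of "previously unseen": the test graph shares no entities with the training graph but reuses the same relation types. The abstract does not spell out this condition, and the toy triples and the helper name `is_inductive_split` are hypothetical, chosen only for illustration.

```python
from typing import Iterable, Set, Tuple

# A knowledge-graph edge as (head entity, relation type, tail entity).
Triple = Tuple[str, str, str]


def entities(triples: Iterable[Triple]) -> Set[str]:
    """Collect every entity that appears as a head or a tail."""
    return {e for h, _, t in triples for e in (h, t)}


def relations(triples: Iterable[Triple]) -> Set[str]:
    """Collect every relation type that appears in the triples."""
    return {r for _, r, _ in triples}


def is_inductive_split(train: Iterable[Triple], test: Iterable[Triple]) -> bool:
    """Assumed criterion (not taken from the paper): the test graph uses
    only entities never seen in training, and only relation types that
    were seen in training."""
    train, test = list(train), list(test)
    unseen_entities = entities(train).isdisjoint(entities(test))
    known_relations = relations(test) <= relations(train)
    return unseen_entities and known_relations


# Toy data, invented for this example.
train_graph = [("alice", "works_at", "acme"), ("acme", "located_in", "berlin")]

# Transductive: the queried edge connects entities already in the training graph.
transductive_test = [("alice", "located_in", "berlin")]

# Inductive: a new graph over entirely new entities, same relation vocabulary.
inductive_test = [("bob", "works_at", "initech"), ("initech", "located_in", "austin")]

print(is_inductive_split(train_graph, transductive_test))
print(is_inductive_split(train_graph, inductive_test))
```

Under this assumed criterion, a model that learns a separate embedding for each training entity has nothing to look up for `bob` or `initech`, which is one way to see why the inductive case is harder than the transductive one.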
