Incorporating Structured Sentences with Time-enhanced BERT for Fully-inductive Temporal Relation Prediction

Apr 10, 2023

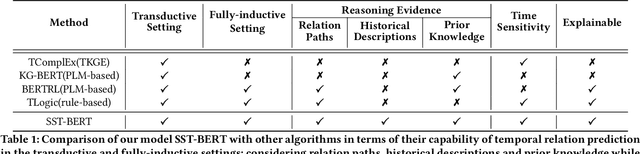

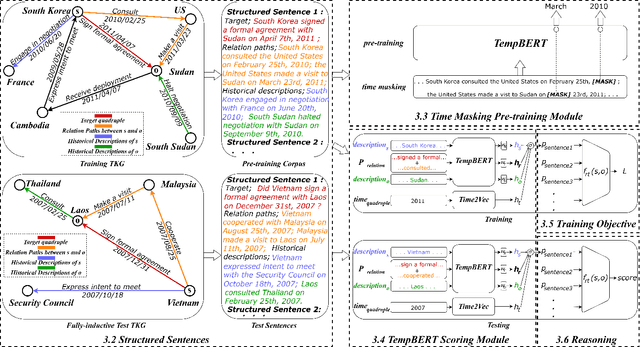

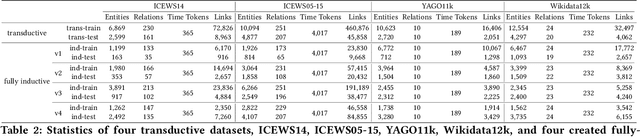

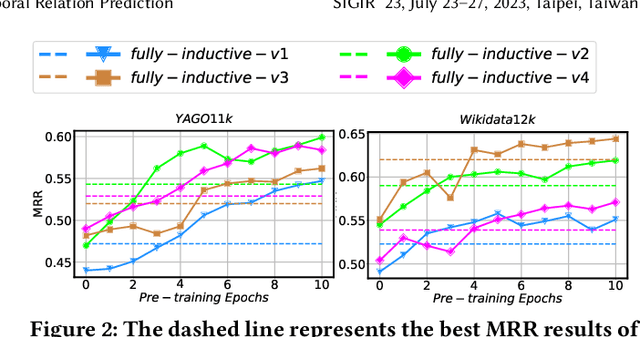

Temporal relation prediction in incomplete temporal knowledge graphs (TKGs) is a popular temporal knowledge graph completion (TKGC) problem in both transductive and inductive settings. Traditional embedding-based TKGC models (TKGE) rely on structured connections and can only handle a fixed set of entities, i.e., the transductive setting. In the inductive setting, where test TKGs contain emerging entities, the latest methods are based on symbolic rules or pre-trained language models (PLMs). However, rule-based methods are inflexible, and PLM-based methods are not time-specific. In this work, we extend the fully-inductive setting, where entities in the training and test sets are totally disjoint, to TKGs and take a further step towards a more flexible and time-sensitive temporal relation prediction approach, SST-BERT, which incorporates Structured Sentences with Time-enhanced BERT. Our model can obtain the entity history and implicitly learn rules in the semantic space by encoding structured sentences, solving the problem of inflexibility. We propose a time-masking masked language modeling (MLM) task to pre-train BERT on a corpus rich in temporal tokens specially generated for TKGs, enhancing the time sensitivity of SST-BERT. To compute the probability of occurrence of a target quadruple, we aggregate all its structured sentences from both temporal and semantic perspectives into a score. Experiments on the transductive datasets and newly generated fully-inductive benchmarks show that SST-BERT improves over state-of-the-art baselines.
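To make the idea of masking temporal tokens for an MLM objective more concrete, the following is a minimal sketch, not the authors' implementation. It assumes that temporal tokens can be recognized with a simple date pattern and that every matching token is replaced by `[MASK]`. The function name `mask_temporal_tokens`, the regular expression, the `mask_prob` parameter, and the example sentence are all hypothetical and chosen only for illustration.

```python
import random
import re

# Assumption: a "temporal token" is a 4-digit year or an ISO-style date.
# The actual corpus and token definition used for SST-BERT may differ.
TEMPORAL = re.compile(r"^\d{4}(-\d{2}(-\d{2})?)?$")


def mask_temporal_tokens(tokens, mask_prob=1.0, mask_token="[MASK]", rng=None):
    """Mask temporal tokens and return (masked_tokens, labels).

    labels[i] holds the original token where a mask was applied and None
    elsewhere, mirroring how MLM targets are usually restricted to the
    masked positions.
    """
    rng = rng or random.Random(0)
    masked, labels = [], []
    for tok in tokens:
        if TEMPORAL.match(tok) and rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


# Hypothetical structured sentence built from a quadruple
# (subject, relation, object, timestamp).
sentence = "entity_a consulted entity_b on 2014-06-05 and again in 2015".split()
masked, labels = mask_temporal_tokens(sentence)
print(" ".join(masked))
```

With `mask_prob=1.0` every token matching the pattern is masked, so the model would have to recover each timestamp from its context; lowering `mask_prob` would leave some timestamps visible.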
