Incorporating GAN for Negative Sampling in Knowledge Representation Learning

Paper and Code

Sep 23, 2018

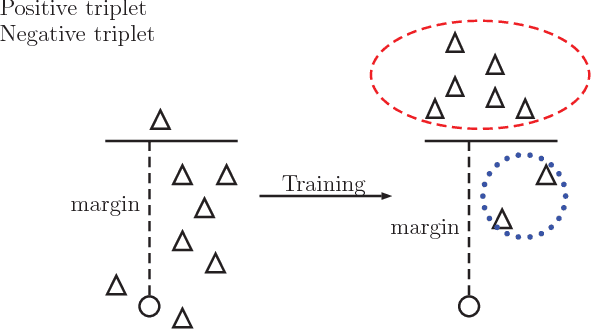

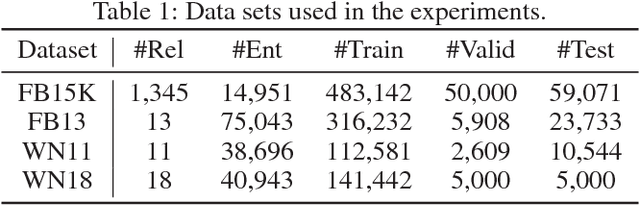

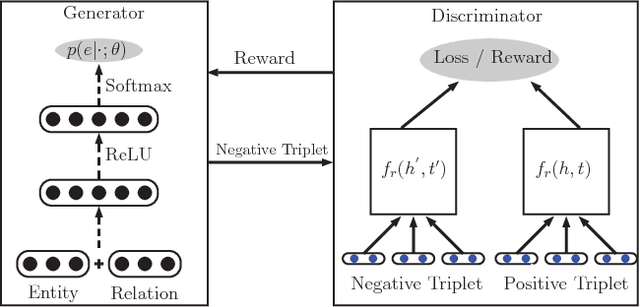

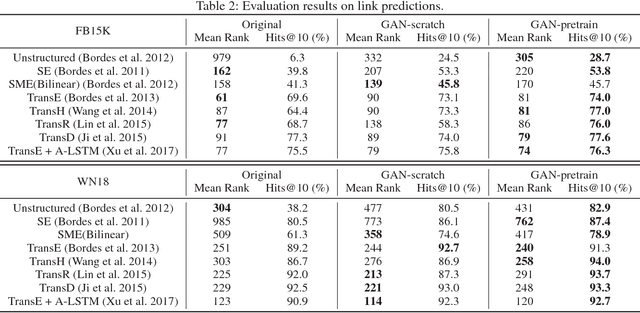

Knowledge representation learning aims at modeling knowledge graph by encoding entities and relations into a low dimensional space. Most of the traditional works for knowledge embedding need negative sampling to minimize a margin-based ranking loss. However, those works construct negative samples through a random mode, by which the samples are often too trivial to fit the model efficiently. In this paper, we propose a novel knowledge representation learning framework based on Generative Adversarial Networks (GAN). In this GAN-based framework, we take advantage of a generator to obtain high-quality negative samples. Meanwhile, the discriminator in GAN learns the embeddings of the entities and relations in knowledge graph. Thus, we can incorporate the proposed GAN-based framework into various traditional models to improve the ability of knowledge representation learning. Experimental results show that our proposed GAN-based framework outperforms baselines on triplets classification and link prediction tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge